Scraping Product Details from Google Shopping with Scrapeless

Advanced Data Extraction Specialist

In today's fiercely competitive global market, web scraping has become a core tool that e-commerce companies and retailers use to stay competitive. By collecting accurate public data from thousands of sources worldwide through proxy networks, companies can build dynamic pricing models, optimize inventory management, track consumer behavior trends, and ultimately offer end users more competitive prices.

This guide shows how to lawfully collect public product data from Google Shopping using professional tools. Whether you are a technical team building your own data pipeline or a business decision-maker looking for market intelligence, it offers a framework that is both practical and strategically useful.

What is Google Shopping?

Google Shopping (formerly known as Google Product Search, Google Products, and Froogle) is a shopping platform where users can browse, compare, and purchase products from a wide range of merchants. It lets consumers easily choose products from a large number of brands, and it gives retailers an efficient online promotion channel. When users click a product link, they are taken directly to the retailer's website to complete the purchase, which makes Google Shopping a powerful tool for increasing product exposure and driving sales.

Overview of Google Shopping results page structure

The data available on Google Shopping is spread across three page types: search, product, and pricing. Here is a brief overview of each:

- Search: Google Shopping's product list contains detailed information about each product, such as ID, title, description, price and inventory status.

- Product: Displays detailed information about a single product, including offers from other retailers and their prices.

- Price: Lists product prices from all retailers and additional information, such as shipping details, total cost and retailer name.

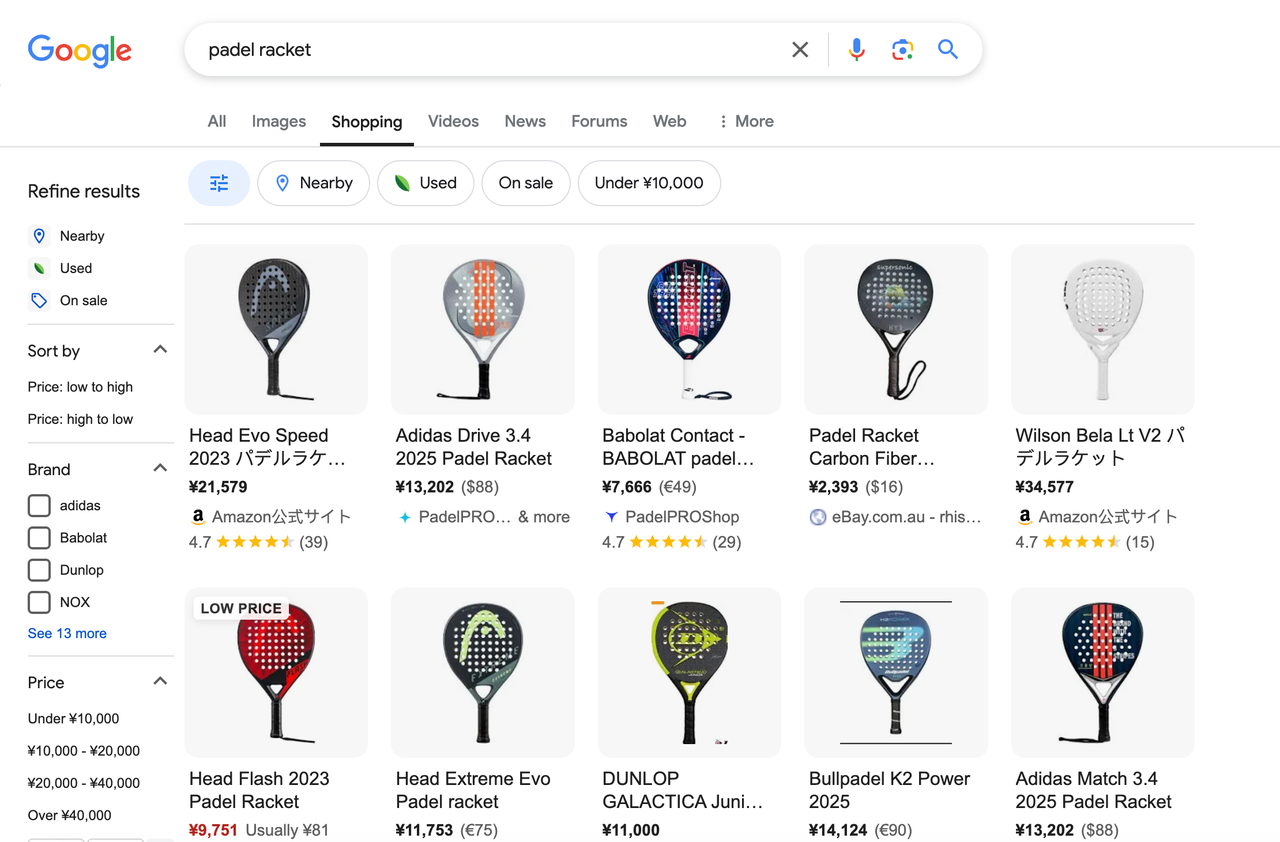

Search results page

The search results page of Google Shopping displays all products related to the user's query. For example, when searching for "padel racket", the page will display the following elements:

- Search bar: allows users to enter keywords to search for products.

- Product list: displays detailed information of all products in the search results.

- Filters: allows users to filter products by price range, color, style, etc.

- Sorting options: supports sorting results by attributes such as ascending/descending price, popularity, etc.

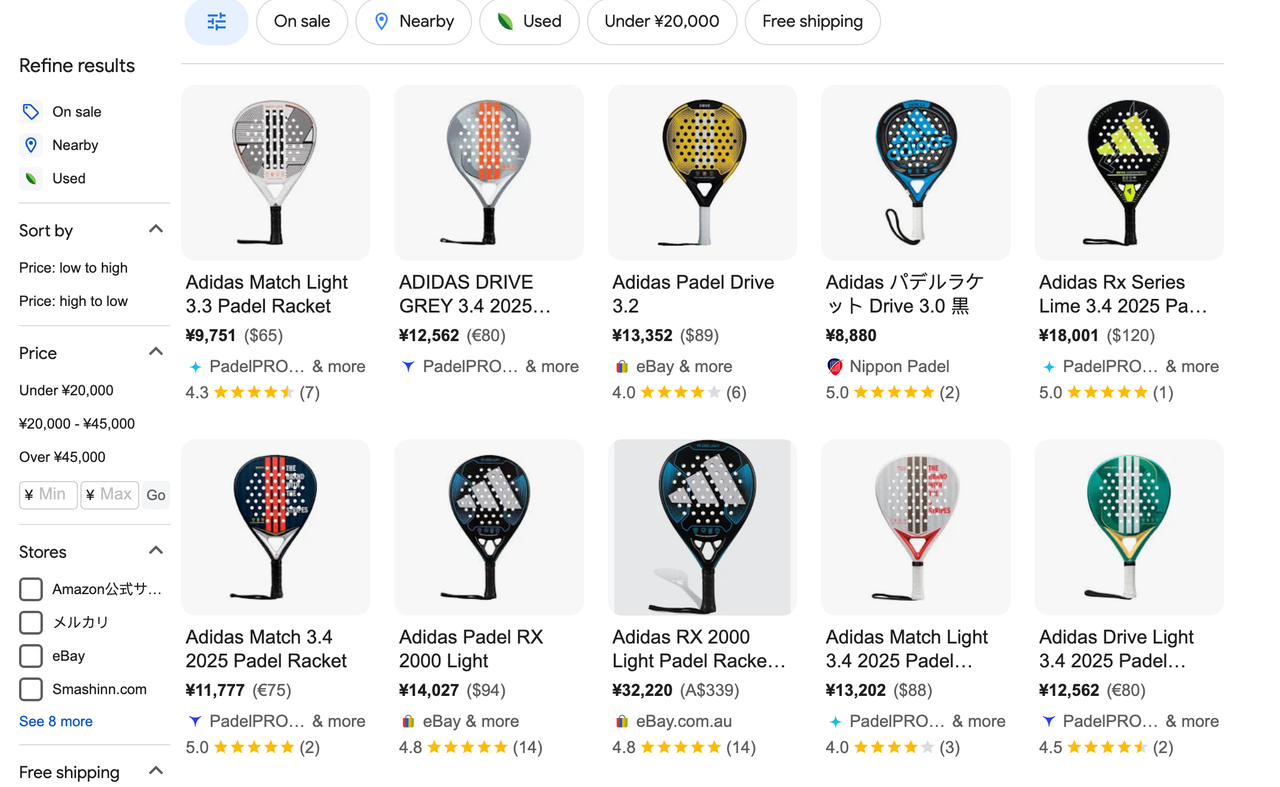

Product page

After clicking on a product from the search results page, users are directed to the product page, which contains the following:

- Product Name: The name of the product.

- Product Highlights: A quick overview of the product's core features.

- Product Details: A detailed description of the product.

- Pricing Information: Prices offered by different retailers.

- Product Reviews: Displays product ratings and customer reviews.

- Price Range: Displays the lowest and highest selling prices from different sellers.

- General Specifications: Provides basic parameters of the product.

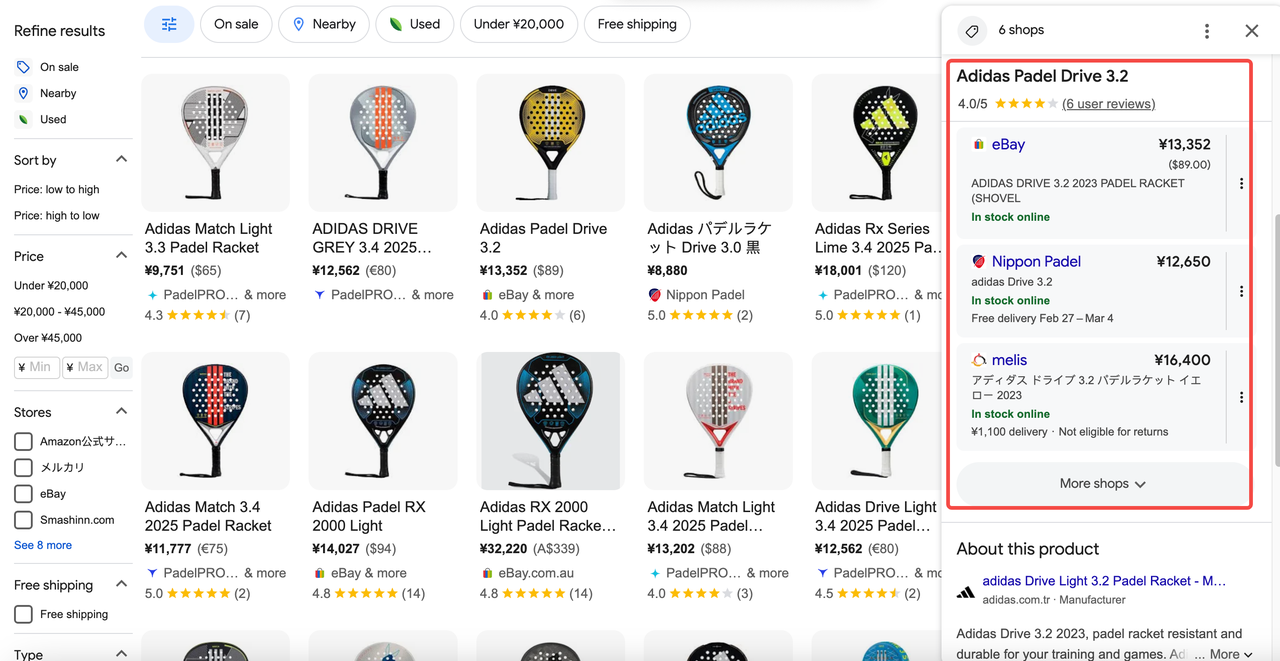

Pricing Page

The Pricing page aggregates product prices from different retailers and displays information such as the retailer's reputation and whether it offers the Google Guarantee. This page contains the following:

- Product Name: The name of the product you searched for.

- Rating: The product's overall rating and number of reviews.

- Prices by Store: Lists retailers' offers, deals, and purchase links.

- Filters: Filters that can be applied to the retailer list.

Is it legal to scrape Google Shopping results?

Scraping data is considered legal in some cases:

- Fair use: In some jurisdictions, fair use allows limited data scraping for purposes such as research, education, or non-commercial use.

- Public data: If the data you want to scrape is publicly available (such as product prices or descriptions on Google Shopping), scraping it is more likely to be permissible.

How to Scrape Google Shopping Results with Python [Complete Guide]

In this guide, we'll walk you through scraping Google Shopping results with Python. Whether you're collecting product details, prices, or reviews, this tutorial gives you step-by-step instructions to set up your scraping environment and start collecting data efficiently. We'll use the Scrapeless Google Shopping API to simplify the process, so you can focus on building your project instead of writing complex scraping logic or handling anti-bot measures.

[Scrapeless API Technical advantages]

- Built-in anti-bot bypass engine (supports Cloudflare and reCAPTCHA v3)

- Automatic processing of dynamically rendered content

- Provides standardized data fields for quick integration and analysis

- Efficient IP proxy pool that supports highly concurrent crawling and avoids IP blocking

- Real-time data updates to ensure the latest Google Shopping information is captured

- Global proxy network, supports multi-regional data crawling, and ensures coverage of product information in different markets

- High scalability, supports large-scale data crawling needs, suitable for enterprise-level applications

Step 1: Set up Python and install the required libraries

First, we need to build a data crawling environment and prepare the following tools:

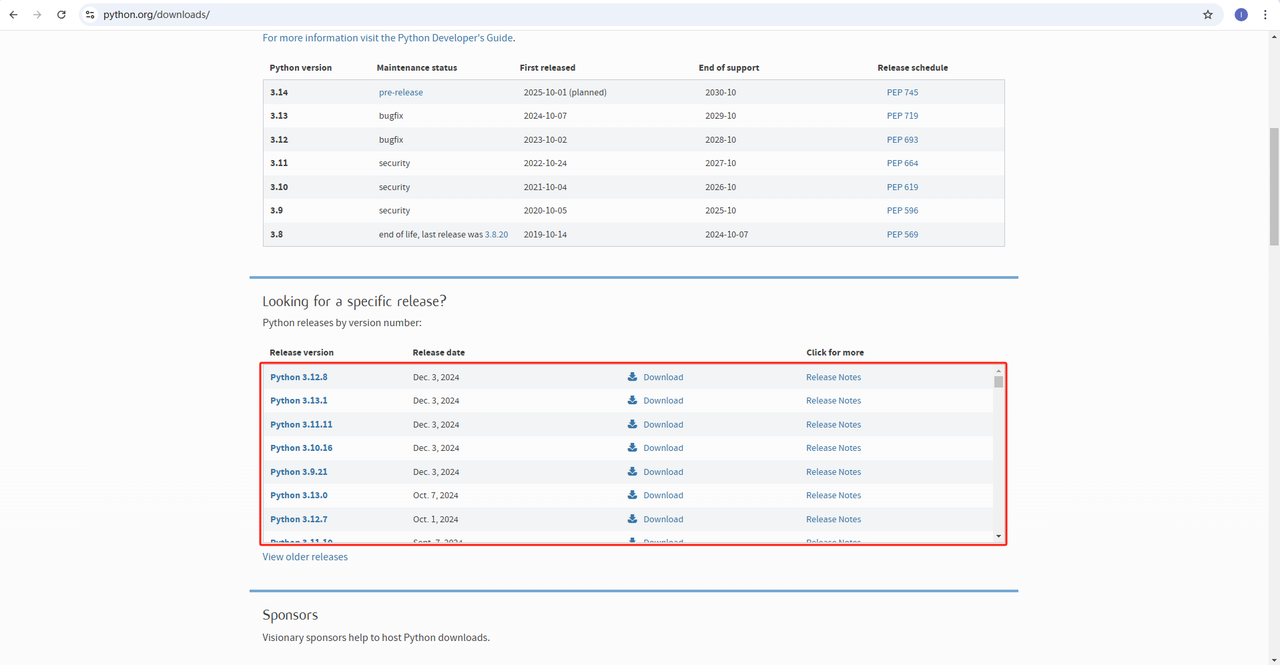

- Python: The interpreter that runs your Python code. You can download it from the official website, as shown in the figure below. Rather than the very latest release, we recommend a version one or two releases behind it.

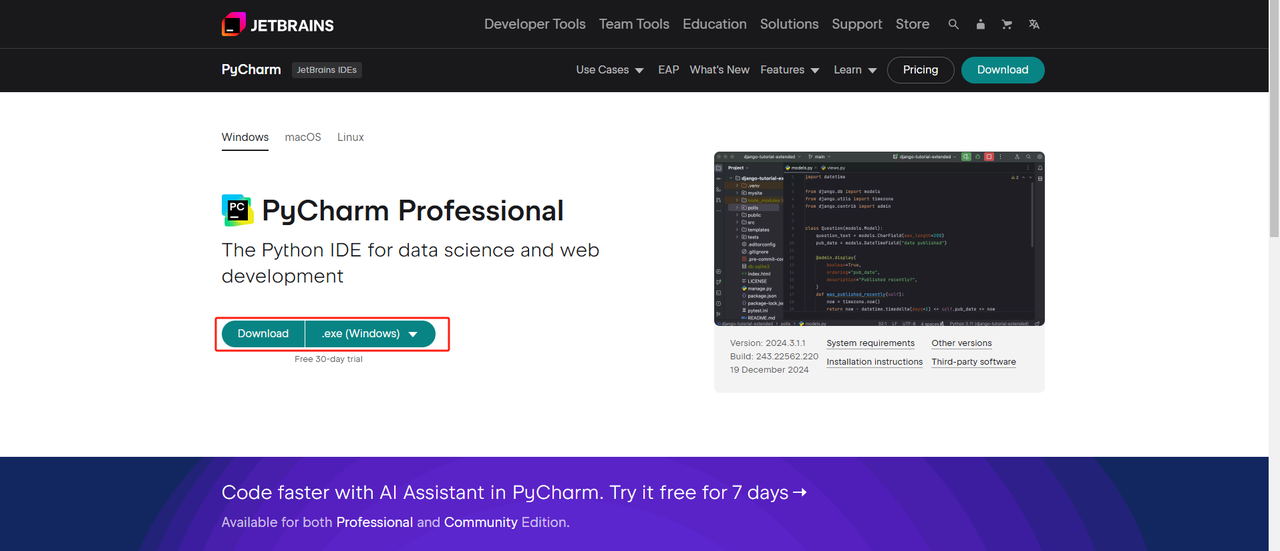

- Python IDE: Any IDE that supports Python will do, but we recommend PyCharm, an IDE designed specifically for Python. The free PyCharm Community Edition is sufficient.

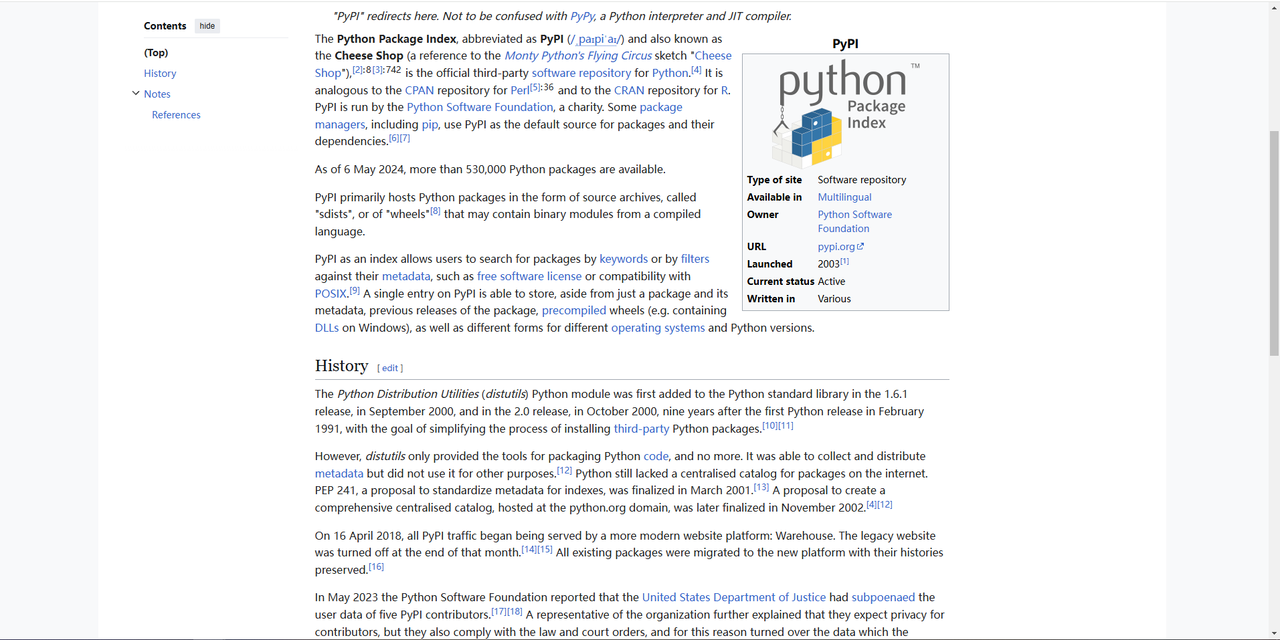

- Pip: The Python package installer, which lets you install the libraries your programs need from the Python Package Index (PyPI) with a single command.

Note: If you are a Windows user, don't forget to check the "Add python.exe to PATH" option in the installation wizard. This lets you run python and pip commands from the terminal. Pip is included by default with Python 3.4 and later, so you don't need to install it manually.
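
Once Python is installed, a quick way to confirm the setup is a short script that reports the interpreter version and checks whether the requests library used later in this guide can be imported. This is a minimal sketch: the 3.8 minimum version is our own assumption for this tutorial, not a Scrapeless requirement, and check_environment is a hypothetical helper name.

```python
import importlib.util
import sys

# Assumed minimum version for this tutorial (not an official requirement).
MIN_VERSION = (3, 8)


def check_environment(min_version=MIN_VERSION, packages=("requests",)):
    """Return a dict describing whether the interpreter and packages are ready."""
    report = {
        "python": ".".join(str(part) for part in sys.version_info[:3]),
        "python_ok": sys.version_info[:2] >= tuple(min_version),
        "missing": [],
    }
    for name in packages:
        # find_spec returns None when a top-level package cannot be found
        if importlib.util.find_spec(name) is None:
            report["missing"].append(name)
    return report


if __name__ == "__main__":
    result = check_environment()
    print("Python", result["python"], "OK" if result["python_ok"] else "is older than", MIN_VERSION)
    for name in result["missing"]:
        print(f"Missing package: {name} (install with: pip install {name})")
```

If requests is reported as missing, running pip install requests in the PyCharm terminal installs it.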

With these steps complete, your environment for scraping Google Shopping data is ready. Next, you will use PyCharm together with Scrapeless to scrape Google Shopping data.

Step 2: Use PyCharm and Scrapeless to scrape Google Shopping data

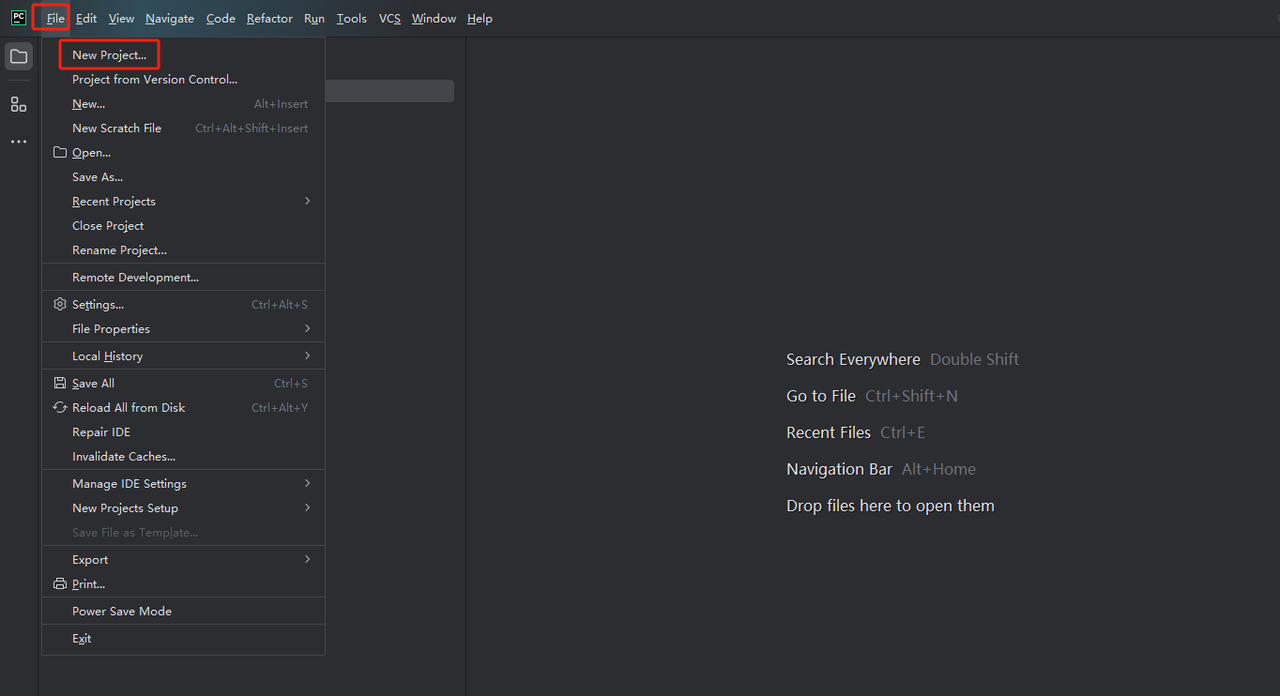

- Launch PyCharm and select File > New Project… from the menu bar.

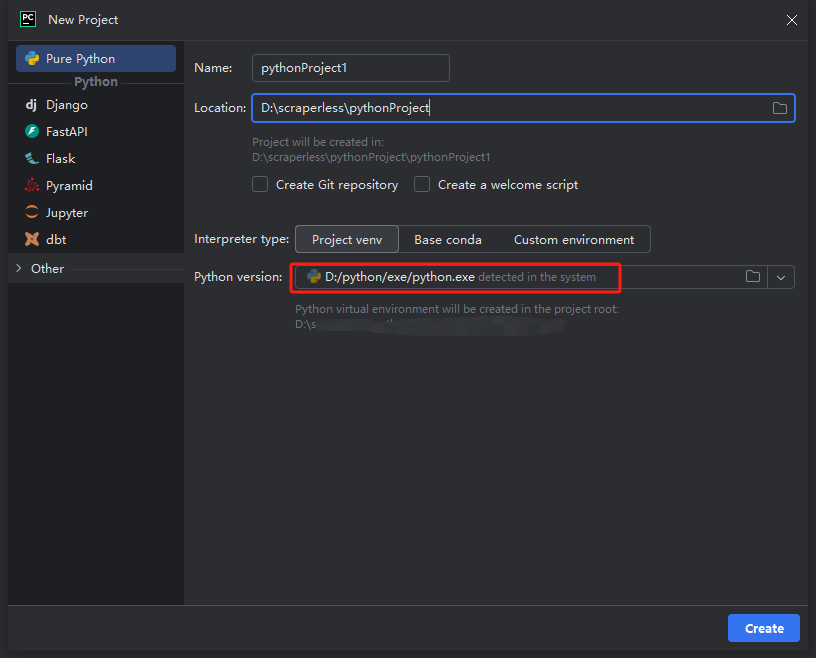

- Then, in the window that pops up, select Pure Python from the left menu and set up your project as follows:

Note: In the red box below, select the Python interpreter you installed in Step 1.

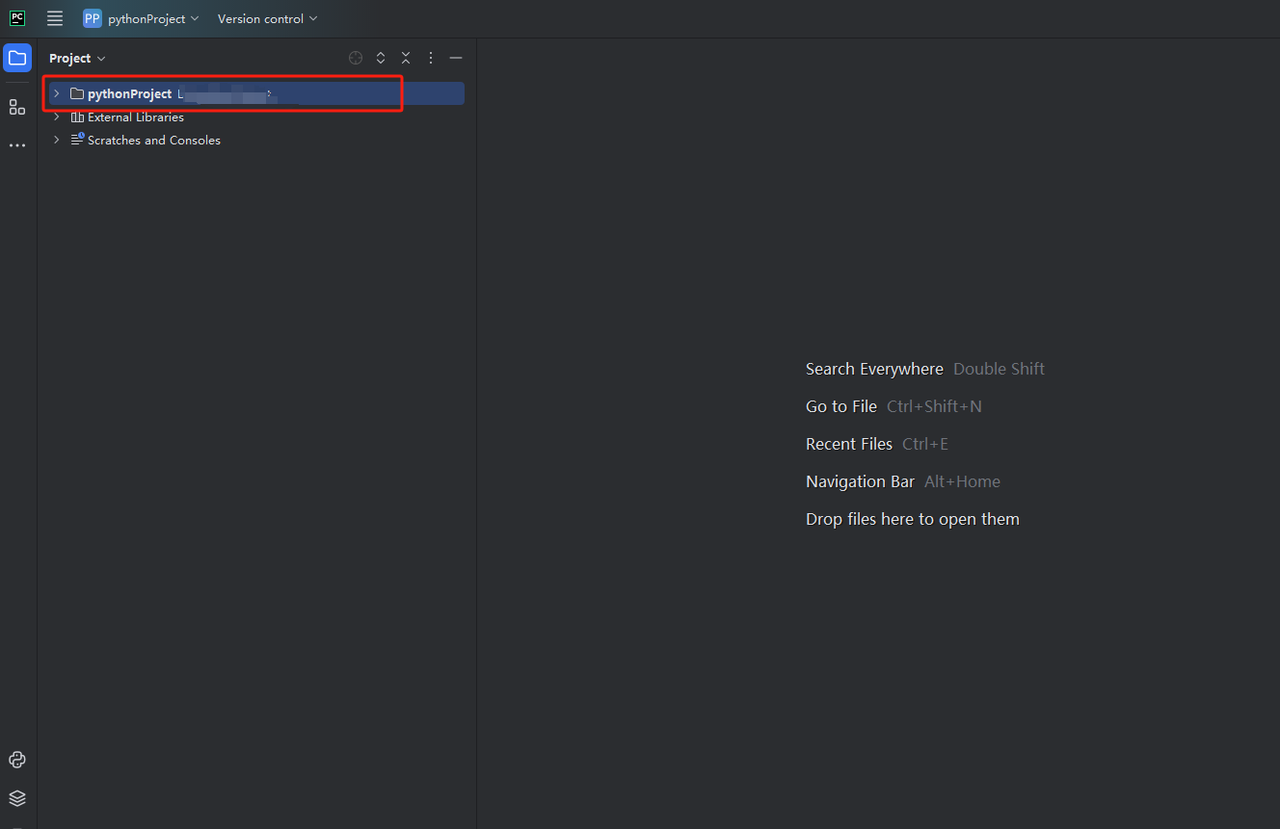

- Create a project called python-scraper, check the "Create main.py welcome script" option, and click the "Create" button. Once PyCharm finishes setting up the project, you should see the following:

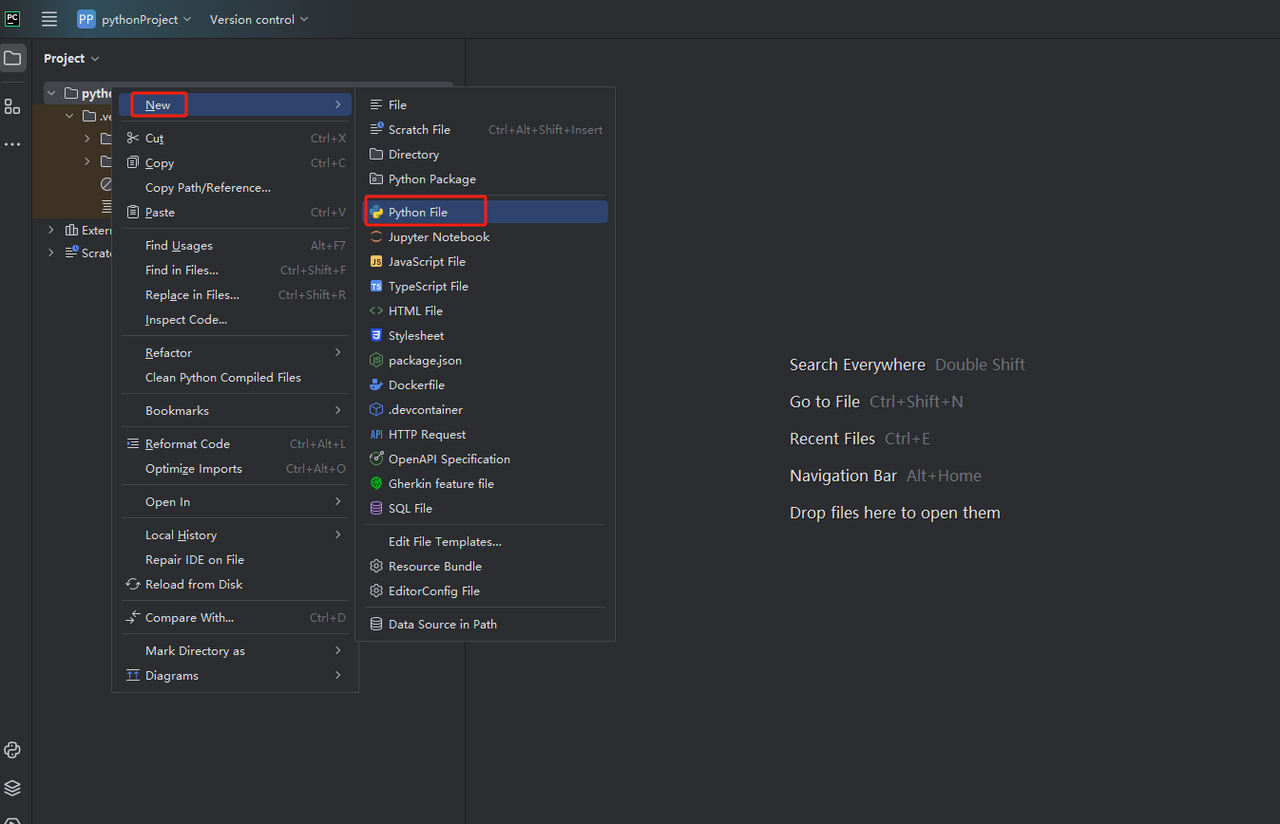

- Then, right-click the project folder to create a new Python file.

- To verify that everything is working correctly, open the Terminal tab at the bottom of the screen and type python main.py. The command should print: Hi, PyCharm.

Step 3: Sign up for Scrapeless and get your API key

Once your environment is ready, you can copy the Scrapeless code into PyCharm and run it to get Google Shopping data in JSON format. First, however, you need a Scrapeless API key.

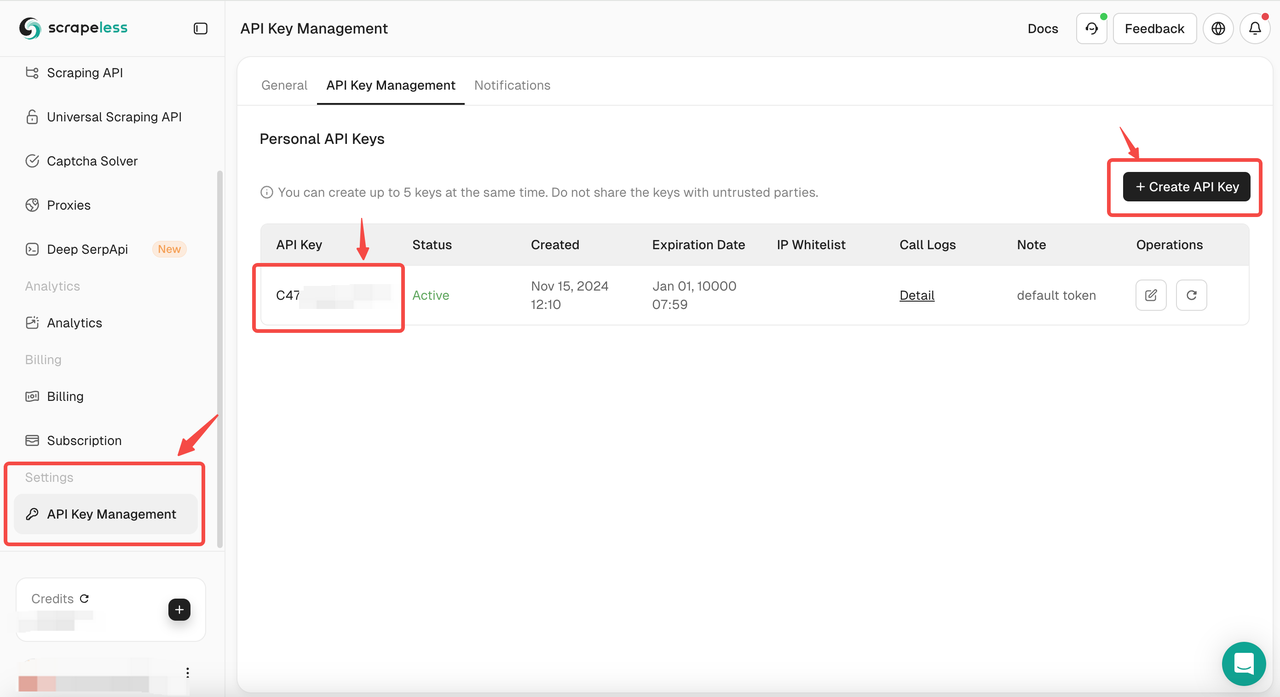

- If you don't have an account yet, please sign up for Scrapeless. After signing up, log in to your dashboard.

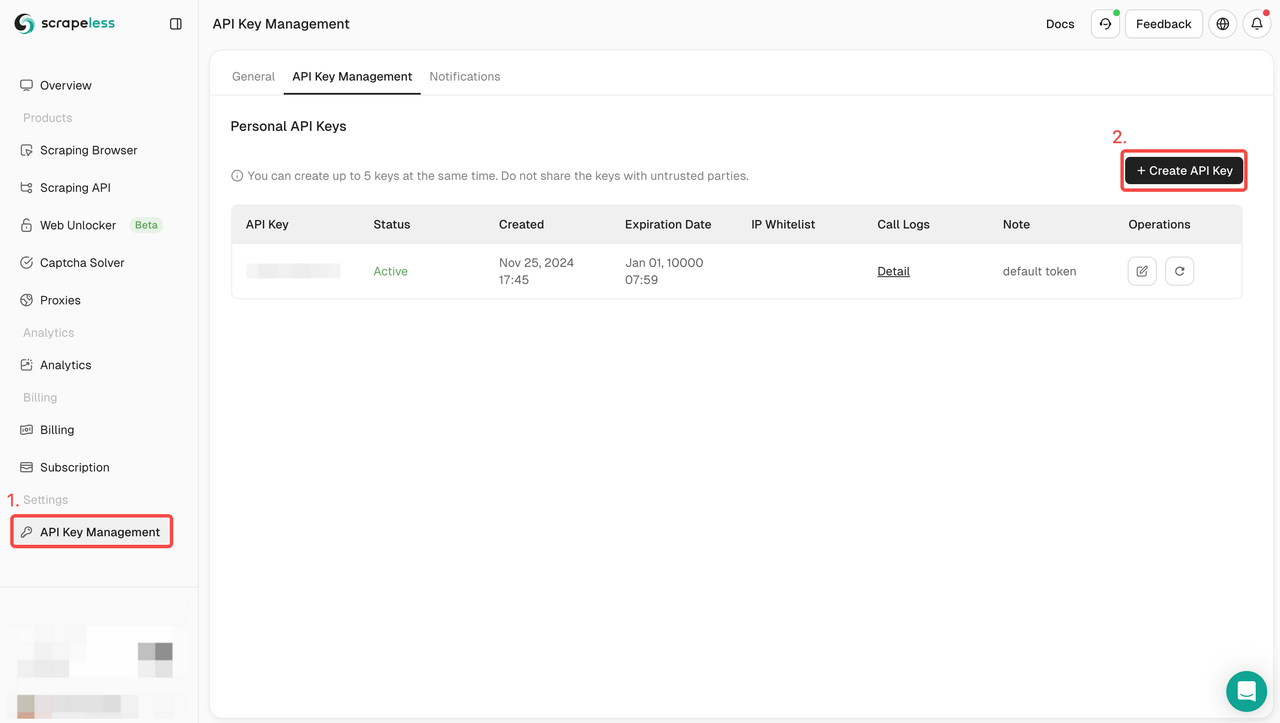

- In your Scrapeless dashboard, navigate to API Key Management and click Create API Key. Hover over the generated key and click it to copy it. This key authenticates your requests to the Scrapeless API.
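
Rather than pasting the key directly into your script, you may prefer to keep it in an environment variable. The sketch below assumes a variable named SCRAPELESS_API_TOKEN (a name we chose for illustration; Scrapeless does not require it) and builds the x-api-token header that the integration example in Step 5 sends. load_api_token and auth_headers are hypothetical helper names.

```python
import os

# Hypothetical environment variable name chosen for this example.
TOKEN_ENV_VAR = "SCRAPELESS_API_TOKEN"


def load_api_token(env_var=TOKEN_ENV_VAR):
    """Read the API key from the environment and fail loudly if it is absent."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable to your Scrapeless API key")
    return token


def auth_headers(token):
    """Build the header the Scrapeless API uses for authentication."""
    return {"x-api-token": token}


if __name__ == "__main__":
    token = load_api_token()
    # Avoid printing the secret itself; just confirm it was found
    print("Loaded an API key of length", len(token))
    print("Header names:", list(auth_headers(token)))
```

Keeping the key out of the source file also means you can share or commit the script without exposing your credentials.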

Step 4: Understand the Scrapeless Google Shopping API parameters

| Parameter | Required | Description |
|---|---|---|
| engine | TRUE | Set to google_shopping to use the Google Shopping API engine. |
| q | TRUE | Defines the query you want to search. You can use anything you would use in a regular Google Shopping search. |
| location | FALSE | Defines where you want the search to originate. If several locations match the requested location, the most popular one is used. The location and uule parameters can't be used together. Specifying the location at the city level is recommended. |
| uule | FALSE | The Google-encoded location to use for the search. The uule and location parameters can't be used together. |
| gl | FALSE | Defines the country to use for the Google search, as a two-letter country code (e.g., us for the United States, uk for the United Kingdom, or fr for France). Defaults to us. |
| hl | FALSE | Defines the language to use for the Google Shopping search, as a two-letter language code (e.g., en for English, es for Spanish, or fr for French). Defaults to en. |
| tbs | FALSE | ("to be searched") Defines advanced search parameters that aren't possible in the regular query field. |
| direct_link | FALSE | Determines whether the search results include direct links to each product. Defaults to false; set it to true if you need direct links. Applies only to the new layout (US and a few other countries). |
| start | FALSE | Defines the result offset, skipping the given number of results; used for pagination (e.g., 0 (default) is the first page of results, 60 is the 2nd page, 120 is the 3rd page, etc.). Not recommended for the new layout. |
| num | FALSE | Defines the maximum number of results to return (e.g., 60 (default) returns 60 results, 40 returns 40 results, and 100 (maximum) returns 100 results). Any number greater than 100 defaults to 100; any number less than 1 defaults to 60. |
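
To make the rules in this table concrete, here is a hypothetical helper (build_shopping_input is our name, not part of any Scrapeless SDK) that assembles the input object, rejects location and uule together, and clamps num the way the table says the server does. The clamping is only a client-side mirror of the documented behavior; the API itself remains the authority.

```python
# Limits taken from the parameter table above.
MAX_NUM = 100
DEFAULT_NUM = 60


def build_shopping_input(q, location=None, uule=None, gl=None, hl=None,
                         tbs=None, direct_link=None, start=None, num=None):
    """Assemble the "input" object for the google_shopping engine."""
    if not q:
        raise ValueError("q is required")
    if location and uule:
        raise ValueError("location and uule can't be used together")
    params = {"engine": "google_shopping", "q": q}
    optional = {"location": location, "uule": uule, "gl": gl, "hl": hl,
                "tbs": tbs, "direct_link": direct_link, "start": start}
    # Only send the parameters the caller actually set
    params.update({key: value for key, value in optional.items() if value is not None})
    if num is not None:
        # Mirror the documented behavior: above 100 becomes 100, below 1 becomes 60
        if num > MAX_NUM:
            num = MAX_NUM
        elif num < 1:
            num = DEFAULT_NUM
        params["num"] = num
    return params


if __name__ == "__main__":
    print(build_shopping_input("padel racket", gl="uk", hl="en", num=150))
```

For example, the call in the main block produces an input with "num": 100, because 150 exceeds the documented maximum.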

Step 5: How to integrate the Scrapeless API into your scraping tool

Once you have your API key, you can integrate the Scrapeless API into your own scraping tools. The following example uses Python and the requests library to call the Scrapeless API and retrieve data.

Code integration example:

```python
import json

import requests


class Payload:
    """Request body expected by the Scrapeless scraper endpoint."""

    def __init__(self, actor, input_data):
        self.actor = actor        # which scraper to run
        self.input = input_data   # engine-specific parameters


def send_request():
    host = "api.scrapeless.com"
    url = f"https://{host}/api/v1/scraper/request"
    token = "your_token"  # replace with your Scrapeless API key

    headers = {
        "x-api-token": token
    }

    # Parameters from the table above; "engine" and "q" are required
    input_data = {
        "engine": "google_shopping",
        "q": "Macbook M3"
    }

    payload = Payload("scraper.google.shopping", input_data)
    json_payload = json.dumps(payload.__dict__)

    # A timeout keeps the script from hanging forever on a stalled connection
    response = requests.post(url, headers=headers, data=json_payload, timeout=60)
    if response.status_code != 200:
        print("Error:", response.status_code, response.text)
        return

    # The response body is JSON; print it as raw text
    print("body", response.text)


if __name__ == "__main__":
    send_request()
```

Replace "your_token" with your Scrapeless API key. You can also customize the request using the API parameters described above.
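
The same request can be extended to fetch several pages with the start and num parameters. This is a sketch under stated assumptions: it uses the standard-library urllib instead of requests so it has no third-party dependency, it assumes the offset for page n is n × num (consistent with the table's example of 0, 60, 120 for the default num of 60), and it assumes the response body is JSON. The table notes that start is not recommended for the new layout, so check the results you get. iter_pages and post_json are illustrative names, not Scrapeless functions.

```python
import json
import urllib.request

API_URL = "https://api.scrapeless.com/api/v1/scraper/request"


def post_json(url, body, token, timeout=60):
    """POST a JSON body with the x-api-token header and decode the JSON reply.

    urllib raises urllib.error.HTTPError for non-2xx status codes.
    """
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"x-api-token": token, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def iter_pages(query, token, pages=3, num=60, send=post_json):
    """Yield one decoded response per page, advancing start by num each time."""
    for page_index in range(pages):
        body = {
            "actor": "scraper.google.shopping",
            "input": {
                "engine": "google_shopping",
                "q": query,
                "num": num,
                "start": page_index * num,  # assumed offsets: 0, num, 2 * num, ...
            },
        }
        yield send(API_URL, body, token)


if __name__ == "__main__":
    for i, page in enumerate(iter_pages("Macbook M3", "your_token", pages=2)):
        print("page", i + 1, json.dumps(page)[:200])
```

The send parameter makes the transport swappable, so you can plug in the requests-based call from the example above or a stub for testing.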

How to use Deep SerpApi to scrape Google Shopping data

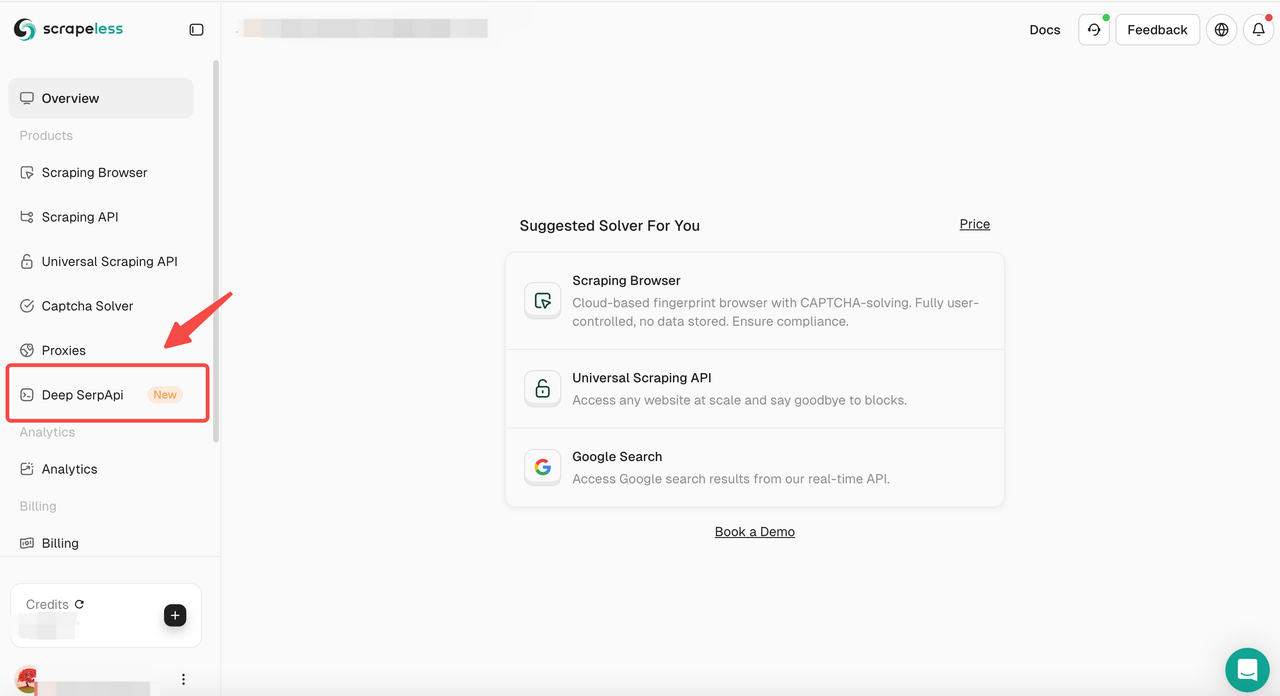

If you don't want to build the crawling parameters and code yourself, you can consider using Scrapeless Deep SerpApi.

Deep SerpApi offers a cost-effective way for developers to quickly obtain Google search engine results page (SERP) data. Pricing starts as low as $0.1 per 1,000 queries and covers more than 20 Google search result types.
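
At a per-1,000 rate, the cost of a job is simple arithmetic: cost = queries ÷ 1,000 × price per 1,000. The sketch below computes it with Python's decimal module to avoid floating-point rounding noise. The $0.1 default is the "as low as" rate quoted above, so substitute the rate from your actual plan; the sketch also ignores any free quota. estimate_cost is a hypothetical helper name.

```python
from decimal import ROUND_HALF_UP, Decimal


def estimate_cost(queries, price_per_thousand="0.1"):
    """Estimate the cost in USD of a number of queries at a per-1,000 rate."""
    if queries < 0:
        raise ValueError("queries must be non-negative")
    # Decimal keeps money arithmetic exact before rounding to cents
    cost = Decimal(queries) / Decimal(1000) * Decimal(price_per_thousand)
    return cost.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    print(estimate_cost(250_000))  # 25.00
```

Passing the rate as a string (for example "0.1") keeps Decimal from inheriting the binary rounding error of a float literal.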

Advantages of Deep SerpApi

- Lowest price: Deep SerpApi is priced as low as $0.1 per 1,000 queries, the lowest price on the market.

- Easy to use: No complex code is required; you get data through simple API calls.

- Real-time: Each request returns the latest search results, so your data stays current.

- Global support: Global IP addresses and browser clusters ensure that search results match what real users see.

- Rich data types: Supports more than 20 search types, such as Google Search, Google Maps, Google Shopping, etc.

- High availability: Provides up to 99.995% service availability under its SLA.

1. Sign Up and Access the API Key

- After signing up for free on Scrapeless, you get 20,000 free search queries.

- Navigate to API Key Management, then click Create to generate a unique API key. Once it is created, click the key to copy it.

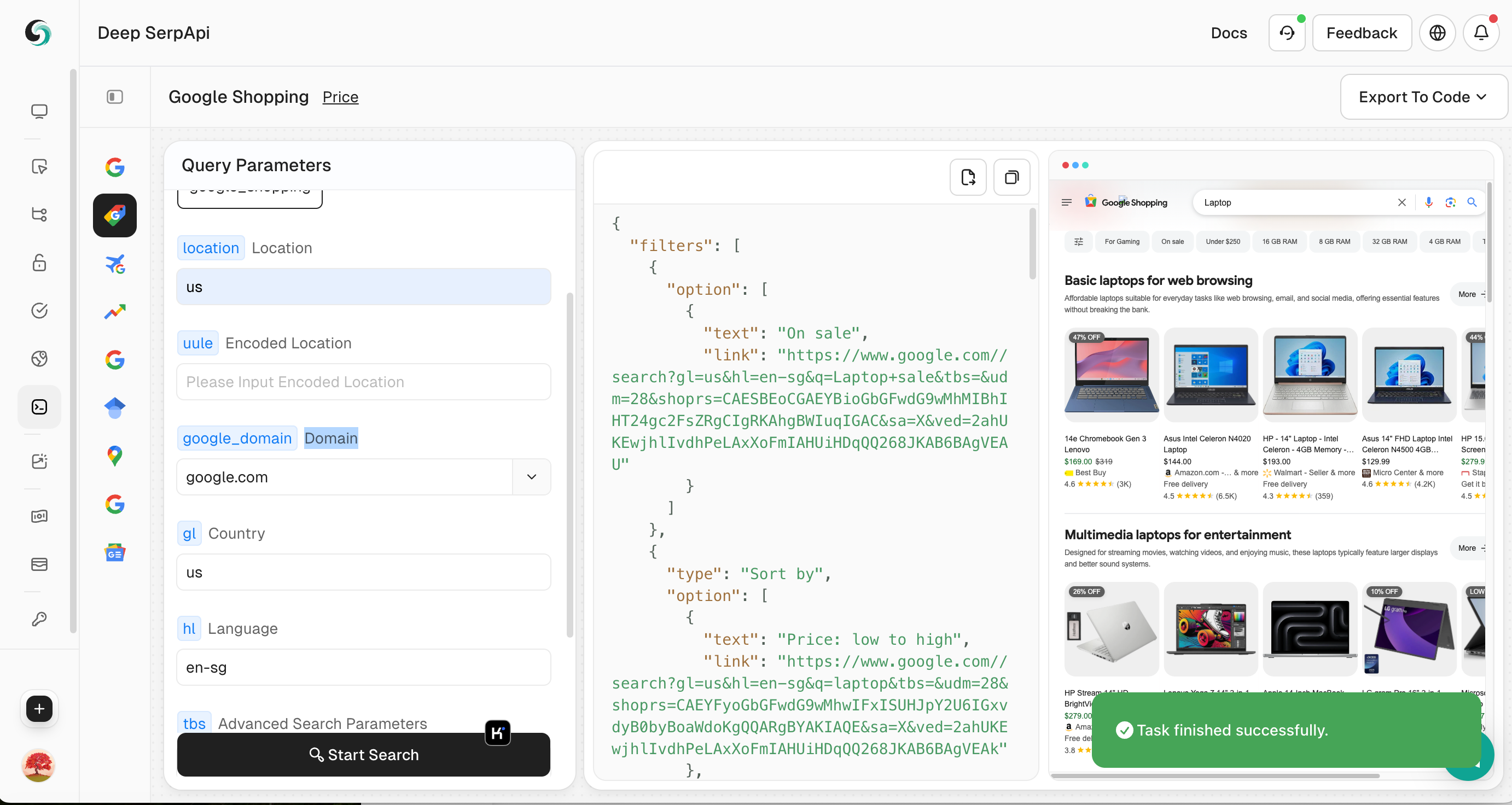

2. Access the Deep SerpApi Playground

- Then navigate to the "Deep SerpApi" section.

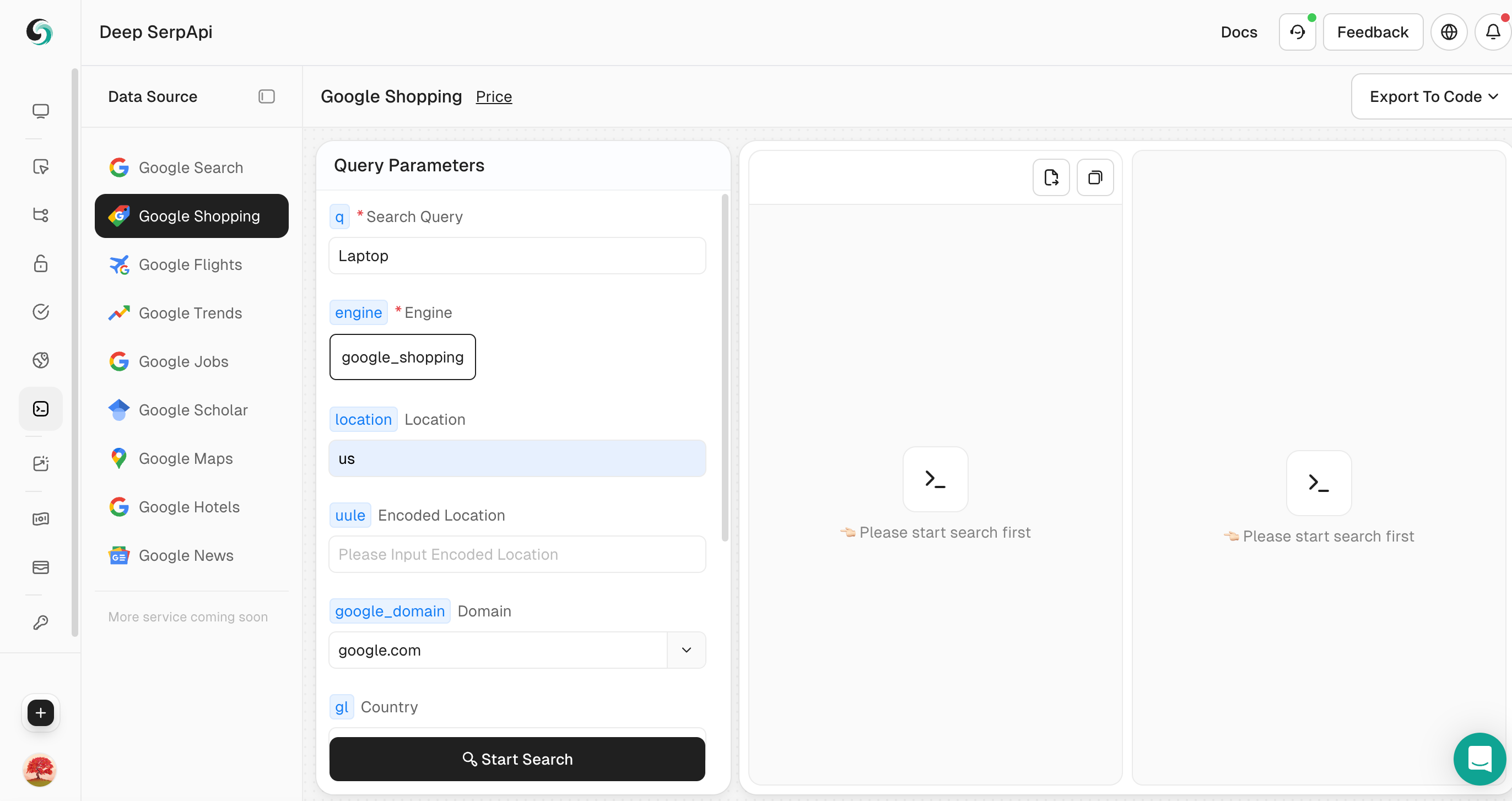

3. Set search parameters

- In the Playground, enter your search keyword, such as "Laptop".

- Set other parameters, such as country, language, location, and domain.

You can also consult the official Scrapeless API documentation for the full list of Google Shopping parameters.

4. Perform a search

- Click the "Start Search" button, and the Playground will send a request to Deep SerpApi and return structured JSON data.

5. View and export data

- Browse the returned JSON data to view detailed information.

- If necessary, you can click "Export to Code" in the upper right corner to export the data to CSV or JSON format for further analysis.
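
If you export JSON but want a CSV for a spreadsheet, a few lines of standard-library Python can flatten a list of result objects. This sketch assumes each result is a flat JSON object; the field names in the example (title, price, source) are placeholders, so use the keys that actually appear in your exported data. results_to_csv is a hypothetical helper name.

```python
import csv
import io
import json


def results_to_csv(results, fieldnames=None):
    """Convert a list of flat dicts into CSV text."""
    if fieldnames is None:
        # Collect every key in first-seen order so no column is dropped
        fieldnames = []
        for row in results:
            for key in row:
                if key not in fieldnames:
                    fieldnames.append(key)
    buffer = io.StringIO()
    # extrasaction="ignore" skips keys not listed in fieldnames
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(results)  # missing keys are written as empty cells
    return buffer.getvalue()


if __name__ == "__main__":
    exported = json.loads('[{"title": "Padel racket A", "price": "$89.99", "source": "Shop 1"},'
                          ' {"title": "Padel racket B", "price": "$120.00"}]')
    with open("results.csv", "w", newline="", encoding="utf-8") as f:
        f.write(results_to_csv(exported))
```

Opening the file with newline="" follows the csv module's guidance and prevents blank lines between rows on Windows.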

Explore Other E-commerce APIs for Data Scraping

In addition to crawling product data from Google Shopping, you can also collect and analyze market trends through other e-commerce platforms to understand product performance, price changes, and sales trends on different platforms.

- Amazon API: Through our Amazon API, you can efficiently crawl Amazon product data to understand prices, reviews, and inventory.

- Shopee API: Get product data from the Shopee platform and gain in-depth understanding of product demand in the Southeast Asian market.

- Shein API: Through Shein API, analyze data from the global fast fashion industry to understand consumer preferences and trends.

If your business needs to crawl data from these e-commerce platforms, or you have similar needs, our API interface provides powerful data crawling capabilities, allowing you to easily obtain product data from multiple e-commerce platforms. If you need a customized solution, please contact our sales team directly, and we will provide you with the best service based on your specific needs.

Join our Scrapeless Discord community today! 🎉 Get exclusive access to a free trial of Scrapeless. Don’t miss out - click the link, it’s a limited time offer!

Conclusion

In summary, scraping Google Shopping results with Scrapeless provides an effective way to collect valuable data for analysis, product research, and comparison. By following the step-by-step guide outlined in this article, you can easily set up the necessary tools, integrate the Scrapeless API into your workflow, and start extracting relevant information in a compliant and efficient manner. Whether you are a developer or a business owner looking to leverage Google Shopping data, the process is simple and scalable. Remember to always comply with legal and ethical guidelines regarding web scraping.

FAQs

Q1: How do I adjust the number of results per page?

To adjust the number of results returned per request, use the num parameter. For example, setting "num": 20 returns up to 20 results per request (the maximum is 100).

Q2: How do I crawl other pages?

Use the start parameter, which sets the result offset. With the default of 60 results per page, "start": 60 returns the second page of results.

Q3: Can I crawl data from multiple locations?

Yes. Use the gl parameter to set the country (for example, "gl": "uk" for the United Kingdom), or the location parameter to set a specific origin, ideally at the city level. Send one request per location you want to cover.
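
For country-level comparisons, the parameter table's gl setting takes a two-letter country code, so one approach is to build one request body per country. This sketch reuses the actor and engine names from the integration example in Step 5; the country list and the bodies_for_countries name are illustrative, and each body still needs to be sent with your API key.

```python
def bodies_for_countries(query, countries, hl="en"):
    """Build one Scrapeless request body per two-letter gl country code."""
    bodies = []
    for gl in countries:
        bodies.append({
            "actor": "scraper.google.shopping",
            "input": {"engine": "google_shopping", "q": query, "gl": gl, "hl": hl},
        })
    return bodies


if __name__ == "__main__":
    for body in bodies_for_countries("padel racket", ["us", "uk", "fr"]):
        print(body["input"]["gl"], body)
```

Keeping hl separate from gl lets you, for instance, view French results in English while comparing prices across markets.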

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.