How to Scrape Google Lens Results with Scrapeless

Advanced Data Extraction Specialist

What is Google Lens?

Google Lens is an application based on artificial intelligence and image recognition technology that can identify objects, text, landmarks and other content through the camera or pictures, and provide relevant information.

Is it legal to scrape data from Google Lens?

Scraping Google Lens data is not illegal, but there are various legal and ethical guidelines that need to be followed. Users must understand Google's Terms of Service, data privacy laws, and intellectual property rights to ensure their activities are compliant. By following best practices and staying informed about legal developments, you can minimize the risk of legal issues associated with web scraping.

Challenges in Scraping Google Lens

- Advanced anti-bot technology: Google monitors network traffic patterns. Large numbers of repetitive requests from crawlers can be quickly detected, leading to IP bans, which stop the crawling process.

Recommended reading: Anti-Bot: What Is It and How to Avoid It

- JavaScript-rendered content: Much of the data for Google Lens is generated dynamically by JavaScript and is inaccessible to traditional crawlers. Scraping it requires a headless browser such as Puppeteer or Selenium, which increases complexity and resource consumption.

- CAPTCHA protection: Google uses CAPTCHA to authenticate human users. Crawlers may encounter CAPTCHA challenges that are difficult to solve programmatically.

- Frequent website updates: Google regularly changes the structure and layout of Google Lens. The crawling code can quickly become outdated, and the XPath or CSS selectors used for data extraction may stop working. Constant monitoring and updates are required.
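One lightweight way to notice selector breakage early is to sanity-check the output of every run. The sketch below is illustrative only: it assumes each scraped record is a dictionary with `title` and `thumbnail` keys (the shape used by the scraper later in this guide), and the function name `find_problems` is hypothetical.

```python
def find_problems(records, required_keys=("title", "thumbnail")):
    """Return human-readable warnings about a batch of scraped records."""
    if not records:
        # An empty batch is the most common symptom of an outdated selector
        return ["no records extracted; the page layout or selectors may have changed"]
    problems = []
    for index, record in enumerate(records):
        for key in required_keys:
            value = record.get(key)
            # Flag values that are missing, not strings, or blank
            if not isinstance(value, str) or not value.strip():
                problems.append(f"record {index}: missing or empty '{key}'")
    return problems


sample = [
    {"title": "Danny DeVito - Wikipedia", "thumbnail": "https://example.com/a.jpg"},
    {"title": "", "thumbnail": "https://example.com/b.jpg"},
]
print(find_problems(sample))
print(find_problems([]))
```

Running a check like this after each crawl turns a silent failure into an explicit warning that the extraction code needs updating.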

Step-by-Step Guide to Scraping Google Lens with Python

Step 1. Configure the environment

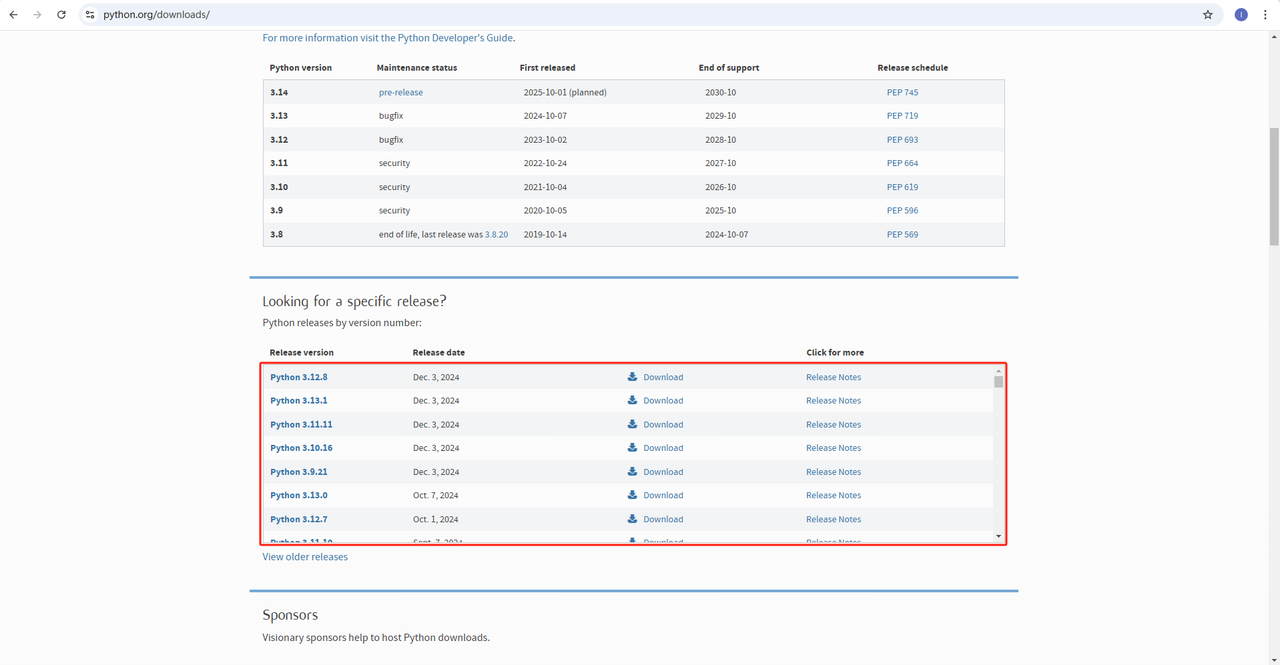

- Python: The Python interpreter is required to run the code in this guide. You can download it from the official website as shown below. However, the latest version is not recommended; choose a release one or two versions behind it instead.

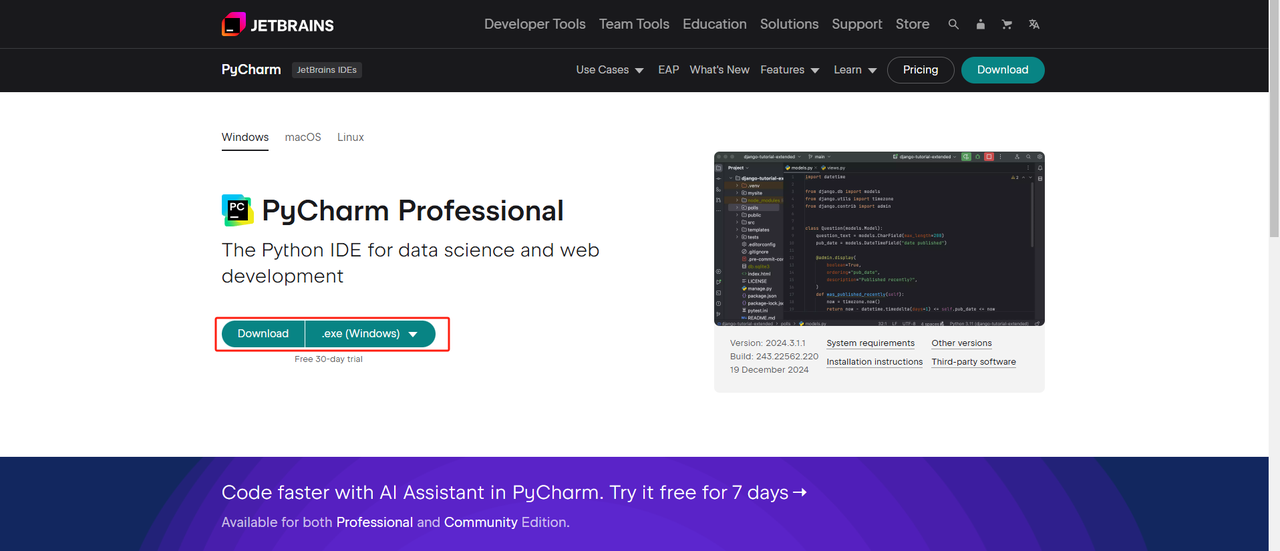

- Python IDE: Any IDE that supports Python will work, but we recommend PyCharm, a development tool designed specifically for Python. The free PyCharm Community Edition is sufficient.

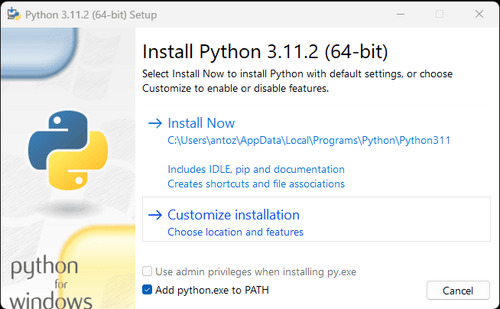

Note: If you are a Windows user, do not forget to check the "Add python.exe to PATH" option in the installation wizard. This allows you to run Python and its commands from the terminal. pip is included by default with Python 3.4 and later, so you do not need to install it manually.

Now you can check if Python is installed by opening the terminal or command prompt and entering the following command:

    python --version

Step 2. Install Dependencies

It is recommended to create a virtual environment to manage project dependencies and avoid conflicts with other Python projects. Navigate to the project directory in the terminal and execute the following command to create a virtual environment named google_lens_env:

    python -m venv google_lens_env

Activate the virtual environment based on your system:

Windows:

    google_lens_env\Scripts\activate

macOS/Linux:

    source google_lens_env/bin/activate

After activating the virtual environment, install the required Python libraries for web scraping. requests sends HTTP requests, BeautifulSoup4 parses the HTML, and Playwright drives a headless browser to render the page. Install them using the following commands:

    pip install requests
    pip install beautifulsoup4
    pip install playwright

Playwright also needs a browser binary. Download Chromium with:

    playwright install chromium

Step 3. Scrape Data

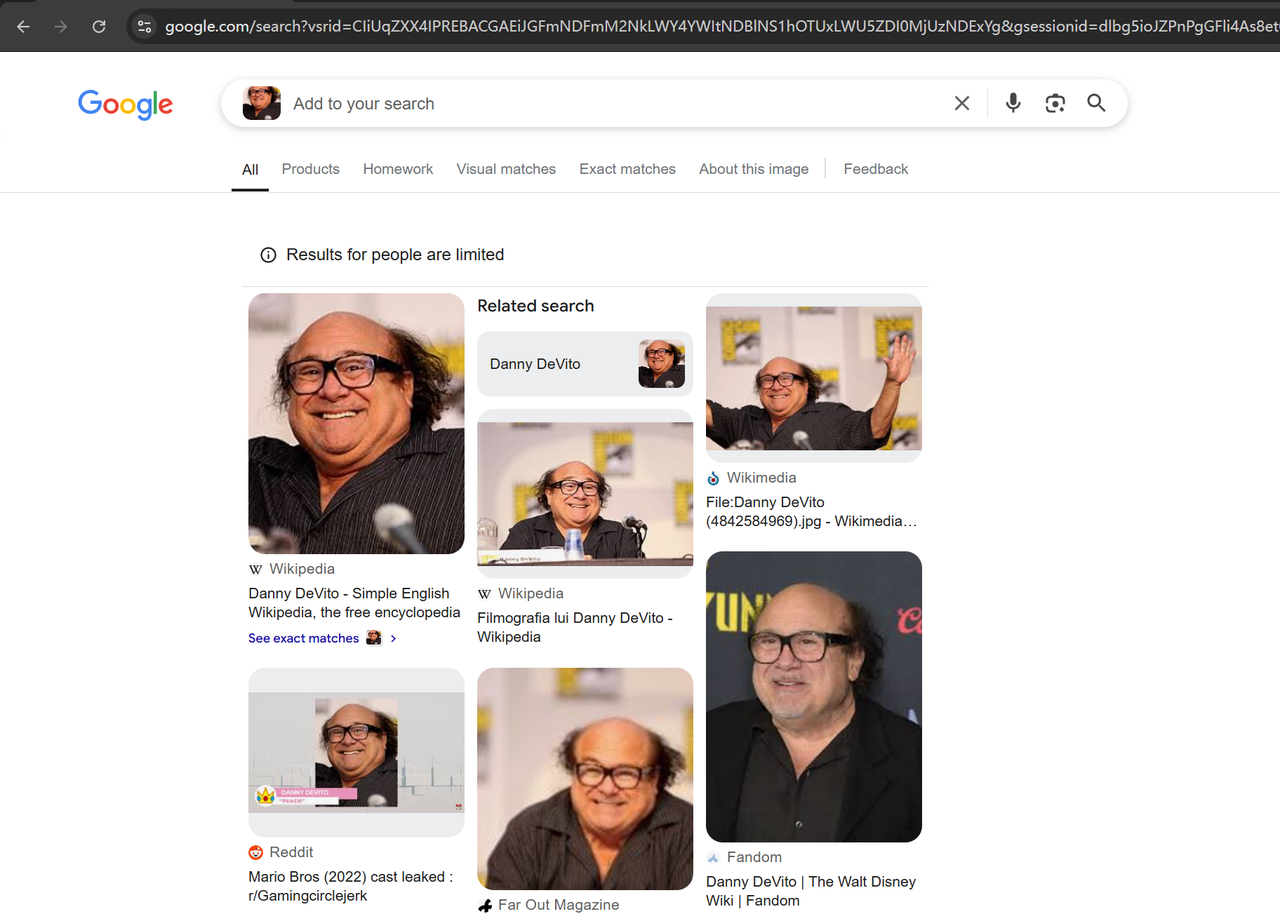

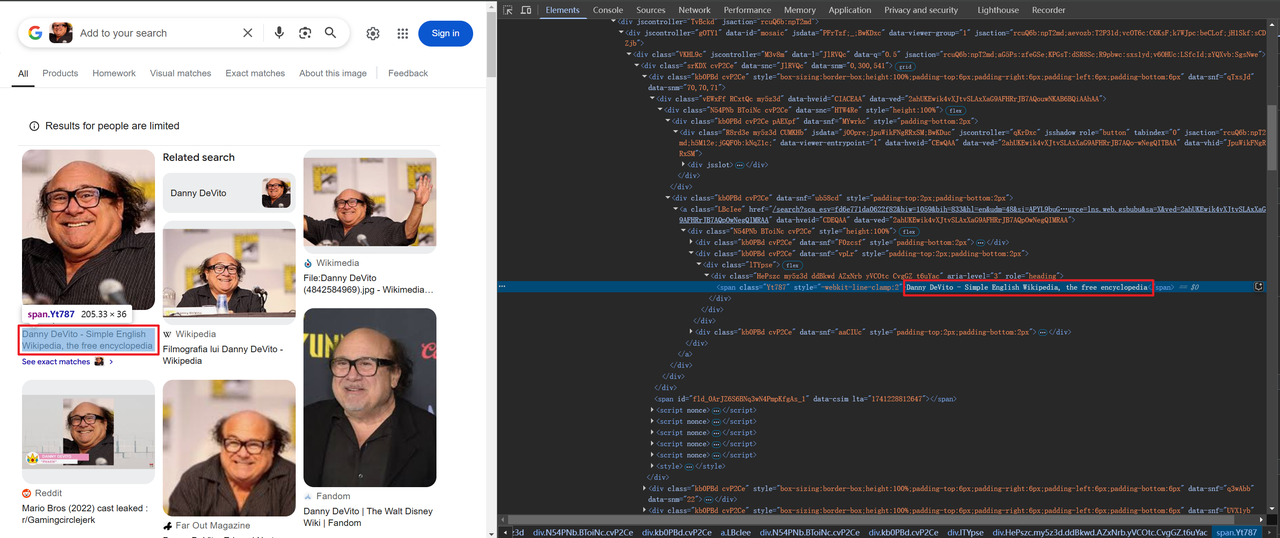

Open Google Lens (https://www.google.com/?olud) in your browser and search with the image URL "https://i.imgur.com/HBrB8p0.png". Below is the search result:

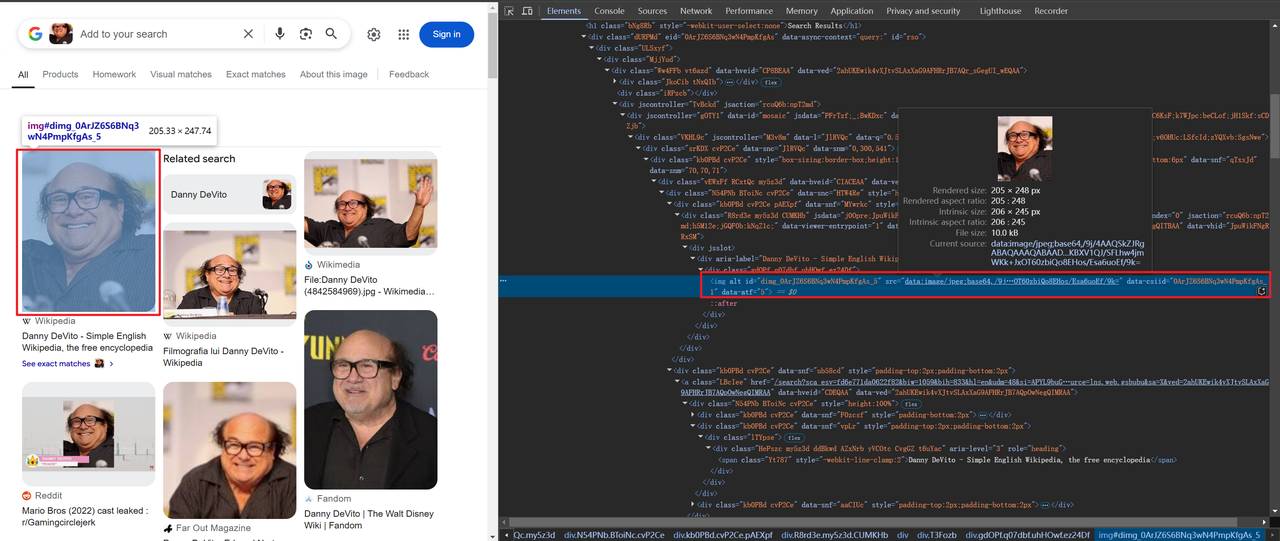

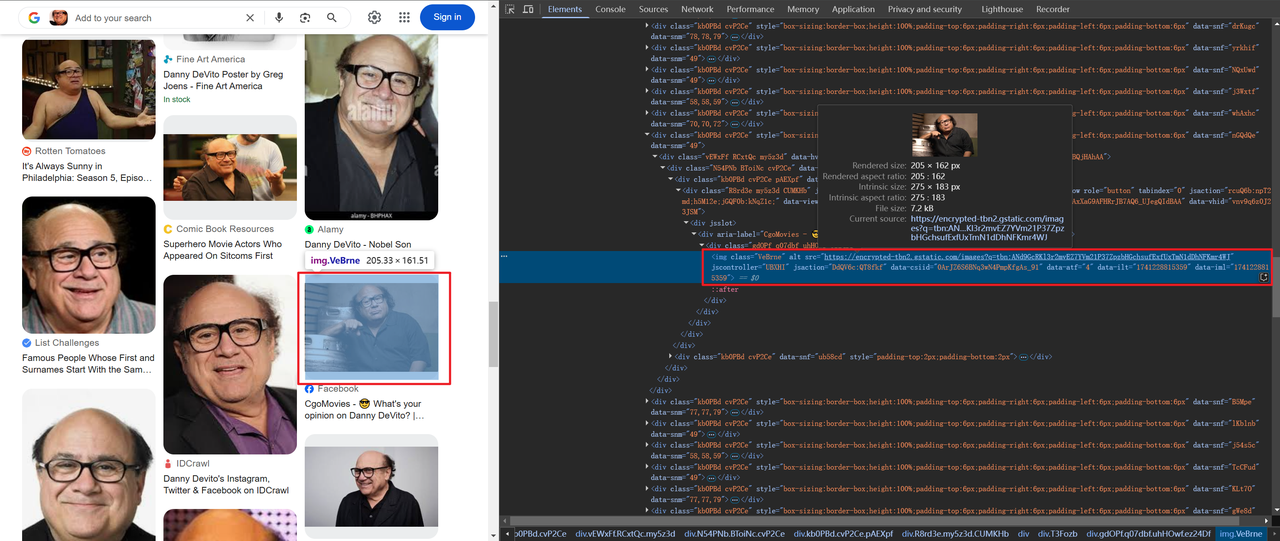
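The same search can be opened directly by URL, which is what the full script in this guide does. As a minimal sketch, the helper below builds that address by percent-encoding the image URL; the function name `build_lens_url` is illustrative, and the endpoint is the `uploadbyurl` address used in the script further down.

```python
from urllib.parse import urlencode

# Endpoint taken from the scraping script in this guide
LENS_UPLOAD_ENDPOINT = "https://lens.google.com/uploadbyurl"


def build_lens_url(image_url: str) -> str:
    """Return a Google Lens address that searches for the given image URL."""
    # urlencode percent-encodes ':' and '/' so the image URL is a safe query value
    query = urlencode({"url": image_url})
    return f"{LENS_UPLOAD_ENDPOINT}?{query}"


print(build_lens_url("https://i.imgur.com/HBrB8p0.png"))
# https://lens.google.com/uploadbyurl?url=https%3A%2F%2Fi.imgur.com%2FHBrB8p0.png
```

Building the address programmatically avoids hand-encoding mistakes when you want to search for many images.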

Scrape title and image info

Some thumbnails are embedded as base64-encoded data URIs, while others are linked via HTTP(S) URLs.
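Because a thumbnail's `src` can take either form, downstream code usually needs to tell the two apart. The following is a rough sketch of one way to do that with the standard library; the function name `describe_thumbnail` and the returned dictionary layout are assumptions made for illustration.

```python
import base64
from urllib.parse import unquote_to_bytes


def describe_thumbnail(src: str) -> dict:
    """Classify a thumbnail src as an inline data URI or a remote URL."""
    if src.startswith("data:"):
        # A data URI looks like "data:image/jpeg;base64,<payload>"
        header, _, payload = src.partition(",")
        mime_type = header[len("data:"):].split(";")[0]
        if header.endswith(";base64"):
            # Raises binascii.Error if the payload is truncated or malformed
            data = base64.b64decode(payload)
        else:
            data = unquote_to_bytes(payload)
        return {"kind": "inline", "mime_type": mime_type, "data": data}
    return {"kind": "url", "url": src}


inline = describe_thumbnail("data:image/jpeg;base64,aGVsbG8=")
remote = describe_thumbnail("https://encrypted-tbn1.gstatic.com/images?q=tbn:example")
print(inline["kind"], inline["mime_type"], len(inline["data"]))
print(remote["kind"])
```

Inline thumbnails can be written straight to disk from `data`, while remote ones need a separate download step.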

The code for obtaining title and image information is as follows:

    # Store lens information in a dictionary
    img_info = {
        'title': item.find("span").text,
        'thumbnail': item.find("img").attrs['src'],
    }

Since we need to scrape every result on the page, not just one, we need to loop over the results and collect the above data for each. The complete code is as follows:

    import json

    from bs4 import BeautifulSoup
    from playwright.sync_api import sync_playwright


    def scrape(url: str) -> str:
        """Render the page in headless Chromium and return its HTML."""
        with sync_playwright() as p:
            # Launch the browser and disable some features that may cause detection
            browser = p.chromium.launch(
                headless=True,
                args=[
                    "--disable-blink-features=AutomationControlled",
                    "--disable-dev-shm-usage",
                    "--disable-gpu",
                    "--disable-extensions",
                ],
            )
            context = browser.new_context(
                user_agent="Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/132.0.0.0 Safari/537.36",
                bypass_csp=True,
            )
            page = context.new_page()
            page.goto(url)
            page.wait_for_selector("body", state="attached")
            # Wait for 2 seconds to give the page time to finish rendering
            page.wait_for_timeout(2000)
            html_content = page.content()
            browser.close()
        return html_content


    def lens_info(items):
        """Build one dictionary with 'title' and 'thumbnail' per result element."""
        lens_data = []
        # Iterate over direct child tags only, skipping bare text nodes
        for item in items.find_all(True, recursive=False):
            title = item.find("span")
            image = item.find("img")
            # Skip entries that lack a title or a thumbnail
            if title is None or image is None or not image.get("src"):
                continue
            # Store lens information in a dictionary
            lens_data.append({
                'title': title.text,
                'thumbnail': image['src'],
            })
        return lens_data


    def main():
        url = "https://lens.google.com/uploadbyurl?url=https%3A%2F%2Fi.imgur.com%2FHBrB8p0.png"
        html_content = scrape(url)
        soup = BeautifulSoup(html_content, 'html.parser')
        # Obtain the main data of the page
        container = soup.find('div', {'jscontroller': 'M3v8m'})
        items = container.find("div") if container else None
        if items is None:
            raise RuntimeError("Result container not found; the page layout may have changed")
        # Loop over the results and assemble the records
        assembly = lens_info(items)
        # Save results to a JSON file
        with open('google_lens_data.json', 'w', encoding='utf-8') as json_file:
            json.dump(assembly, json_file, indent=4)


    if __name__ == "__main__":
        main()

Step 4. Output Results

A file named google_lens_data.json will be generated in your project directory. The output looks like this (partial example):

    [
        {
            "title": "Danny DeVito - Wikipedia",
            "thumbnail": "data:image/jpeg;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wCEAAkGBxAQEhAQEBAQEB..."
        },
        {
            "title": "Devito Danny Royalty-Free Images, Stock Photos & Pictures | Shutterstock",
            "thumbnail": "https://encrypted-tbn1.gstatic.com/images?q=tbn:ANd9GcSO6Pkv_UmXiianiCh52nD5s89d7KrlgQQox-f-K9FtXVILvHh_"
        },
        {
            "title": "DATA | Celebrity Stats | Page 62",
            "thumbnail": "https://encrypted-tbn3.gstatic.com/images?q=tbn:ANd9GcQ9juRVpW6sjE3OANTKIJzGEkiwUpjCI20Z1ydvJBCEDf3-NcQE"
        },
        {
            "title": "Danny DeVito, Grand opening of Buca di Beppo italian restaurant on Universal City Walk Universal City, California - 28.01.09 Stock Photo - Alamy",
            "thumbnail": "https://encrypted-tbn0.gstatic.com/images?q=tbn:ANd9GcQq_07f-Unr7Y5BXjSJ224RlAidV9pzccqjucD4VF7VkJEJJqBk"
        }
    ]

More efficient tools: How to Scrape Google Lens Results with Scrapeless

Scrapeless provides a powerful tool that helps developers easily scrape Google Lens search results without writing complex code. Here are the detailed steps to integrate the Scrapeless API into your Python crawler tool:

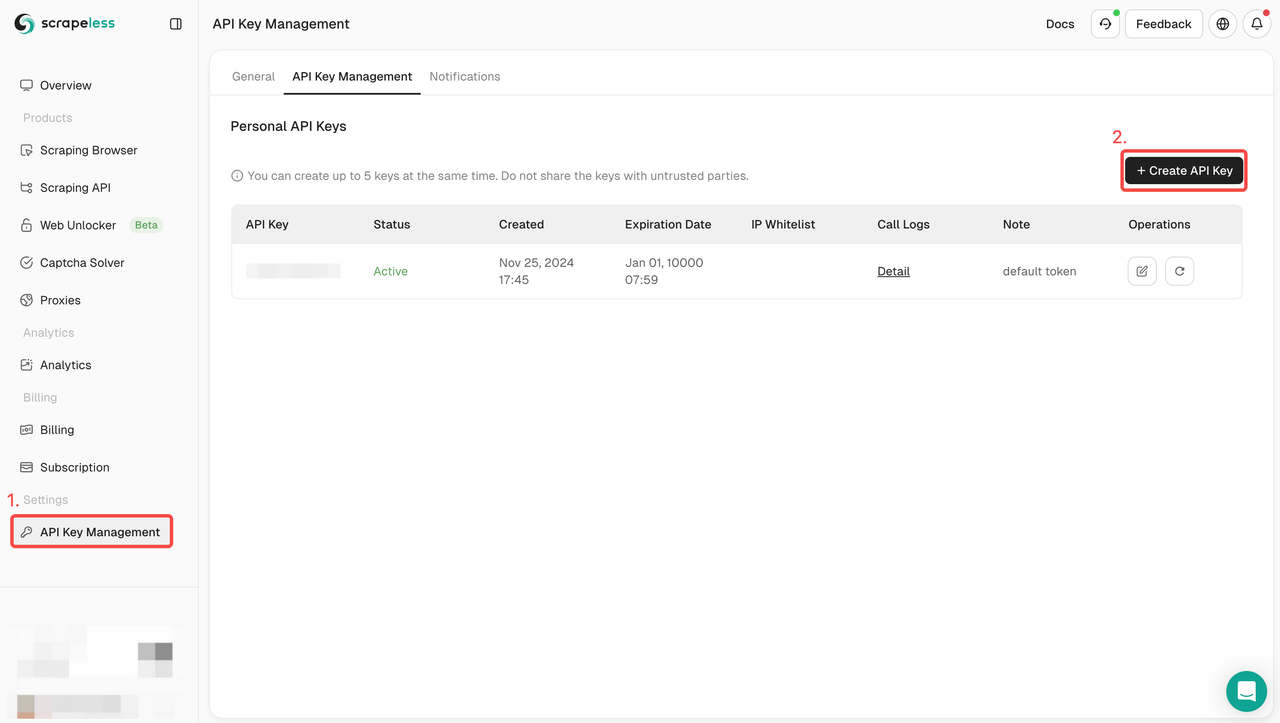

Step 1: Sign up for Scrapeless and get an API key

- If you don't have a Scrapeless account yet, visit the Scrapeless website and sign up.

- Once you've signed up, log in to your dashboard.

- In the dashboard, navigate to API Key Management and click Create API Key. Copy the generated API key, which will be your authentication credential when calling the Scrapeless API.

Step 2: Write a Python script to integrate the Scrapeless API

The following is a sample code for scraping Google Lens results using the Scrapeless API:

    import json

    import requests


    class Payload:
        """Request body: the actor to run and its input parameters."""

        def __init__(self, actor, input_data):
            self.actor = actor
            self.input = input_data


    def send_request():
        host = "api.scrapeless.com"
        url = f"https://{host}/api/v1/scraper/request"
        token = "your_token"  # Replace with your Scrapeless API key

        headers = {
            "x-api-token": token
        }

        # Search parameters passed to the Google Lens actor
        input_data = {
            "engine": "google_lens",
            "hl": "en",
            "country": "jp",
            "url": "https://s3.zoommer.ge/zoommer-images/thumbs/0170510_apple-macbook-pro-13-inch-2022-mneh3lla-m2-chip-8gb256gb-ssd-space-grey-apple-m25nm-apple-8-core-gpu_550.jpeg",
        }

        payload = Payload("scraper.google.lens", input_data)
        # Serialize the payload attributes (actor, input) to JSON
        json_payload = json.dumps(payload.__dict__)

        response = requests.post(url, headers=headers, data=json_payload)

        if response.status_code != 200:
            print("Error:", response.status_code, response.text)
            return

        print("body", response.text)


    if __name__ == "__main__":
        send_request()

Notes

API key security: Never expose your API key in a public code repository.
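One common way to keep the key out of source control is to read it from an environment variable at runtime. The sketch below assumes a variable named `SCRAPELESS_API_TOKEN`; that name and the helper `load_api_token` are hypothetical choices for this example, not something Scrapeless prescribes.

```python
import os


def load_api_token(env=os.environ, name="SCRAPELESS_API_TOKEN"):
    """Read the API token from the environment instead of hard-coding it."""
    token = env.get(name, "").strip()
    if not token:
        raise RuntimeError(f"Set the {name} environment variable before running the script")
    return token


# Demonstration with an explicit mapping; in real use, call load_api_token() with no arguments
headers = {"x-api-token": load_api_token({"SCRAPELESS_API_TOKEN": "demo-token"})}
print(headers)
```

With this approach the script can be committed safely, and each environment supplies its own key.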

Query optimization: Adjust the query parameters according to your needs to get more precise results. For more information about the API parameters, see the official Scrapeless API documentation.
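To make the query parameters easy to vary, the request body can be produced by a small helper. This is a sketch only: it reuses the `actor` and `input` fields and the `engine`, `hl`, `country`, and `url` parameters from the sample script, and the function name `build_lens_payload` is illustrative. Any other parameters should be checked against the official documentation.

```python
import json


def build_lens_payload(image_url, hl="en", country="jp"):
    """Return the JSON request body for a Google Lens query."""
    body = {
        "actor": "scraper.google.lens",
        "input": {
            "engine": "google_lens",
            "hl": hl,            # interface language
            "country": country,  # country used for localizing results
            "url": image_url,    # image to search for
        },
    }
    return json.dumps(body)


print(build_lens_payload("https://i.imgur.com/HBrB8p0.png", hl="en", country="us"))
```

The returned string can be passed as the `data` argument of `requests.post` in place of the hand-built payload.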

Why Choose Scrapeless for Scraping Google Lens

Scrapeless is a powerful AI-driven web scraping tool designed for efficient and stable web scraping.

1. Real-time data and high-quality results

Scrapeless returns real-time Google Lens search results within 1-2 seconds, ensuring that the data users get is always up to date.

2. Affordable price

Scrapeless's pricing is very competitive, starting as low as $0.1 per 1,000 queries.

3. Powerful function support

Scrapeless supports multiple search types, including more than 20 Google search result scenarios. It can return structured data in JSON format, which is convenient for users to quickly parse and use.

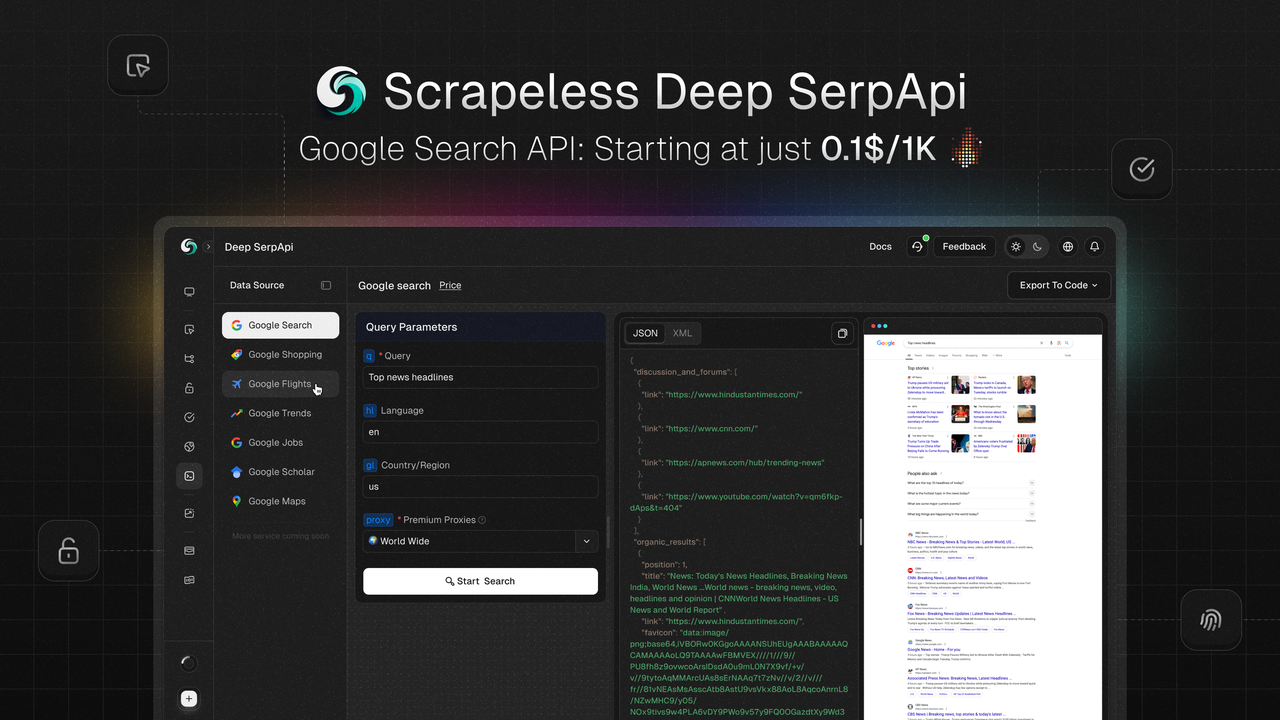

Scrapeless Deep SerpApi: A powerful real-time search data solution

Scrapeless Deep SerpApi is a real-time search data platform designed for AI applications and retrieval-augmented generation (RAG) models. It provides real-time, accurate and structured Google search results data, supporting more than 20 Google SERP types, including Google Search, Google Trends, Google Shopping, Google Flights, Google Hotels, Google Maps, etc.

Core Features

- Real-time data updates: results are based on data updated within the past 24 hours, keeping information timely and accurate.

- Multi-language and geo-location support: search results can be customized by the user's location, device type, and language.

- Fast response: The average response time is only 1-2 seconds, suitable for high-frequency and large-scale data retrieval.

- Seamless integration: compatible with mainstream programming languages such as Python, Node.js, Golang, etc., easy to integrate into existing projects.

- Cost-effective: With a price as low as $0.1 per 1,000 queries, it is the most cost-effective SERP solution on the market.

Special Offers

- Free Trial: A free trial is provided, and users can experience all features.

- Developer Support Program: The first 100 users can get a free API call quota worth $50 (500,000 queries), suitable for testing and for scaling up projects.
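The figures above follow from the quoted rate of $0.1 per 1,000 queries. As a quick worked check, the sketch below converts between budget and query count; it assumes the lowest advertised rate applies to every query, so treat the result as a best-case estimate. The function names are illustrative.

```python
from fractions import Fraction

# Lowest advertised rate: $0.1 per 1,000 queries (exact arithmetic avoids float rounding)
PRICE_PER_1000_QUERIES = Fraction(1, 10)


def queries_for_budget(budget_usd):
    """Number of queries a budget covers at the lowest advertised rate."""
    return int(Fraction(budget_usd) * 1000 / PRICE_PER_1000_QUERIES)


def cost_for_queries(query_count):
    """Cost in USD for a number of queries at the lowest advertised rate."""
    return float(Fraction(query_count) * PRICE_PER_1000_QUERIES / 1000)


print(queries_for_budget(50))     # 500000
print(cost_for_queries(500_000))  # 50.0
```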

If you have any questions or customization requirements, you can contact Liam by clicking the Discord link.

Conclusion

In this article, we detailed how to use Scrapeless to crawl Google Lens search results. With the powerful API provided by Scrapeless, developers and researchers can easily obtain real-time, high-quality visual data without writing complex code or worrying about anti-crawling mechanisms. Scrapeless's efficiency and flexibility make it an ideal tool for processing Google Lens data.

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.