How to Scrape Public Google Maps data and reviews using Python

Advanced Data Extraction Specialist

Google Maps lists millions of businesses, and accessing this valuable data can provide insights into consumer behavior, market trends, and competitive analysis. By scraping Google Maps data and reviews, developers, marketers, and data analysts can transform the way they work, enabling them to efficiently extract location-based information.

In this guide, we will walk you through the process of using Python to scrape Google Maps, covering everything from setting up the environment to extracting data and aggregating reviews.

Understanding Google Maps Data

Google Maps provides vast amounts of data that hold immense value for various use cases, such as business analysis, market research, and competitive intelligence. To effectively scrape Google Maps data, it is essential to understand the available data, its structure, and the best methods for extraction. Let’s take a closer look at the different types of data Google Maps offers and how to extract them efficiently.

Google Maps data types

When you crawl Google Maps, the most common data type you will encounter is:

- Merchant Information: Each location or business on Google Maps has detailed information, including its name, address, contact information, and website. This data is usually displayed in merchant profiles and can be scraped to collect key information about businesses in various industries.

- Ratings and Reviews: One of the most valuable Data Points on Google Maps are merchant-related ratings and reviews. This includes average star ratings, individual reviews, reviewer names, review dates, and sometimes reviewer pictures. Reviews can provide valuable insights into customer satisfaction and sentiment.

- Location coordinates: Every place on Google Maps is associated with geographic coordinates (latitude and longitude), which is useful for mapping, geolocation, or location-based analytics.

- Business Hours: Google Maps provides business hours for most businesses, including opening and closing times, which may vary on weekends or holidays.

- Photos and media: Many businesses upload photos to their Google Maps profiles. These images can be scraped to provide additional background information about the business, such as store layout, food (for restaurants), or product displays.

- Categories and Tags: Businesses on Google Maps are labeled with categories (such as restaurants, gyms, or hotels) and sometimes with keywords to help users filter and search for businesses based on their needs.

How to Scrape Google Maps data and reviews using Python

There are at least three ways to scrape Google Maps data efficiently:

Method 1: Use the Scrapeless Google Maps API (Recommended)

Scrapeless provides a well-structured JSON containing all the relevant information extracted from Google Maps search results. It also offers additional comprehensive data such as reviews, photos, and navigation routes. By using this API, you can extract Google Maps data without the need to build your own Google Maps scraper, saving significant time and effort.

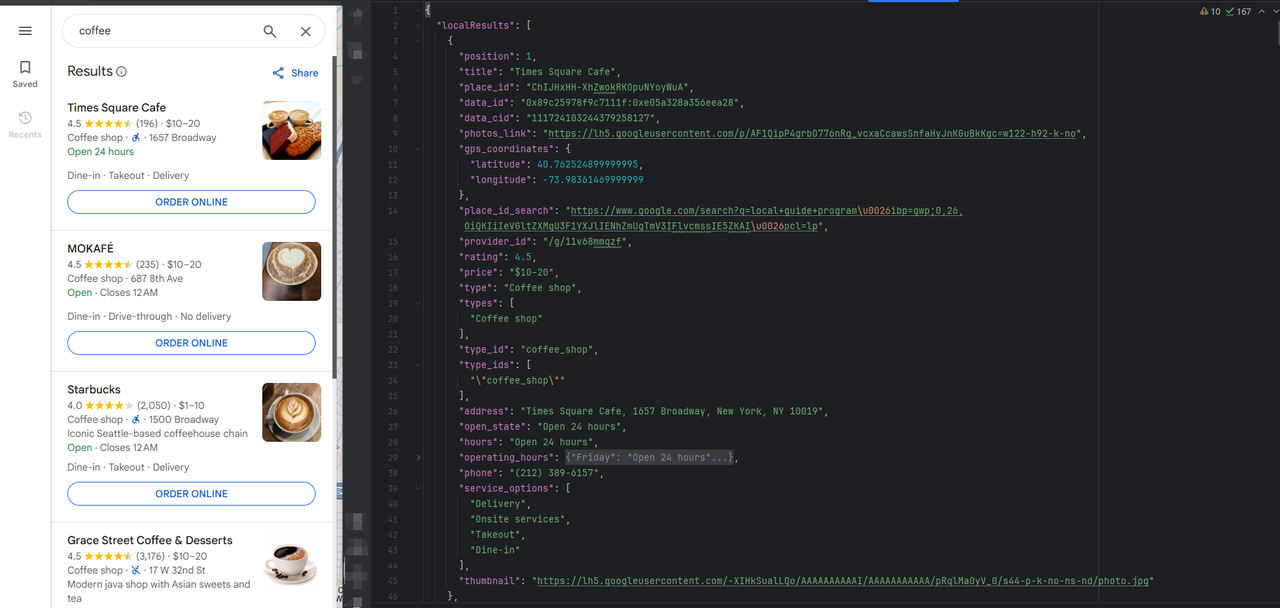

Example of a Scraped JSON Output:

Method 2: Use Google’s Places API

Another approach is to use the Google Places API, but first, you need to set up a Google Cloud project and follow the setup instructions to obtain an API key. Once configured, you can perform searches using HTTP POST requests or the Python SDK.

Drawbacks:

- The initial setup to obtain the API key is complex.

- You may not be able to extract as much data as direct scraping, especially when it comes to reviews or certain media content.

Method 3: Build Your Own DIY Google Maps Scraper

Google Maps dynamically loads its data, making it necessary to use tools like Puppeteer to properly scrape JavaScript-rendered pages. You can leverage Selenium, Pyppeteer, or the Playwright Python package to run a headless browser and extract relevant Google Maps data.

Drawbacks:

- Building your own Google Maps scraping tool is time-consuming.

- You may face challenges such as IP blocking, setting up multiple proxies, and managing request limits.

Scraping Google Map Data with Python [Quick&Easy]

In the following part, we will elaborate on how to scrape Google Map data by combining Python and Scrapeless.

Step 1: The Tools We'll Use

Let's introduce how to scrape Google Maps information using the Scrapeless API. Typically, you might use libraries such as BeautifulSoup, Selenium, Puppeteer, or Requests to build your own DIY solutions for scraping Google Maps data.

But now, you can sit back and relax. We'll handle these tedious tasks for you. With Scrapeless, you no longer need to worry about the various issues that may arise during web scraping. We've got you covered, and all you need to do is run the code.

Scrape Google Map data with ease using Scrapeless, and let us handle the complexities. Whether you're looking to scrape Google Maps reviews, locations, or other valuable information, Scrapeless is your go-to solution. Say goodbye to the hassle of manual scraping and hello to efficient data extraction.

Step 2: Setup and Preparation

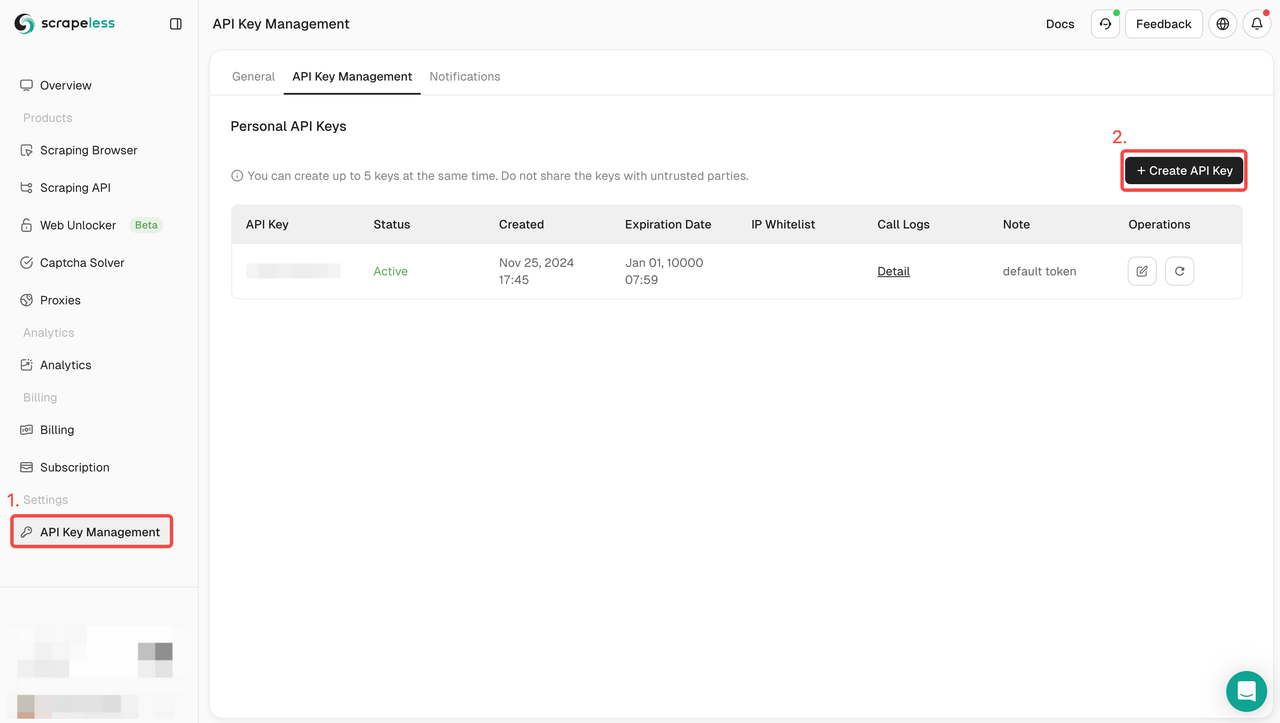

- After signing up for free at scrapeless, you have free $2 to perform searches.

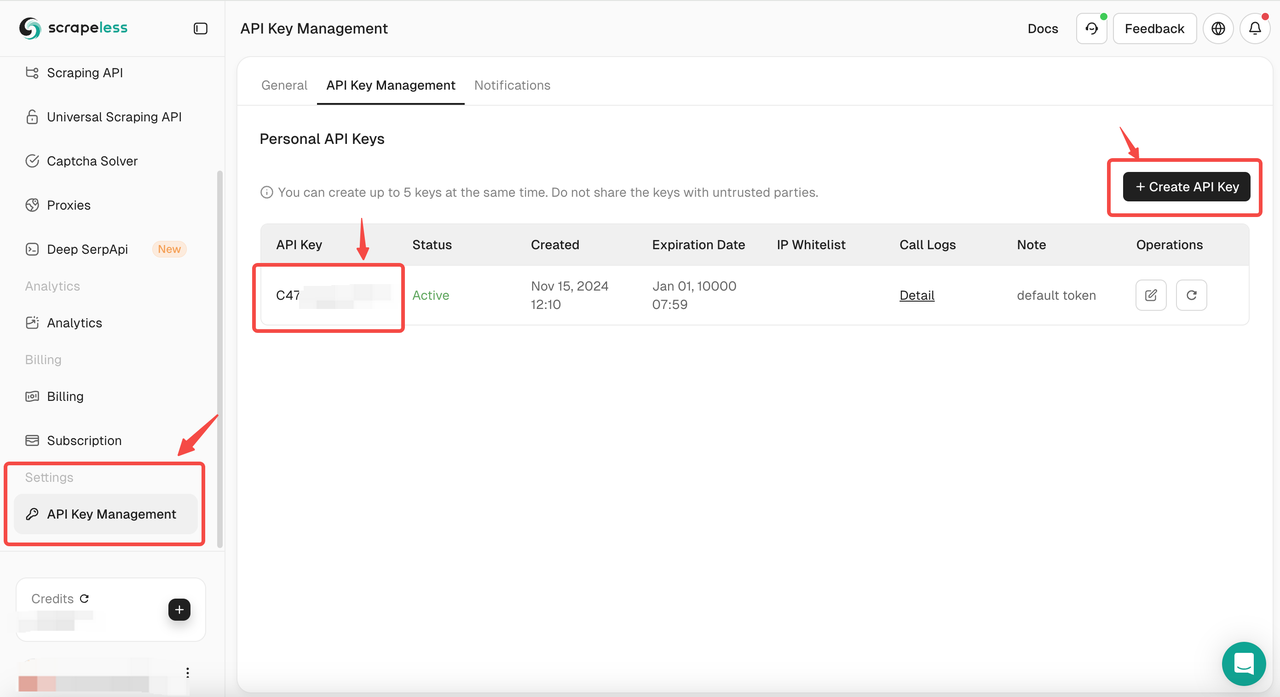

- Navigate to API Key Management. Then click on Create to generate your unique API key. Once created, just click on the API key to copy it.

Step 3: Write scarpeless request code.

Suppose we want to find places in New York based on the coffee keyword. This API requires an ll parameter, which is the latitude and longitude of a certain area. We can use a free tool to get the latitude and longitude.

Visit this link, we just need to enter the city name or area, it will return the relevant longitude and latitude. We separate these numbers with commas to form a complete ll parameter.

Take New York as an example:

- Latitude: 40.712776

- Longitude: -74.005974

So the ll value is @40.712776,-74.005974

Once we have the ll value, we can form the complete Python code of the scarpeless API:

import json

import requests

class Payload:

def __init__(self, actor, input_data):

self.actor = actor

self.input = input_data

def send_request():

host = "api.scrapeless.com"

url = f"https://{host}/api/v1/scraper/request"

token = "your_token"

headers = {

"x-api-token": token

}

input_data = {

"engine": "google_maps",

"q": "coffee",

"type": "search",

"ll": "@40.712776,-74.005974.14z",

}

payload = Payload("scraper.google.maps", input_data)

json_payload = json.dumps(payload.__dict__)

response = requests.post(url, headers=headers, data=json_payload)

if response.status_code != 200:

print("Error:", response.status_code, response.text)

return

print("body", response.text)

if __name__ == "__main__":

send_request()Parameter Description:

- Replace your_token with the API key you obtained from the Scrapeless website.

- The q parameter can be used with any keyword you want to search for.

- The ll parameter specifies the latitude and longitude of the location you want to search.

- Search results can be found in result['local_results'] or result['place_results'].

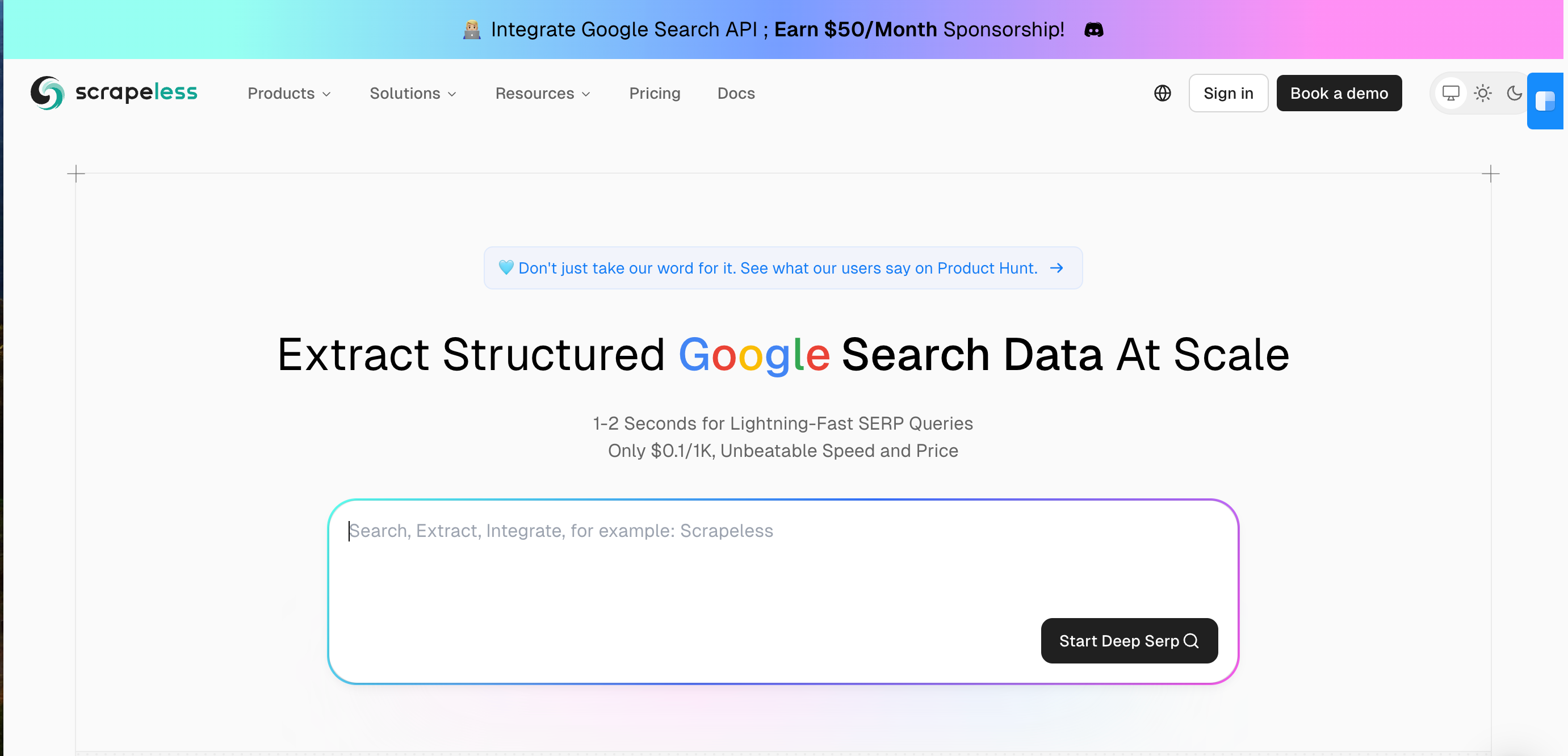

How to use Deep SerpApi to scrape Google map data

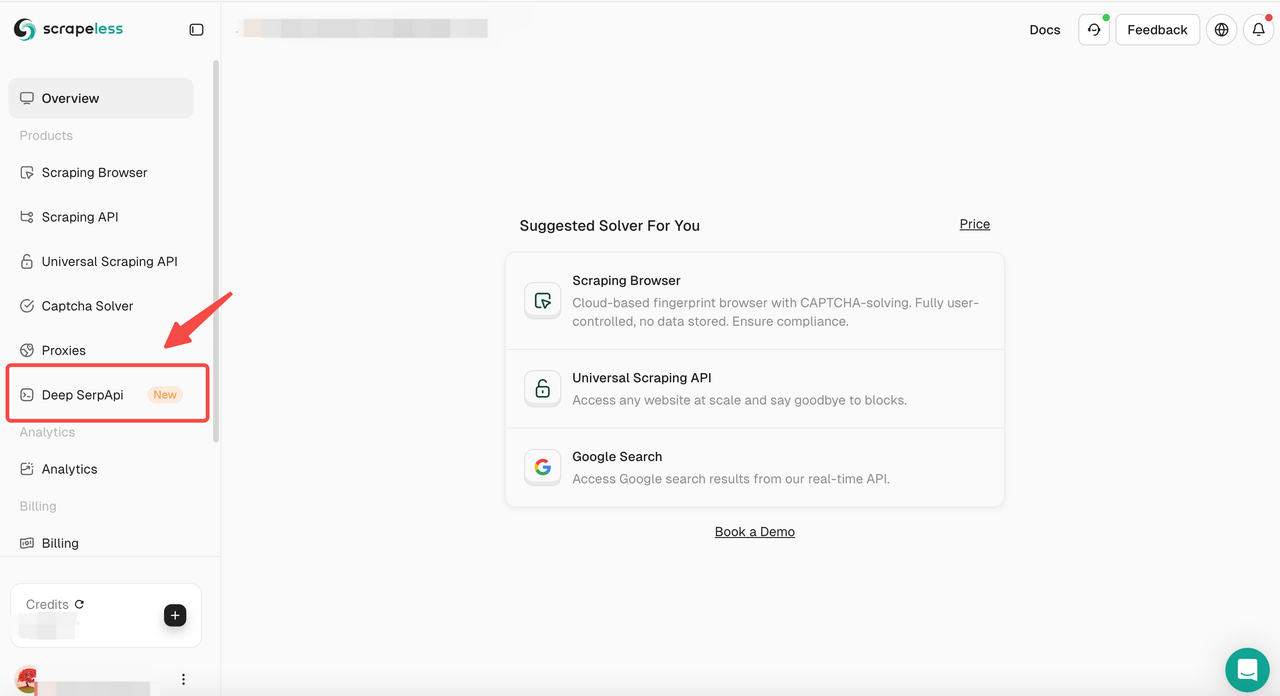

If you don't want to build the crawling parameters and code yourself, you can consider using Scrapeless Deep SerpApi.

Deep SerpAPI provides a cost-effective solution to help developers quickly obtain Google search results page (SERP) data. Its pricing plan is very competitive, with prices as low as $0.1 per 1,000 queries, applicable to more than 20 search results scenarios of Google.

Advantages of Deep SerpAPI

- Lowest price: Deep SerpAPI is priced as low as $0.1/k. It is the lowest price on the market.

- Easy to use: No need to write complex code, just get data through API calls.

- Real-time: Each request can instantly return the latest search results to ensure the timeliness of the data.

- Global support: Through global IP addresses and browser clusters, ensure that search results are consistent with the experience of real users.

- Rich data types: Supports more than 20 search types, such as Google Search, Google Maps, Google Shopping, etc.

- High success rate: Provides up to 99.995% service availability (SLA).

1. Sign Up and Access the API Key

- After signing up for free on Scrapeless, you can get 20,000 free search queries.

- Navigate to API Key Management. Then click Create to generate a unique API key. Once created, just click on AP to copy it.

2. Access the Deep SerpApi Playground

- Then navigate to the "Deep SerpApi" section.

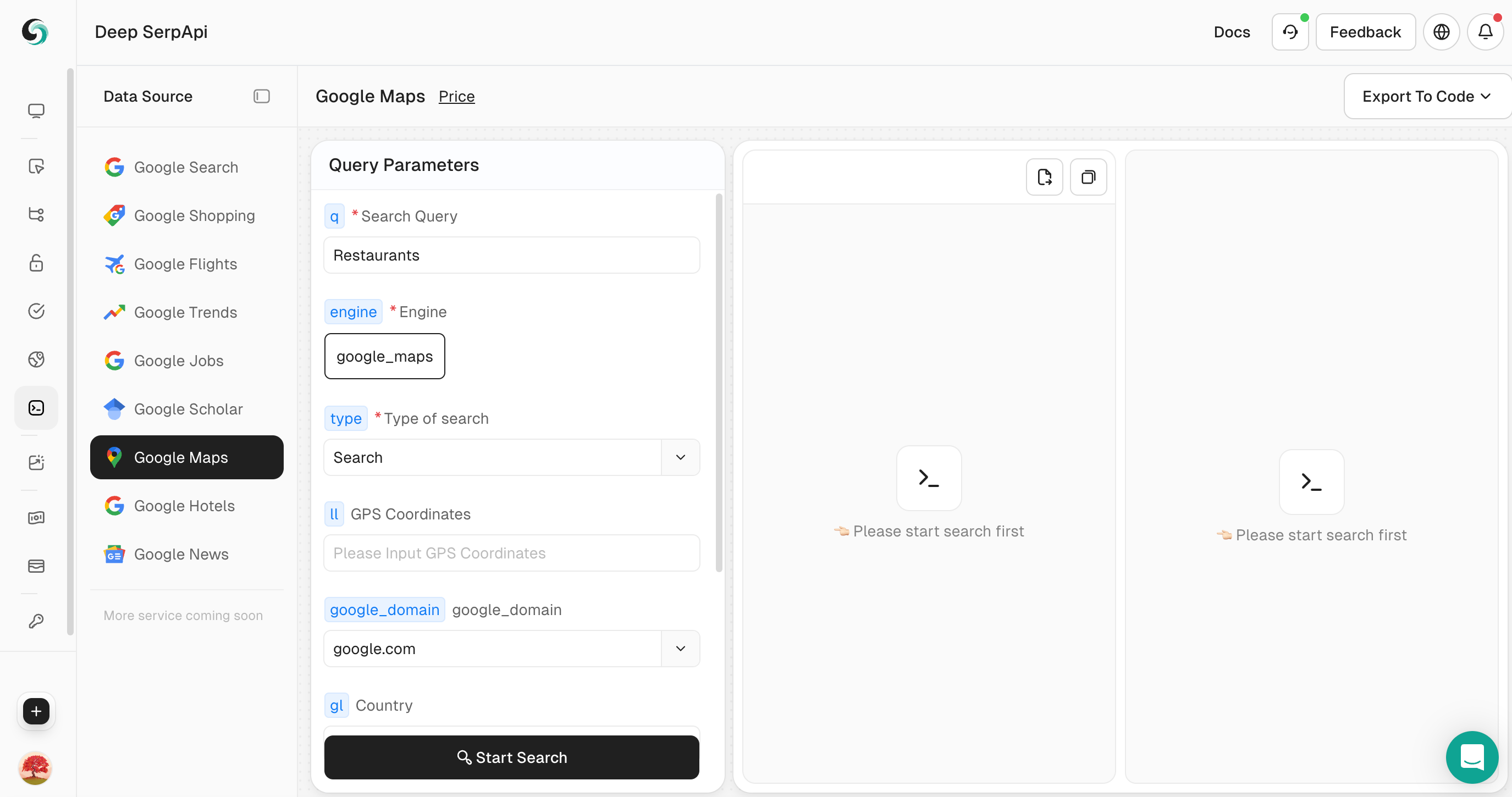

3. Set search parameters

- In the Playground, enter your search keyword, such as "Restaurants".

- Set other parameters, such as country, language, etc.

You can also click to view the official API documentation of Scrapeless to learn about the parameters of Google Map.

4. Perform a search

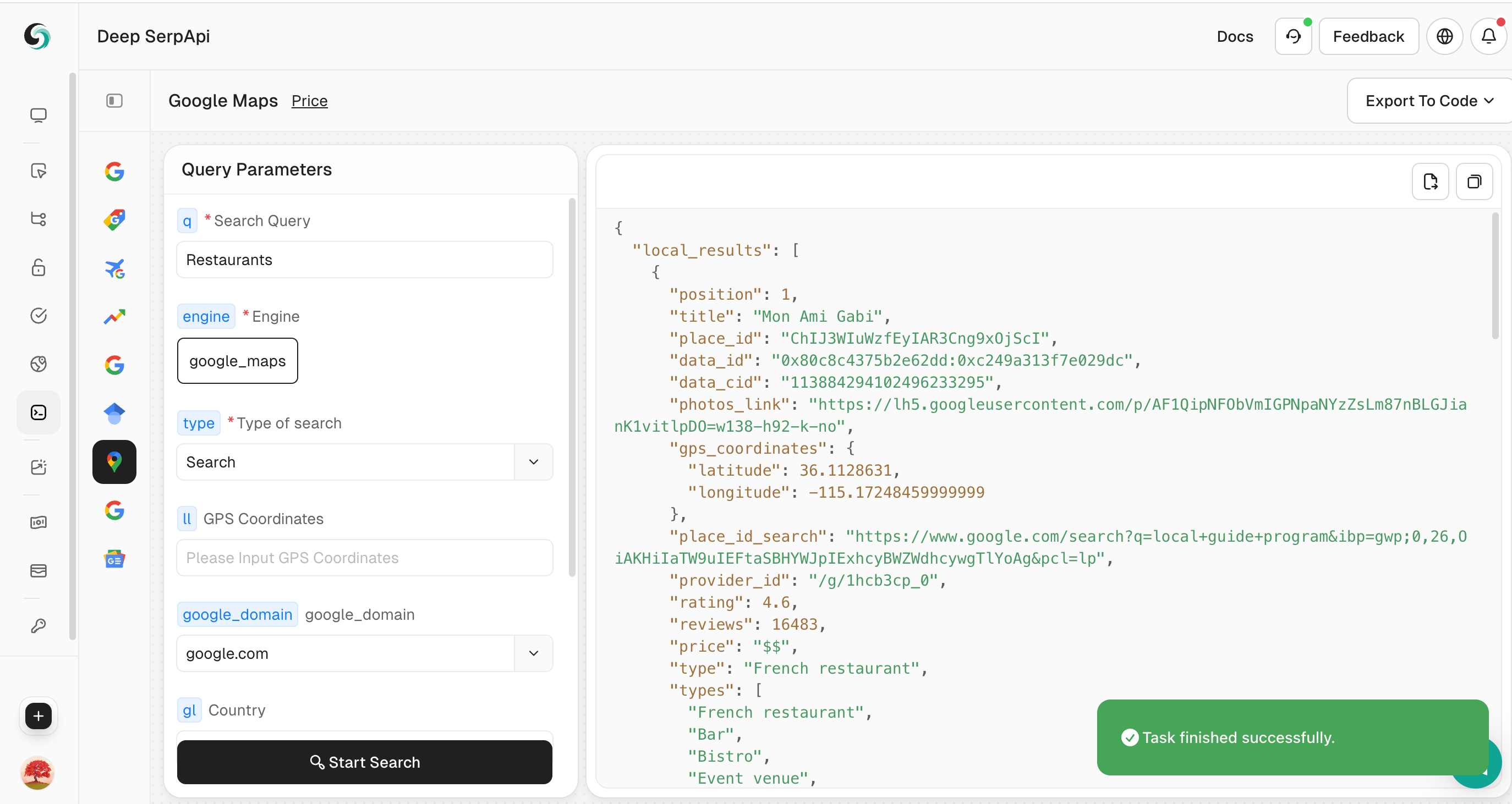

- Click the "Start Search" button, and the Playground will send a request to the Deep Serp API and return structured JSON data.

5. View and export data

- Browse the returned JSON data to view detailed information.

- If necessary, you can click "Export to Code" in the upper right corner to export the data to CSV or JSON format for further analysis.

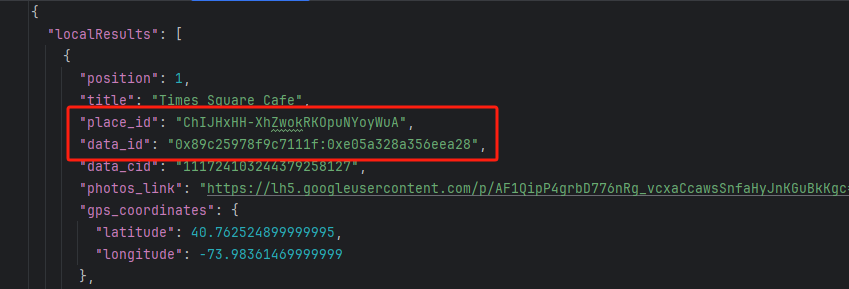

Differences between place results and local results

The Scrapeless API supports two types of searches. By default, the type is set to search, which returns an array of results in local_results. The other type is place, where you set type to place and use the data parameter to provide detailed information for a specific location or business. This type of search returns place_results.

More broadly, local_results is a list provided when the search scope is broader, while place_results offers detailed information for a specific location when the query is very specific, or when you use place_id or data with type=place to fetch results for a particular location.

For detailed instructions on how to use these parameters, please refer to our official Scrapeless API documentation

We can get a lot of data from this Scrapeless Google Map API, such as:

- Title

- GPS coordinates

- Review summary

- Average rating

- Price

- Type

- Address

- Business hours information

- Phone

- Website

- Service options

- And so on.

How to paginate all comments on Google Maps?

In addition to paginating the results we scrape, Google Maps typically returns 20 results per page by default, with the start parameter set to 0 for the first page. If you want to retrieve data for the second page, simply increase the value of the start parameter by 20.

input_data = {

"engine": "google_maps",

"q": "coffee",

"type": "search",

"ll": "@40.712776,-74.005974.14z",

"start": "20",

}We recommend a maximum of 100 (page 6), which is the same behavior as the Google Maps web app; above this limit, results may be duplicated or lost.

How to export Google Maps results to CSV?

If you need data from Google Maps results, you can add the following code. This code example shows you how to store all local_results in CSV. In this example, we saved the title, address, phone, and website.

result = response.json()

local_results = result['local_results']

with open('maps-results.csv', 'w', newline='') as csvfile:

csv_writer = csv.writer(csvfile)

# Write the headers

csv_writer.writerow(["Title", "Address", "Phone Number", "Website"])

# Write the data

for result in local_results:

csv_writer.writerow(

[result["title"], result["address"], result["phone"], result["website"] if "website" in result else ""])

print('Done writing to CSV file.')How to Scrape Google Maps Reviews

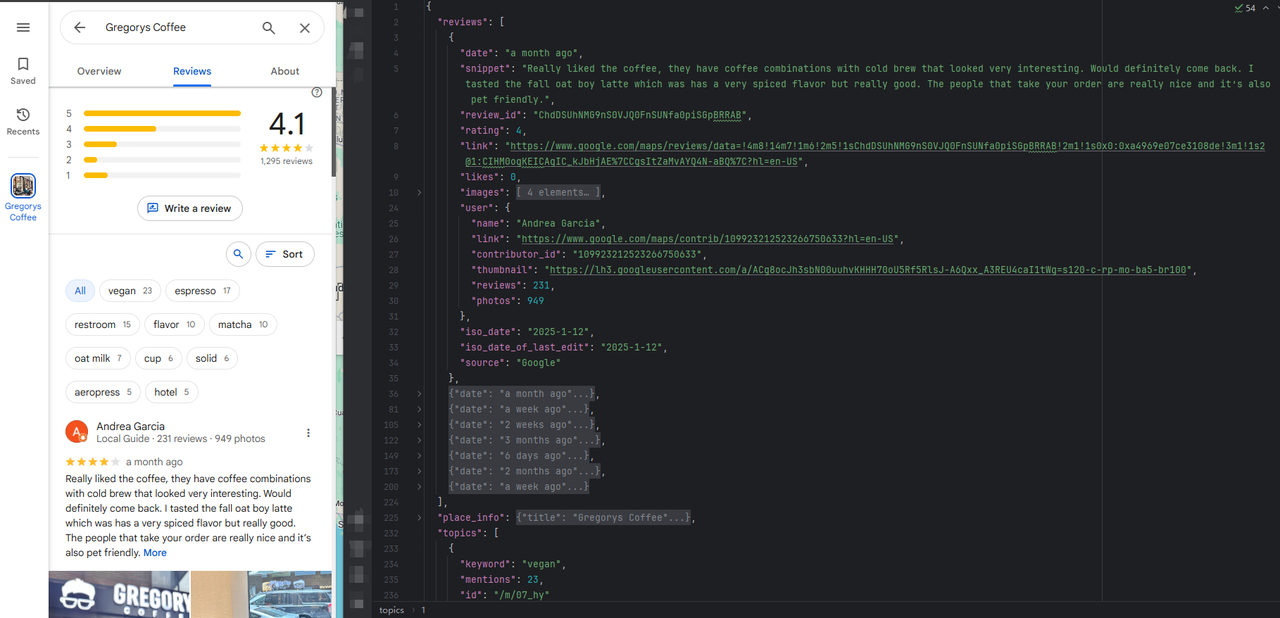

Scrapeless also provides a Reviews API to obtain detailed information about reviews from specific locations. To use this feature, you need to acquire the place_id or data_id.

By simply modifying the parameters in input_data, you can obtain API results corresponding to the parameters you provide. This approach allows you to efficiently scrape Google Maps comments and reviews, leveraging the power of Scrapeless API.

input_data = {

"engine": "google_maps_reviews",

"data_id": "0x89c259af336b3341:0xa4969e07ce3108de",

}Note: The data_id or place_id parameter will be returned in the local_results or place_results results.

The results will include review links, ratings, user details, summaries, and likes. You can use the parameter sort_by to sort by rating from high to low.

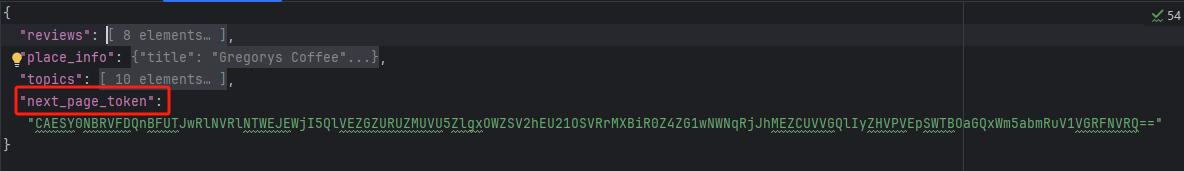

How to paginate reviews

How to paginate the available values in the Reviews API response to get all the reviews data? See below.

- Get the next_page_token in the above Reviews API response:

- Assemble the request parameters to initiate the request, and we will return the next page of Reviews to you:

input_data = {

"engine": "google_maps_reviews",

"data_id": "0x89c259af336b3341:0xa4969e07ce3108de",

"next_page_token": "CAESY0NBRVFDQnBFUTJwRlNVRlNTWEJEWjI5QlVEZGZURUZMUVU5ZlgxOWZSV2hEU21OSVRrMXBiR0Z4ZG1wNWNqRjJhMEZCUVVGQlIyZHVPVEpSWTBOaGQxWm5abmRuV1VGRFNVRQ=="

}What other data can Scrapeless crawl for you

Scrapeless provides multiple scenarios for you to crawl, including the local and location results shown above. In addition, we also provide multiple APIs such as Contributor, Directions API, Photos API, etc. For different APIs, you only need to build different parameters:

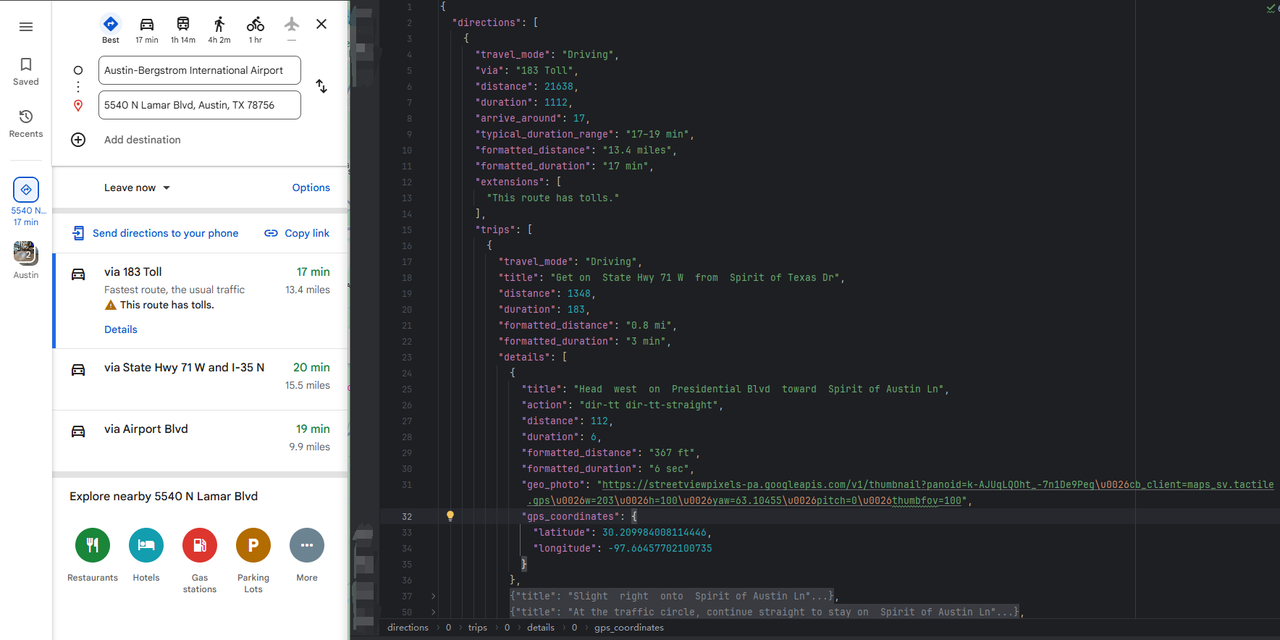

Here, crawling Directions API is used as an example. You only need to change the parameters in input_data in the above code. The results will include distance, time, detailed information of each journey, etc.

input_data = {

"engine": "google_maps_directions",

"start_addr": "Austin-Bergstrom International Airport",

"end_addr": "5540 N Lamar Blvd, Austin, TX 78756, USA",

}In addition, you can use the parameter travel_mode to select the travel mode:

The above is how to crawl Google Maps data and place reviews. I hope it helps you.

Scrapeless Deep SerpApi: Your Powerful Google SERP API Tool

Deep SerpApi is a specialized search engine API designed specifically for large language models (LLMs) and AI agents. It delivers real-time, accurate, and unbiased information, enabling AI applications to efficiently retrieve and process data from Google and beyond.

✅ Access 20+ Google Search API scenarios, integrating data from major search engines.

✅ Cover 20+ data types, including search results, news, videos, images, and more.

✅ Get real-time updates with data refreshed within the last 24 hours.

As part of our future roadmap, we are fully committed to meeting the needs of AI developers by simplifying the integration of dynamic web information into AI-driven solutions. The goal is to provide an ALL-in-One API that allows seamless search and data extraction with a single call.

Exciting Announcement!

🔔 The Developer Support Program is now live: Become a Sponsored Developer by testing and promoting Deep SerpApi, and enjoy 50,000 free queries in the first month!

Conclusion

Scraping Google Maps data and reviews can be a very effective tool. However, without the right tools, it can be a technical challenge, often with issues such as CAPTCHA, IP blocking, and complex data extraction.

With Scrapeless, you can bypass common scraping obstacles and get all types of information.

Sign up now and get 100,000 free requests, and get free credits by joining the Discord community.

Additional Resources

If you are also interested in other Google information scraping, you can read the following detailed articles:

How to Scrape Google Scholar Results

How to Scrape Google Jobs Results

How to Scrape Google Flights Data with Python

How To Scrape Google Trends Data - Easy Guide & Free Trial

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.