How to Scrape Google Play Store with Golang?

Expert Network Defense Engineer

Google Play Store is Google's official app store for Android, where users find and download apps and games. It hosts millions of apps and games.

In this article, we show how to scrape app data and rating information from the Google Play Store with Go, explaining the code step by step.

Let's build a Google Play Store Scraper now!

Why scrape data from Google Play Store?

Scraping data from the Google Play Store can provide valuable insights for a variety of purposes. Here are some key reasons why developers, marketers, and researchers might want to scrape this data:

- Analyze trends in app categories, pricing, and user preferences and identify gaps in the market to develop new apps or improve existing ones.

- Monitor competitors' app performance, including ratings, reviews, and download statistics to understand what features or strategies are working for top-performing apps.

- Extract user reviews to identify common issues, feature requests, or areas for improvement, and run sentiment analysis on them to gauge overall user satisfaction.

- Analyze developer activity and app functionality.

What is a Google Play Scraper?

A Google Play scraper automates the collection of information such as app details, reviews, and ratings. Scrapers can be built in many programming languages: in Python, for example, with libraries such as requests and BeautifulSoup, or Selenium for dynamic content. This article uses Go.

Google Play Scraper is designed to scrape Google Play Store apps, reviews, and developer data based on your search query or URL.

🔍 Extract Google Play data by keyword, specific URL, or app ID.

🆚 Extract official app details, popularity, user reviews, and developer information in one go.

📊 Extract app data from various categories of Google Play Store, including Games, Apps, and Kids sections.

📝 Get 3,000+ results for free.

⏬ Download Google Play data in Excel, CSV, JSON, and other formats.

What data can we extract?

| Data type | Details |
|---|---|
| App Metadata | App Name: The title of the application. Developer Name: The name of the app developer or company. Category: The category the app belongs to (e.g., Games, Productivity, Education). Price: Whether the app is free or paid, and its price if applicable. Size: The size of the app in MB or GB. Version: The current version of the app. Release Date: When the app was first published or last updated. |
| User Reviews and Ratings | Review Text: The content of user reviews. Rating: The star rating given by users (e.g., 1 to 5 stars). Review Date: When the review was posted. Reviewer Information: Username, location (if available), and device information. |
| Download Statistics | Number of Downloads: Approximate download count (e.g., "1,000,000+"). Download Range: The range of downloads (e.g., "10,000–50,000"). |
| App Performance Metrics | Rating Distribution: Breakdown of ratings (e.g., percentage of 1-star, 2-star, etc.). Ranking: The app’s rank in its category or overall. |
| In-App Purchases and Subscriptions | Details about in-app purchases, subscription plans, and pricing. |
| Screenshots and Videos | URLs or links to screenshots and promotional videos. |
| Developer Contact Information | Email addresses, websites, or social media links provided by the developer. |

How to Scrape Google Play Store with Golang?

Prerequisites: Configure the environment

Go IDE Installation

Any text editor works for Go, but an IDE such as GoLand or Visual Studio Code with the Go extension makes development easier.

Go language environment installation

Go language supports the following systems:

- Linux

- FreeBSD

- Mac OS X (also known as Darwin)

- Windows

Download the installation package from https://go.dev/dl/.

If that page cannot be opened from your network, use the mirror at https://golang.google.cn/dl/. Choose the package that matches your operating system and architecture.

- Installation on UNIX/Linux/Mac OS X and FreeBSD

On UNIX/Linux/Mac OS X and FreeBSD, install Go from the prebuilt binary archive:

- Download the binary package for your platform, for example go1.24.1.linux-amd64.tar.gz.

- Extract the archive into the /usr/local directory (if an older Go installation already exists in /usr/local/go, remove it first):

Bash

tar -C /usr/local -xzf go1.24.1.linux-amd64.tar.gz

- Add the /usr/local/go/bin directory to the PATH environment variable:

Bash

export PATH=$PATH:/usr/local/go/bin

This only sets PATH for the current session; the change is lost when you close the terminal.

To make it permanent, add the same line to the end of ~/.bash_profile (or /etc/profile to apply it to all users):

Bash

export PATH=$PATH:/usr/local/go/bin

Then reload the file you edited:

Bash

source ~/.bash_profile

or

source /etc/profile

Note: On macOS, you can instead double-click the installer package ending in .pkg. It installs Go under /usr/local/go/.

- Installation on Windows

On Windows, install Go with the .msi package from the download list (for example, go1.24.1.windows-amd64.msi).

By default, the installer places Go under Program Files (for example, C:\Program Files\Go) and adds its bin directory to the PATH environment variable. Close and reopen any open command prompts so they pick up the change, then run go version to confirm the installation.

Scrape app data

With the environment ready, we can start scraping app data from the Google Play Store.

Step 1. Get the raw data

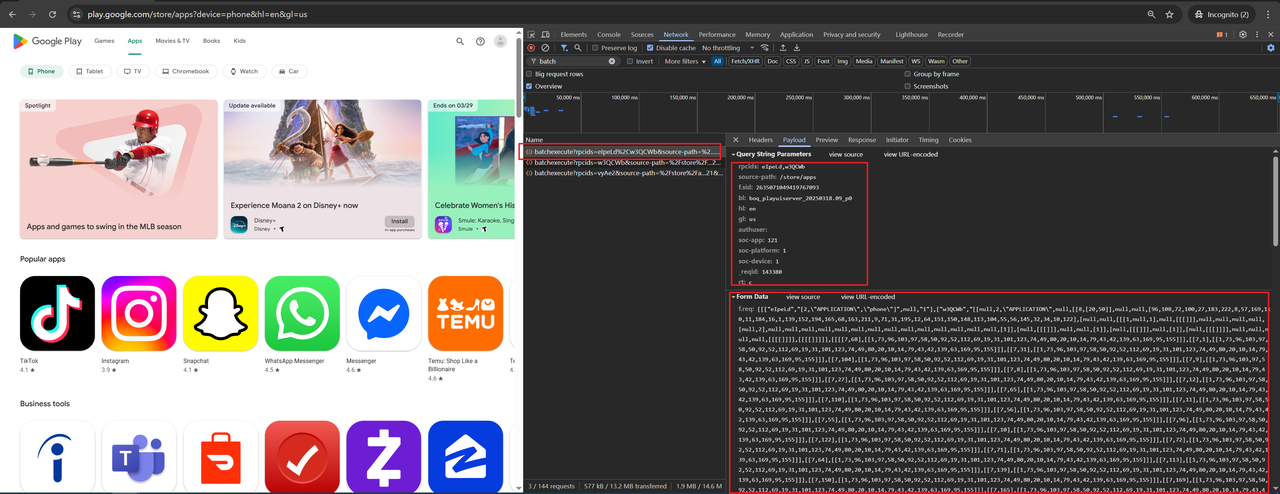

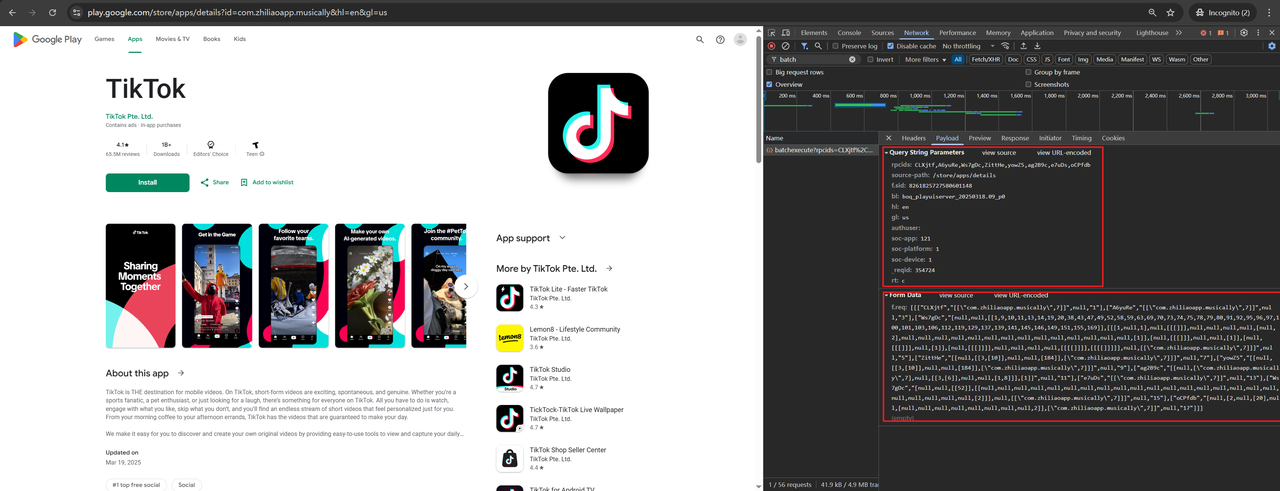

Observe the Apps page of Google Play Store, select phone by default, the url path is: https://play.google.com/store/apps?device=phone&hl=en&gl=us, the actual data request interface is shown in the figure:

The request carries two kinds of parameters: Query String Parameters and Form Data. Next, we use Go to build the same HTTP request and fetch the phone app data from the Apps page.

No third-party dependencies are needed; only these standard library imports are required:

Go

import (

"io"

"log"

"net/http"

"net/url"

"os"

"strings"

)

The code for getting data is as follows:

Go

func main() {

// Build Query String Parameters

baseURL := buildUrl()

// Build Form Data

data := buildFormData()

client := &http.Client{}

req, err := http.NewRequest("POST", baseURL.String(), data)

if err != nil {

log.Fatal(err)

}

// Set Headers

setHeaders(req)

resp, err := client.Do(req)

if err != nil {

log.Fatal(err)

}

defer resp.Body.Close()

bodyText, err := io.ReadAll(resp.Body)

if err != nil {

log.Fatal(err)

}

// Save the raw response to response.txt for inspection

if err := os.WriteFile("response.txt", bodyText, 0o644); err != nil {

log.Fatal(err)

}

}

func setHeaders(req *http.Request) {

req.Header.Set("accept", "*/*")

req.Header.Set("accept-language", "en")

req.Header.Set("cache-control", "no-cache")

req.Header.Set("content-type", "application/x-www-form-urlencoded;charset=UTF-8")

req.Header.Set("origin", "https://play.google.com")

req.Header.Set("pragma", "no-cache")

req.Header.Set("priority", "u=1, i")

req.Header.Set("referer", "https://play.google.com/")

req.Header.Set("sec-ch-ua", `"Chromium";v="134", "Not:A-Brand";v="24", "Google Chrome";v="134"`)

req.Header.Set("sec-ch-ua-arch", `"x86"`)

req.Header.Set("sec-ch-ua-bitness", `"64"`)

req.Header.Set("sec-ch-ua-form-factors", `"Desktop"`)

req.Header.Set("sec-ch-ua-full-version", `"134.0.6998.117"`)

req.Header.Set("sec-ch-ua-full-version-list", `"Chromium";v="134.0.6998.117", "Not:A-Brand";v="24.0.0.0", "Google Chrome";v="134.0.6998.117"`)

req.Header.Set("sec-ch-ua-mobile", "?0")

req.Header.Set("sec-ch-ua-model", `""`)

req.Header.Set("sec-ch-ua-platform", `"Windows"`)

req.Header.Set("sec-ch-ua-platform-version", `"10.0.0"`)

req.Header.Set("sec-ch-ua-wow64", "?0")

req.Header.Set("sec-fetch-dest", "empty")

req.Header.Set("sec-fetch-mode", "cors")

req.Header.Set("sec-fetch-site", "same-origin")

req.Header.Set("user-agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/134.0.0.0 Safari/537.36")

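req.Header.Set("x-same-domain", "1")

// The cookie below comes from one browser session and will expire; replace it with the cookie from your own request in the developer tools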

req.Header.Set("cookie", "AEC=AVcja2e-yhtOeM_UbfLomoefoXdXonq1CsRoDl2NqMMN3GzsPm_bxIVfcw; OGPC=19046228-1:; NID=522=tiP_XNKxalG_Kp8umeb53_yO6GGBe4sO3TKR0NhvTYHKurU_ZJwZT4kYrQ2uhkqR2vaNScGpBjMHzKs4-WK61D7ZIpRA7m-nSqsuniquPxy0i8ntIu1urVyM-wFOKXN3ldls94qyt1bGa3DE4FM7r8hymCQyMV1of90dxsY8-t8X6KzuW0vQcJrkRrDuCm3ZtvFjBjmPeRNnqeVNJqUo5Uq48YAM2XSFVevrwFSC9tJifIbsMVqD_rHnht2TMauc0fMJ6ERpni3UtFK7BHr94nAZXVt56HOWPBRhGqs; _gid=GA1.3.1046009992.1742443368; _gat_UA199959031=1; _gcl_au=1.1.669526702.1742443368; OTZ=8002323_24_24__24_; _ga=GA1.1.522768357.1742443368; _ga_6VGGZHMLM2=GS1.1.1742443368.1.1.1742443377.0.0.0")

}

func buildFormData() *strings.Reader {

// Build Form Data

// The f.req payload differs by page and by the options selected; copy it from the Form Data of your browser request. This value is only an example and must sit on a single line in your source file

var freq = "[[[\"eIpeLd\",\"[2,\\\"APPLICATION\\\",\\\"phone\\\"]\",null,\"1\"],[\"w3QCWb\",\"[[null,2,\\\"APPLICATION\\\",null,[[8,[20,50]],null,null,[96,108,72,100,27,183,222,8,57,169,110,11,184,16,1,139,152,194,165,68,163,211,9,71,31,195,12,64,151,150,148,113,104,55,56,145,32,34,10,122],[null,null,[[[1,null,1],null,[[[]]],null,null,null,null,[null,2],null,null,null,null,null,null,null,null,null,null,null,null,null,null,[1]],[null,[[[]]],null,null,[1]],[null,[[[]]],null,[1]],[null,[[[]]]],null,null,null,null,[[[[]]]],[[[[]]]]],[[[[7,68],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,1],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,31],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,104],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,9],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,8],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,27],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,12],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,65],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,110],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,11],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,56],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,55],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,96],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,1
39,63,169,95,155]]],[[7,10],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,122],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,72],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,71],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,64],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,113],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,139],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,150],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,169],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,165],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,151],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,163],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,32],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,16],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,108],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,100],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,194],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,211],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,184],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,7
4,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[7,183],[[1,73,96,103,97,58,50,92,52,112,69,19,31,101,123,74,49,80,20,10,14,79,43,42,139,63,169,95,155]]],[[9,68],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,1],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,31],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,104],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,9],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,8],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,27],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,12],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,65],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,110],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,11],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,56],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,55],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,96],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,10],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,122],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,72],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,71],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,64],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,113],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,139],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,150],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,169],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,165],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,151],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,163],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,32],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,16],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,108],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,100],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,194],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,211],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,184],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[9,183],[[1,7,9,24,12,31,5,15,27,8,13,10]]],[[17,68],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,1],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,31],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,104],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,9],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,8],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,27],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,12],[[1,7,9,25,13,3
1,5,41,27,8,14,10]]],[[17,65],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,110],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,11],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,56],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,55],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,96],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,10],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,122],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,72],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,71],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,64],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,113],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,139],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,150],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,169],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,165],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,151],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,163],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,32],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,16],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,108],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,100],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,194],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,211],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,184],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[17,183],[[1,7,9,25,13,31,5,41,27,8,14,10]]],[[65,68],[[1,5,4,7,11,6]]],[[65,1],[[1,5,4,7,11,6]]],[[65,31],[[1,5,4,7,11,6]]],[[65,104],[[1,5,4,7,11,6]]],[[65,9],[[1,5,4,7,11,6]]],[[65,8],[[1,5,4,7,11,6]]],[[65,27],[[1,5,4,7,11,6]]],[[65,12],[[1,5,4,7,11,6]]],[[65,65],[[1,5,4,7,11,6]]],[[65,110],[[1,5,4,7,11,6]]],[[65,11],[[1,5,4,7,11,6]]],[[65,56],[[1,5,4,7,11,6]]],[[65,55],[[1,5,4,7,11,6]]],[[65,96],[[1,5,4,7,11,6]]],[[65,10],[[1,5,4,7,11,6]]],[[65,122],[[1,5,4,7,11,6]]],[[65,72],[[1,5,4,7,11,6]]],[[65,71],[[1,5,4,7,11,6]]],[[65,64],[[1,5,4,7,11,6]]],[[65,113],[[1,5,4,7,11,6]]],[[65,139],[[1,5,4,7,11,6]]],[[65,150],[[1,5,4,7,11,6]]],[[65,169],[[1,5,4,7,11,6]]],[[65,165],[[1,5,4,7,11,6]]],[[65,151],[[1,5,4,7,11,6]]],[[65,163],[[1,5,4,7,11,6]]],[[65,32],[[1,5,4,7,11,6]]],[[65,16],[[1,5,4,7,11,6]]],[[65,108],[[1,5,4,7,11,6]]],[[6
5,100],[[1,5,4,7,11,6]]],[[65,194],[[1,5,4,7,11,6]]],[[65,211],[[1,5,4,7,11,6]]],[[65,184],[[1,5,4,7,11,6]]],[[65,183],[[1,5,4,7,11,6]]],[[10,68],[[1,7,6,9,15,8]]],[[10,1],[[1,7,6,9,15,8]]],[[10,31],[[1,7,6,9,15,8]]],[[10,104],[[1,7,6,9,15,8]]],[[10,9],[[1,7,6,9,15,8]]],[[10,8],[[1,7,6,9,15,8]]],[[10,27],[[1,7,6,9,15,8]]],[[10,12],[[1,7,6,9,15,8]]],[[10,65],[[1,7,6,9,15,8]]],[[10,110],[[1,7,6,9,15,8]]],[[10,11],[[1,7,6,9,15,8]]],[[10,56],[[1,7,6,9,15,8]]],[[10,55],[[1,7,6,9,15,8]]],[[10,96],[[1,7,6,9,15,8]]],[[10,10],[[1,7,6,9,15,8]]],[[10,122],[[1,7,6,9,15,8]]],[[10,72],[[1,7,6,9,15,8]]],[[10,71],[[1,7,6,9,15,8]]],[[10,64],[[1,7,6,9,15,8]]],[[10,113],[[1,7,6,9,15,8]]],[[10,139],[[1,7,6,9,15,8]]],[[10,150],[[1,7,6,9,15,8]]],[[10,169],[[1,7,6,9,15,8]]],[[10,165],[[1,7,6,9,15,8]]],[[10,151],[[1,7,6,9,15,8]]],[[10,163],[[1,7,6,9,15,8]]],[[10,32],[[1,7,6,9,15,8]]],[[10,16],[[1,7,6,9,15,8]]],[[10,108],[[1,7,6,9,15,8]]],[[10,100],[[1,7,6,9,15,8]]],[[10,194],[[1,7,6,9,15,8]]],[[10,211],[[1,7,6,9,15,8]]],[[10,184],[[1,7,6,9,15,8]]],[[10,183],[[1,7,6,9,15,8]]],[[58,68],[[5,3,1,2,6,8]]],[[58,1],[[5,3,1,2,6,8]]],[[58,31],[[5,3,1,2,6,8]]],[[58,104],[[5,3,1,2,6,8]]],[[58,9],[[5,3,1,2,6,8]]],[[58,8],[[5,3,1,2,6,8]]],[[58,27],[[5,3,1,2,6,8]]],[[58,12],[[5,3,1,2,6,8]]],[[58,65],[[5,3,1,2,6,8]]],[[58,110],[[5,3,1,2,6,8]]],[[58,11],[[5,3,1,2,6,8]]],[[58,56],[[5,3,1,2,6,8]]],[[58,55],[[5,3,1,2,6,8]]],[[58,96],[[5,3,1,2,6,8]]],[[58,10],[[5,3,1,2,6,8]]],[[58,122],[[5,3,1,2,6,8]]],[[58,72],[[5,3,1,2,6,8]]],[[58,71],[[5,3,1,2,6,8]]],[[58,64],[[5,3,1,2,6,8]]],[[58,113],[[5,3,1,2,6,8]]],[[58,139],[[5,3,1,2,6,8]]],[[58,150],[[5,3,1,2,6,8]]],[[58,169],[[5,3,1,2,6,8]]],[[58,165],[[5,3,1,2,6,8]]],[[58,151],[[5,3,1,2,6,8]]],[[58,163],[[5,3,1,2,6,8]]],[[58,32],[[5,3,1,2,6,8]]],[[58,16],[[5,3,1,2,6,8]]],[[58,108],[[5,3,1,2,6,8]]],[[58,100],[[5,3,1,2,6,8]]],[[58,194],[[5,3,1,2,6,8]]],[[58,211],[[5,3,1,2,6,8]]],[[58,184],[[5,3,1,2,6,8]]],[[58,183],[[5,3,1,2,6,8]]],[[44,68],[[3,4,9,6,7,2,8,1,10,11,5]
]],[[44,1],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,31],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,104],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,9],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,8],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,27],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,12],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,65],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,110],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,11],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,56],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,55],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,96],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,10],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,122],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,72],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,71],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,64],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,113],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,139],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,150],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,169],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,165],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,151],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,163],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,32],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,16],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,108],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,100],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,194],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,211],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,184],[[3,4,9,6,7,2,8,1,10,11,5]]],[[44,183],[[3,4,9,6,7,2,8,1,10,11,5]]],[[1,68],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,1],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,31],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,104],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,9],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,8],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,27],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,12],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,65],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,110],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,11],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,56],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,4
2,16,10,7]]],[[1,55],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,96],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,10],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,122],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,72],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,71],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,64],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,113],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,139],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,150],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,169],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,165],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,151],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,163],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,32],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,16],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,108],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,100],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,194],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,211],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,184],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[1,183],[[1,5,14,38,19,29,34,4,12,11,6,30,43,40,42,16,10,7]]],[[4,68],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,1],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,31],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,104],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,9],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,8],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,27],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,12],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,65],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,110],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,11],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,56],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,55],[[1,3,5,4,7,6,
11,19,21,17,15,12,16,20]]],[[4,96],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,10],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,122],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,72],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,71],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,64],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,113],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,139],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,150],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,169],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,165],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,151],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,163],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,32],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,16],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,108],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,100],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,194],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,211],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,184],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[4,183],[[1,3,5,4,7,6,11,19,21,17,15,12,16,20]]],[[3,68],[[1,5,14,4,10,17]]],[[3,1],[[1,5,14,4,10,17]]],[[3,31],[[1,5,14,4,10,17]]],[[3,104],[[1,5,14,4,10,17]]],[[3,9],[[1,5,14,4,10,17]]],[[3,8],[[1,5,14,4,10,17]]],[[3,27],[[1,5,14,4,10,17]]],[[3,12],[[1,5,14,4,10,17]]],[[3,65],[[1,5,14,4,10,17]]],[[3,110],[[1,5,14,4,10,17]]],[[3,11],[[1,5,14,4,10,17]]],[[3,56],[[1,5,14,4,10,17]]],[[3,55],[[1,5,14,4,10,17]]],[[3,96],[[1,5,14,4,10,17]]],[[3,10],[[1,5,14,4,10,17]]],[[3,122],[[1,5,14,4,10,17]]],[[3,72],[[1,5,14,4,10,17]]],[[3,71],[[1,5,14,4,10,17]]],[[3,64],[[1,5,14,4,10,17]]],[[3,113],[[1,5,14,4,10,17]]],[[3,139],[[1,5,14,4,10,17]]],[[3,150],[[1,5,14,4,10,17]]],[[3,169],[[1,5,14,4,10,17]]],[[3,165],[[1,5,14,4,10,17]]],[[3,151],[[1,5,14,4,10,17]]],[[3,163],[[1,5,14,4,10,17]]],[[3,32],[[1,5,14,4,10,17]]],[[3,16],[[1,5,14,4,10,17]]],[[3,108],[[1,5,14,4,10,17]]],[[3,100],[[1,5,14,4,10,17]]],[[3,194],[[1,5,14,4,10,17]]],[[3,211],[[1,5,14,4,10,17]]],[[3,184],[[1,5,14,4,10,
17]]],[[3,183],[[1,5,14,4,10,17]]],[[2,68],[[1,5,7,4,13,16,12,18]]],[[2,1],[[1,5,7,4,13,16,12,18]]],[[2,31],[[1,5,7,4,13,16,12,18]]],[[2,104],[[1,5,7,4,13,16,12,18]]],[[2,9],[[1,5,7,4,13,16,12,18]]],[[2,8],[[1,5,7,4,13,16,12,18]]],[[2,27],[[1,5,7,4,13,16,12,18]]],[[2,12],[[1,5,7,4,13,16,12,18]]],[[2,65],[[1,5,7,4,13,16,12,18]]],[[2,110],[[1,5,7,4,13,16,12,18]]],[[2,11],[[1,5,7,4,13,16,12,18]]],[[2,56],[[1,5,7,4,13,16,12,18]]],[[2,55],[[1,5,7,4,13,16,12,18]]],[[2,96],[[1,5,7,4,13,16,12,18]]],[[2,10],[[1,5,7,4,13,16,12,18]]],[[2,122],[[1,5,7,4,13,16,12,18]]],[[2,72],[[1,5,7,4,13,16,12,18]]],[[2,71],[[1,5,7,4,13,16,12,18]]],[[2,64],[[1,5,7,4,13,16,12,18]]],[[2,113],[[1,5,7,4,13,16,12,18]]],[[2,139],[[1,5,7,4,13,16,12,18]]],[[2,150],[[1,5,7,4,13,16,12,18]]],[[2,169],[[1,5,7,4,13,16,12,18]]],[[2,165],[[1,5,7,4,13,16,12,18]]],[[2,151],[[1,5,7,4,13,16,12,18]]],[[2,163],[[1,5,7,4,13,16,12,18]]],[[2,32],[[1,5,7,4,13,16,12,18]]],[[2,16],[[1,5,7,4,13,16,12,18]]],[[2,108],[[1,5,7,4,13,16,12,18]]],[[2,100],[[1,5,7,4,13,16,12,18]]],[[2,194],[[1,5,7,4,13,16,12,18]]],[[2,211],[[1,5,7,4,13,16,12,18]]],[[2,184],[[1,5,7,4,13,16,12,18]]],[[2,183],[[1,5,7,4,13,16,12,18]]]]]],null,null,[[[1,2],[10,8,9]]]],null,2],[1,1]]\",null,\"2\"]]]"

// URL-encode f.req as application/x-www-form-urlencoded

form := url.Values{}

form.Set("f.req", freq)

return strings.NewReader(form.Encode())

}

func buildUrl() *url.URL {

baseURL := &url.URL{

Scheme: "https",

Host: "play.google.com",

Path: "/_/PlayStoreUi/data/batchexecute",

}

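// Build Query String Parameters

// f.sid, bl, and _reqid were copied from one browser session and may change over time; refresh them from your own request if the call stops working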

params := url.Values{}

params.Add("rpcids", "eIpeLd,w3QCWb")

params.Add("source-path", "/store/apps")

params.Add("f.sid", "2635071049419767093")

params.Add("bl", "boq_playuiserver_20250318.09_p0")

params.Add("hl", "en")

params.Add("gl", "us")

params.Add("authuser", "")

params.Add("soc-app", "121")

params.Add("soc-platform", "1")

params.Add("soc-device", "1")

params.Add("_reqid", "143380")

params.Add("rt", "c")

baseURL.RawQuery = params.Encode()

return baseURL

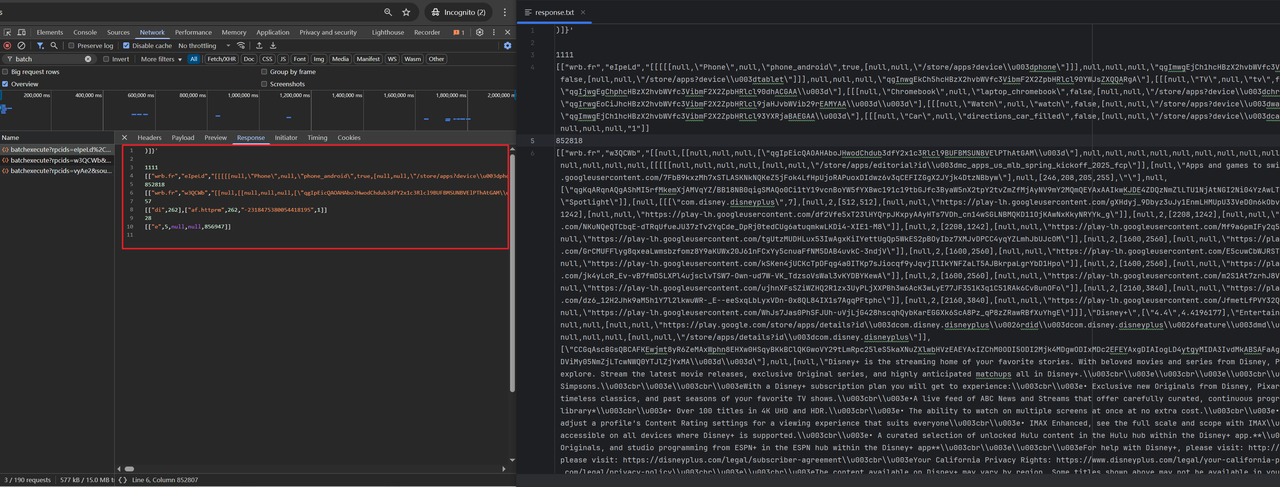

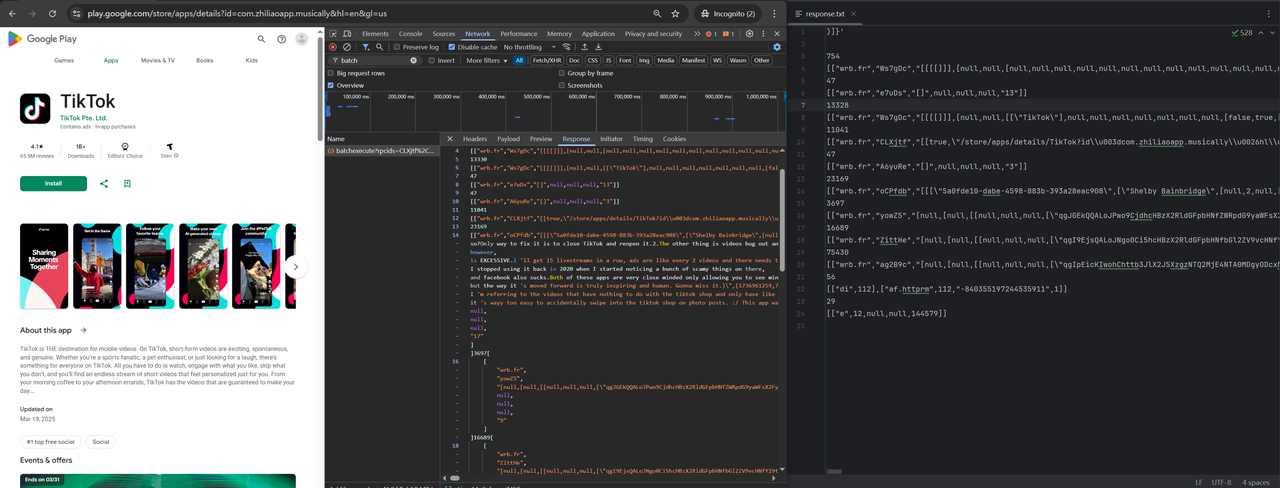

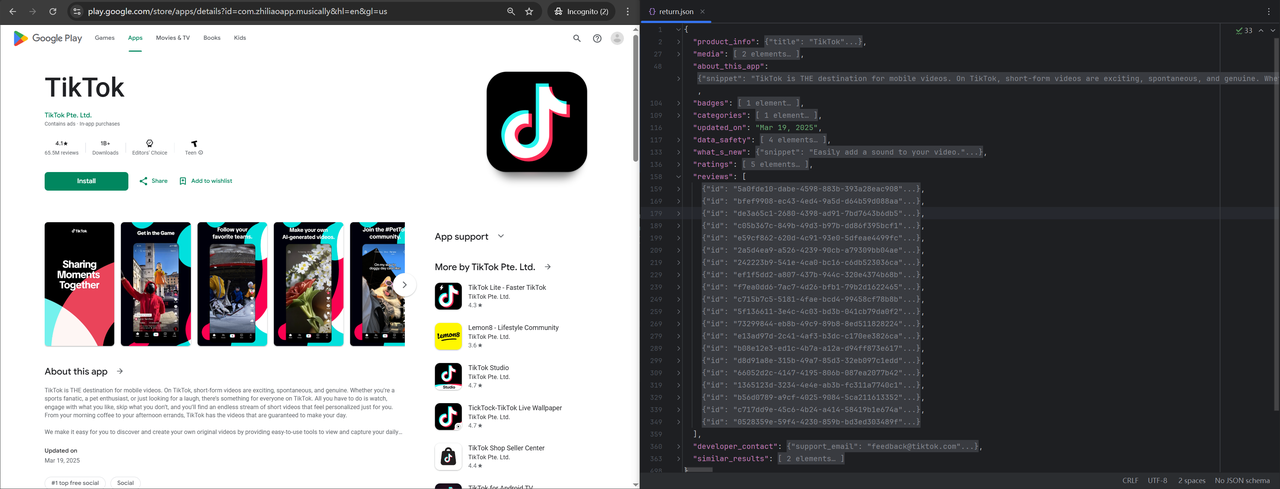

}You will see the response.txt file in the directory where the code is located, as shown in the figure (the right side shows the data you requested):

Step 2. Data analysis

The raw response is hard to read, so we need to parse it.

First, define several structs to hold the parsed data, for example:

Go

type Response struct {

ChartOption []ChartOption `json:"chart_option,omitempty"`

HighlightItem [][]HighlightItem `json:"highlight_item,omitempty"`

OrganicResults []OrganicResults `json:"organic_results,omitempty"`

// product info

ProductInfo *ProductInfo `json:"product_info,omitempty"`

Media *Media `json:"media,omitempty"`

AboutThisApp *AboutThisApp `json:"about_this_app,omitempty"`

Badges []Badges `json:"badges,omitempty"`

Categories []Categories `json:"categories,omitempty"`

// format: Mar 7, 2025

UpdatedOn string `json:"updated_on,omitempty"`

DataSafety []DataSafety `json:"data_safety,omitempty"`

WhatIsNew *WhatIsNew `json:"what_s_new,omitempty"`

Ratings []Ratings `json:"ratings,omitempty"`

Reviews []Review `json:"reviews,omitempty"`

DeveloperContact *DeveloperContact `json:"developer_contact,omitempty"`

SimilarResults []SimilarResults `json:"similar_results,omitempty"`

}

type OrganicResults struct {

Title string `json:"title,omitempty"`

Item []Item `json:"item,omitempty"`

}

type ChartOption struct {

Text string `json:"text,omitempty"`

Value string `json:"value,omitempty"`

}

type HighlightItem struct {

Title string `json:"title,omitempty"`

Subtitle string `json:"subtitle,omitempty"`

Link string `json:"link,omitempty"`

ProductID string `json:"product_id,omitempty"`

SerpapiLink string `json:"serpapi_link,omitempty"`

Thumbnail string `json:"thumbnail,omitempty"`

}

type Item struct {

Title string `json:"title,omitempty"`

Link string `json:"link,omitempty"`

ProductID string `json:"product_id,omitempty"`

SerpapiLink string `json:"serpapi_link,omitempty"`

Rating float64 `json:"rating,omitempty"`

Author string `json:"author,omitempty"`

Category string `json:"category,omitempty"`

Downloads string `json:"downloads,omitempty"`

Thumbnail string `json:"thumbnail,omitempty"`

Description string `json:"description,omitempty"`

Video string `json:"video,omitempty"`

Extension []string `json:"extension,omitempty"`

}

type ProductInfo struct {

Title string `json:"title,omitempty"`

Authors []Author `json:"authors,omitempty"`

Extensions []string `json:"extensions,omitempty"`

Rating float32 `json:"rating,omitempty"`

Reviews int64 `json:"reviews,omitempty"`

ContentRating ContentRating `json:"content_rating,omitempty"`

Downloads string `json:"downloads,omitempty"`

Thumbnail string `json:"thumbnail,omitempty"`

Offers []Offer `json:"offers,omitempty"`

}

type Author struct {

Name string `json:"name,omitempty"`

Link string `json:"link,omitempty"`

}

type ContentRating struct {

Text string `json:"text,omitempty"`

Thumbnail string `json:"thumbnail,omitempty"`

}

type Offer struct {

Text string `json:"text,omitempty"`

Link string `json:"link,omitempty"`

}

type Media struct {

Video Video `json:"video,omitempty"`

Images []string `json:"images,omitempty"`

}

type Video struct {

Thumbnail string `json:"thumbnail,omitempty"`

Link string `json:"link,omitempty"`

}

type AboutThisApp struct {

Snippet string `json:"snippet,omitempty"`

InAppPurchases string `json:"in_app_purchases,omitempty"`

ReleasedOn string `json:"released_on,omitempty"`

UpdatedOn string `json:"updated_on,omitempty"`

Downloads string `json:"downloads,omitempty"`

ContentRating string `json:"content_rating,omitempty"`

InteractiveElements string `json:"interactive_elements,omitempty"`

OfferedBy string `json:"offered_by,omitempty"`

Permissions []Permission `json:"permissions,omitempty"`

}

type Permission struct {

Type string `json:"type,omitempty"`

Details []string `json:"details,omitempty"`

}

type Badges struct {

Name string `json:"name,omitempty"`

}

type Categories struct {

Name string `json:"name,omitempty"`

Link string `json:"link,omitempty"`

CategoryID string `json:"category_id,omitempty"`

SerpapiLink string `json:"serpapi_link,omitempty"`

}

type DataSafety struct {

Text string `json:"text,omitempty"`

Subtext string `json:"subtext,omitempty"`

Link string `json:"link,omitempty"`

}

type WhatIsNew struct {

Snippet string `json:"snippet,omitempty"`

}

type Ratings struct {

Stars int `json:"stars,omitempty"`

Count int64 `json:"count,omitempty"`

}

type Review struct {

ID string `json:"id,omitempty"`

Title string `json:"title,omitempty"`

Avatar string `json:"avatar,omitempty"`

Rating float32 `json:"rating,omitempty"`

Snippet string `json:"snippet,omitempty"`

Likes int `json:"likes,omitempty"`

Date string `json:"date,omitempty"`

ISODate string `json:"iso_date,omitempty"`

}

type DeveloperContact struct {

SupportEmail string `json:"support_email,omitempty"`

}

type SimilarResults struct {

Title string `json:"title,omitempty"`

SeeMoreLink string `json:"see_more_link,omitempty"`

SeeMoreToken string `json:"see_more_token,omitempty"`

SerpapiLink string `json:"serpapi_link,omitempty"`

Items []Item `json:"items,omitempty"`

}

After defining the structs, add the github.com/json-iterator/go package, which the parser uses to read values out of nested arrays (run the command inside a module created with go mod init):

Bash

go get github.com/json-iterator/go

Parsing the response mostly means walking deeply nested arrays by index. The sample code is as follows:

Go

func parsing(respBytes []byte) (organics []OrganicResults, highlightItems [][]HighlightItem, chartOptions []ChartOption) {

wrbs := GetWrbs(respBytes)

var organicWrb []byte

i := len(wrbs)

if i > 0 {

if i > 1 {

organicWrb = wrbs[i-1]

} else {

organicWrb = wrbs[0]

}

var wrbFr [][]string

err := json.Unmarshal(organicWrb, &wrbFr)

if err != nil {

panic(fmt.Sprintf("unmarshal organic wrb: %v", err))

}

playOrganic := wrbFr[0][2]

// get real data

clazz := jsoniter.Get([]byte(playOrganic), 0, 1).ToString()

var actual []interface{}

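err = json.Unmarshal([]byte(clazz), &actual)

if err != nil {

fmt.Println("unmarshal organic payload: " + err.Error())

return organics, highlightItems, chartOptions

}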

for _, a := range actual {

marshal, err := json.Marshal(a)

if err != nil {

fmt.Println("marshal actual: " + err.Error())

continue

}

cat := jsoniter.Get(marshal, 21, 1).ToString()

if cat == "" {

// In some responses the category sits at index 22 instead of 21

cat = jsoniter.Get(marshal, 22, 1).ToString()

}

// If either lookup returned a value, this element is an organic_result; otherwise it is items_highlight or top_chart

// Highlight data is usually at index 34 and top chart data at index 27, so an empty index 34 means this element is not a highlight

if cat == "" {

highlight := jsoniter.Get(marshal, 34).ToString()

if highlight == "" {

// No highlight data: check for top chart options instead

chartsJson := jsoniter.Get(marshal, 27, 1, 0).ToString()

if chartsJson != "" {

var charts []interface{}

err := json.Unmarshal([]byte(chartsJson), &charts)

if err != nil {

fmt.Println("unmarshal charts option: " + err.Error())

continue

}

// Skip if the chart list is empty

if len(charts) > 0 {

for _, chart := range charts {

chartJson, err := json.Marshal(chart)

if err != nil {

fmt.Println("marshal chart option: " + err.Error())

continue

} else {

text := jsoniter.Get(chartJson, 0).ToString()

value := jsoniter.Get(chartJson, 1, 9, 0, 1).ToString()

chartOption := ChartOption{

Text: text,

Value: value,

}

chartOptions = append(chartOptions, chartOption)

}

}

}

}

} else {

// Parse each array element and assemble it into highlight

highs := jsoniter.Get([]byte(highlight), 0).ToString()

var high []interface{}

err := json.Unmarshal([]byte(highs), &high)

if err != nil {

fmt.Println("unmarshal highlight array: " + err.Error())

continue

}

var highItem []HighlightItem

if len(high) > 0 {

for _, v := range high {

it, err := json.Marshal(v)

if err != nil {

fmt.Println("marshal highlight item: " + err.Error())

continue

}

productId := jsoniter.Get(it, 1, 0, 0, 0).ToString()

title := jsoniter.Get(it, 1, 0, 3).ToString()

var subTitle string

var link string

nail := jsoniter.Get(it, 1, 0, 1, 3, 2).ToString()

if title == "" {

title = jsoniter.Get(it, 0, 1, 1).ToString()

link = jsoniter.Get(it, 0, 0, 4, 2).ToString()

subTitle = jsoniter.Get(it, 0, 2, 1).ToString()

} else {

link = jsoniter.Get(it, 1, 0, 10, 3).ToString()

subTitle = jsoniter.Get(it, 1, 2, 1).ToString()

}

highlightItem := HighlightItem{

Title: title,

Subtitle: subTitle,

Link: "https://play.google.com" + link,

ProductID: productId,

SerpapiLink: "",

Thumbnail: nail,

}

highItem = append(highItem, highlightItem)

}

}

highlightItems = append(highlightItems, highItem)

}

} else {

// assemble organic_result

var organicResult OrganicResults

organicsJson := jsoniter.Get(marshal, 21, 0).ToString()

var organic []interface{}

var items []Item

// Some elements have 23 entries, in which case the data is at index 22 instead of 21

if organicsJson == "" {

organicsJson = jsoniter.Get(marshal, 22, 0).ToString()

}

err := json.Unmarshal([]byte(organicsJson), &organic)

if err != nil {

fmt.Println("unmarshal organic results: " + err.Error())

continue

}

title := jsoniter.Get([]byte(cat), 0).ToString()

organicResult.Title = title

items = assemOrganic(organic)

if len(items) > 0 {

organicResult.Item = items

}

// Collect this organic section

organics = append(organics, organicResult)

}

}

return organics, highlightItems, chartOptions

} else {

return organics, highlightItems, chartOptions

}

}

func GetWrbs(respBytes []byte) [][]byte {

// Split []byte by line

lines := bytes.Split(respBytes, []byte("\n"))

var wrbs [][]byte

// Traverse each line

for _, line := range lines {

// Check whether it starts with [["wrb.fr", and the actual data starts with this

if bytes.HasPrefix(line, []byte(`[["wrb.fr"`)) {

wrbs = append(wrbs, line)

}

}

return wrbs

}

func assemOrganic(organic []interface{}) []Item {

var items []Item

if len(organic) > 0 {

for _, org := range organic {

orgMarsh, err := json.Marshal(org)

if err != nil {

fmt.Println("marshal organic: " + err.Error())

continue

}

title := jsoniter.Get(orgMarsh, 3).ToString()

var link, productId, author, category, download, video, thumbnail, desc string

var rating float64

if title == "" {

// In the 23-element case the item is wrapped one level deeper, so every path starts with an extra 0

author = jsoniter.Get(orgMarsh, 0, 14).ToString()

link = jsoniter.Get(orgMarsh, 0, 10, 4, 2).ToString()

productId = jsoniter.Get(orgMarsh, 0, 0, 0).ToString()

rating = jsoniter.Get(orgMarsh, 0, 4, 0).ToFloat64()

title = jsoniter.Get(orgMarsh, 0, 3).ToString()

category = jsoniter.Get(orgMarsh, 0, 5).ToString()

download = jsoniter.Get(orgMarsh, 0, 15).ToString()

video = jsoniter.Get(orgMarsh, 0, 12, 0, 0, 3, 2).ToString()

thumbnail = jsoniter.Get(orgMarsh, 0, 1, 3, 2).ToString()

desc = jsoniter.Get(orgMarsh, 0, 13, 1).ToString()

} else {

link = jsoniter.Get(orgMarsh, 10, 4, 2).ToString()

productId = jsoniter.Get(orgMarsh, 0, 0).ToString()

rating = jsoniter.Get(orgMarsh, 4, 0).ToFloat64()

author = jsoniter.Get(orgMarsh, 14).ToString()

category = jsoniter.Get(orgMarsh, 5).ToString()

download = jsoniter.Get(orgMarsh, 15).ToString()

video = jsoniter.Get(orgMarsh, 12, 0, 0, 3, 2).ToString()

thumbnail = jsoniter.Get(orgMarsh, 1, 3, 2).ToString()

desc = jsoniter.Get(orgMarsh, 13, 1).ToString()

}

item := Item{

Title: title,

Link: "https://play.google.com" + link,

ProductID: productId,

SerpapiLink: "",

Rating: rating,

Author: author,

Category: category,

Downloads: download,

Thumbnail: thumbnail,

Description: desc,

Video: video,

}

items = append(items, item)

}

}

return items

}

Step 3. Merge the results

Now combine the request and the parsing step in the main function. The code is as follows:

Go

import (

"bytes"

"encoding/json"

"fmt"

jsoniter "github.com/json-iterator/go"

"html"

"io"

"log"

"net/http"

"net/url"

"os"

"regexp"

"strings"

"time"

)

func main() {

// Build Query String Parameters

baseURL := buildUrl()

// Build Form Data

data := buildFormData()

client := &http.Client{}

req, err := http.NewRequest("POST", baseURL.String(), data)

if err != nil {

log.Fatal(err)

}

// Set Headers

setHeaders(req)

resp, err := client.Do(req)

if err != nil {

log.Fatal(err)

}

defer resp.Body.Close()

bodyText, err := io.ReadAll(resp.Body)

if err != nil {

log.Fatal(err)

}

// Save the raw response to a local file for debugging

if err := os.WriteFile("response.txt", bodyText, 0o644); err != nil {

log.Fatal(err)

}

// parse data

organics, items, options := parsing(bodyText)

response := Response{

ChartOption: options,

HighlightItem: items,

OrganicResults: organics,

}

marshal, err := json.Marshal(response)

returnFile, err := os.Create("return.json")

_, err = returnFile.WriteString(string(marshal))

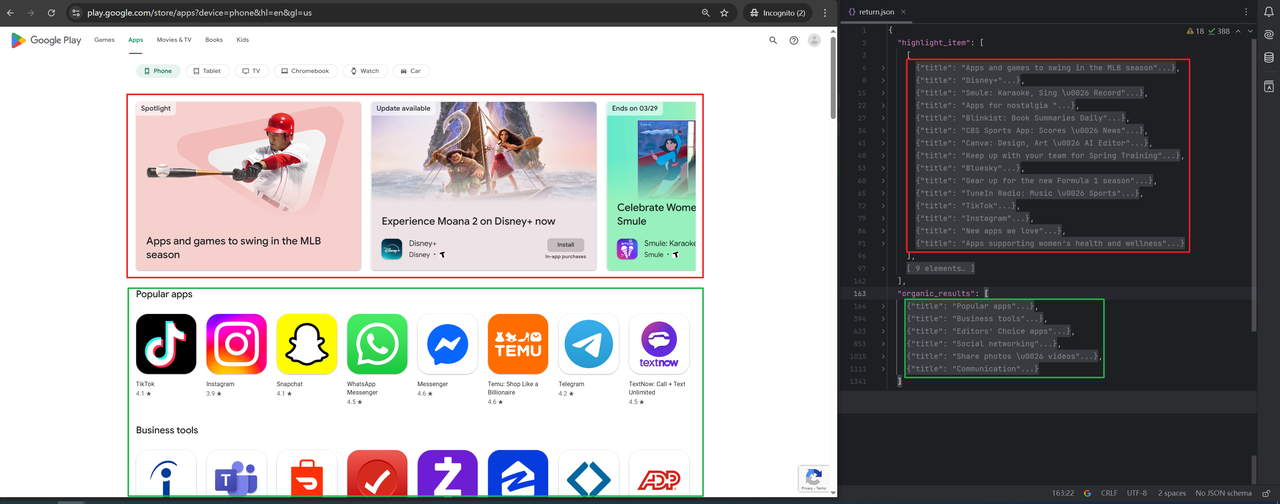

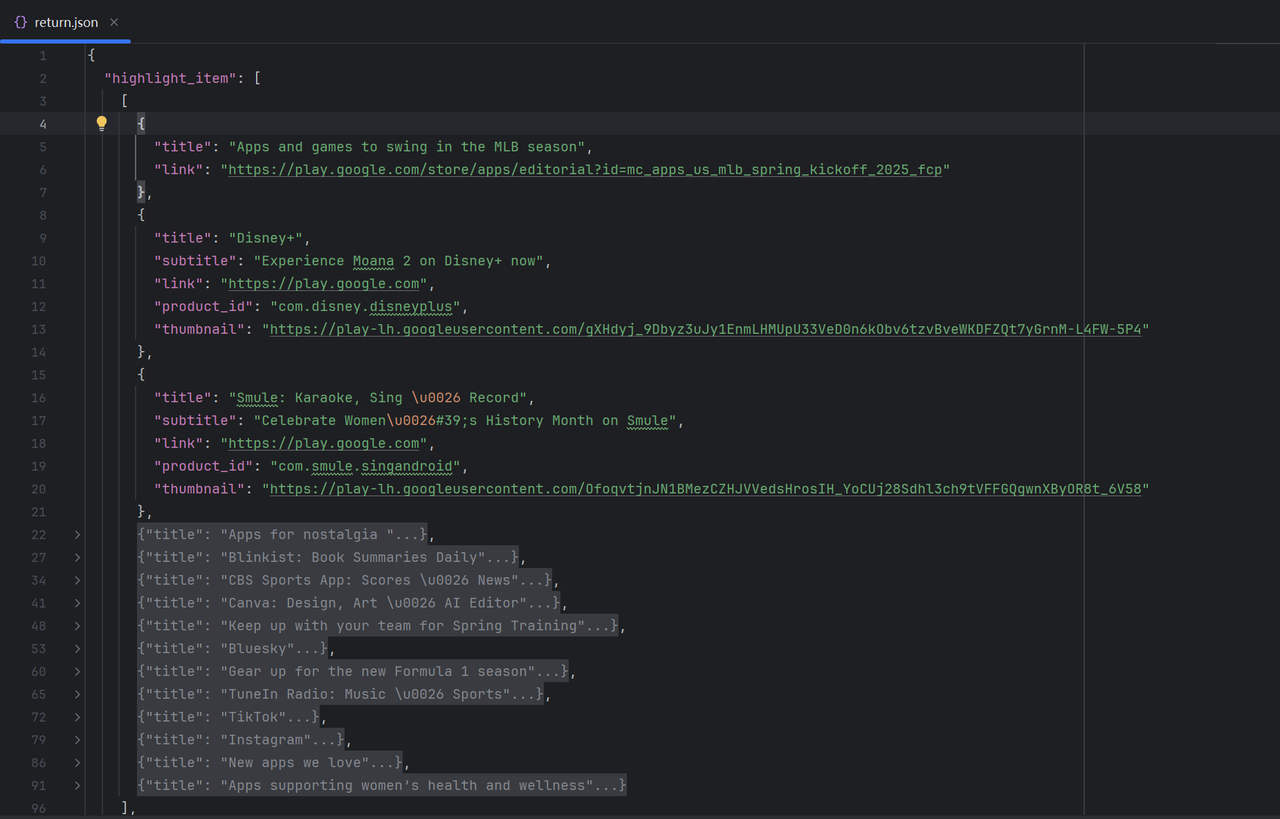

}When you start the method, you will get two files, one response.txt (original data) and one return.json (parsed data). The following is an example of the comparison between return.json and web page data:

Scrape product reviews data

Step 1. Get the original data

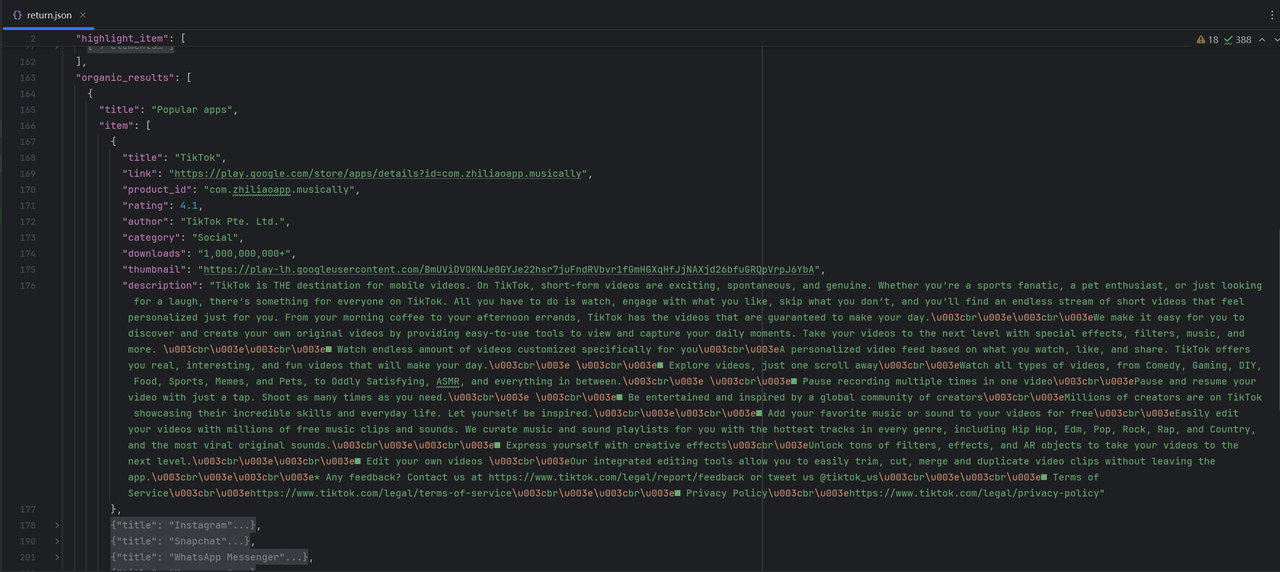

This works the same way as scraping app data: only the Query String Parameters and Form Data change. The example uses TikTok (package name com.zhiliaoapp.musically).

Example of modifying Query String Parameters:

Go

func buildUrl() *url.URL {

baseURL := &url.URL{

Scheme: "https",

Host: "play.google.com",

Path: "/_/PlayStoreUi/data/batchexecute",

}

// Build Query String Parameters

params := url.Values{}

params.Add("rpcids", "CLXjtf,A6yuRe,Ws7gDc,ZittHe,yowZ5,ag2B9c,e7uDs,oCPfdb")

params.Add("source-path", "/store/apps/details")

params.Add("f.sid", "8261825727580601148")

params.Add("bl", "boq_playuiserver_20250318.09_p0")

params.Add("hl", "en")

params.Add("gl", "us")

params.Add("authuser", "")

params.Add("soc-app", "121")

params.Add("soc-platform", "1")

params.Add("soc-device", "1")

params.Add("_reqid", "143380")

params.Add("rt", "c")

baseURL.RawQuery = params.Encode()

return baseURL

}
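buildUrl hardcodes every parameter. The rpcids and source-path values come from the request captured in the browser, and f.sid, bl, and _reqid look session- or build-specific, so they may need to be re-captured if requests start failing (an assumption, not documented behavior). The following sketch keeps those captured values but makes the rpcids, the language (hl), and the country (gl) configurable; buildBatchURL is a hypothetical name:

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// buildBatchURL is a hypothetical variant of buildUrl that parameterizes the
// rpcids, language (hl) and country (gl). The other values are copied from
// the captured request and may go stale.
func buildBatchURL(rpcids []string, sourcePath, hl, gl string) *url.URL {
	params := url.Values{}
	params.Set("rpcids", strings.Join(rpcids, ","))
	params.Set("source-path", sourcePath)
	params.Set("f.sid", "8261825727580601148")
	params.Set("bl", "boq_playuiserver_20250318.09_p0")
	params.Set("hl", hl)
	params.Set("gl", gl)
	params.Set("authuser", "")
	params.Set("soc-app", "121")
	params.Set("soc-platform", "1")
	params.Set("soc-device", "1")
	params.Set("_reqid", "143380")
	params.Set("rt", "c")
	return &url.URL{
		Scheme:   "https",
		Host:     "play.google.com",
		Path:     "/_/PlayStoreUi/data/batchexecute",
		RawQuery: params.Encode(),
	}
}

func main() {
	// Request only the product details and reviews, in German for Germany.
	u := buildBatchURL([]string{"Ws7gDc", "oCPfdb"}, "/store/apps/details", "de", "de")
	fmt.Println(u.String())
}
```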

Then modify the Form Data as follows:

Go

func buildFormData() *strings.Reader {

// Build Form Data. A raw string literal (backticks) keeps the escaped inner JSON intact;

// a double-quoted Go string cannot contain these unescaped quotes

var freq = `[[["CLXjtf","[[\"com.zhiliaoapp.musically\",7]]",null,"1"],["A6yuRe","[[\"com.zhiliaoapp.musically\",7]]",null,"3"],["Ws7gDc","[null,null,[[1,9,10,11,13,14,19,20,38,43,47,49,52,58,59,63,69,70,73,74,75,78,79,80,91,92,95,96,97,100,101,103,106,112,119,129,137,139,141,145,146,149,151,155,169]],[[[1,null,1],null,[[[]]],null,null,null,null,[null,2],null,null,null,null,null,null,null,null,null,null,null,null,null,null,[1]],[null,[[[]]],null,null,[1]],[null,[[[]]],null,[1]],[null,[[[]]]],null,null,null,null,[[[[]]]],[[[[]]]]],null,[[\"com.zhiliaoapp.musically\",7]]]",null,"5"],["ZittHe","[[null,[[3,[10]],null,null,[184]],[\"com.zhiliaoapp.musically\",7]]]",null,"7"],["yowZ5","[[null,[[3,[10]],null,null,[184]],[\"com.zhiliaoapp.musically\",7]]]",null,"9"],["ag2B9c","[[null,[\"com.zhiliaoapp.musically\",7],null,[[3,[6]],null,null,[1,8]]],[1]]",null,"11"],["e7uDs","[[\"com.zhiliaoapp.musically\",7]]",null,"13"],["Ws7gDc","[null,null,[[52]],[[null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,null,[2]]],null,[[\"com.zhiliaoapp.musically\",7]]]",null,"15"],["oCPfdb","[null,[2,null,[20],null,[null,null,null,null,null,null,null,null,2]],[\"com.zhiliaoapp.musically\",7]]",null,"17"]]]`

// URL-encode the value for the form-encoded request body

var data = strings.NewReader("f.req=" + url.QueryEscape(freq))

return data

}

The following is an example of the returned result:

Step 2. Data analysis
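Both this step and the app parsing rely on the layout of each [["wrb.fr", ...]] line collected by GetWrbs: index 1 holds the rpcid and index 2 holds the payload as a JSON-encoded string, so the payload is decoded twice before jsoniter.Get walks it by index. The following standard-library sketch shows the same two ideas. decodeWrb and getPath are hypothetical helpers, decoding the envelope into json.RawMessage is a swapped-in choice (the original code uses [][]string and jsoniter), and the sample line is made up for illustration:

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// decodeWrb splits one [["wrb.fr", rpcid, payload, ...]] line into its rpcid
// and decoded payload. json.RawMessage keeps null or non-string elements in
// the envelope from breaking the decode.
func decodeWrb(line []byte) (rpcid string, payload []any, err error) {
	var envelope [][]json.RawMessage
	if err = json.Unmarshal(line, &envelope); err != nil {
		return "", nil, err
	}
	if len(envelope) == 0 || len(envelope[0]) < 3 {
		return "", nil, errors.New("unexpected wrb.fr layout")
	}
	if err = json.Unmarshal(envelope[0][1], &rpcid); err != nil {
		return "", nil, err
	}
	// First decode: the payload is a JSON string (null leaves it empty).
	var inner string
	if err = json.Unmarshal(envelope[0][2], &inner); err != nil {
		return "", nil, err
	}
	// Second decode: the string itself contains a JSON array.
	if err = json.Unmarshal([]byte(inner), &payload); err != nil {
		return "", nil, err
	}
	return rpcid, payload, nil
}

// getPath walks nested arrays by index, similar to jsoniter.Get(data, 1, 2, 0),
// and reports false instead of panicking when an index is missing.
func getPath(v any, path ...int) (any, bool) {
	for _, i := range path {
		arr, ok := v.([]any)
		if !ok || i < 0 || i >= len(arr) {
			return nil, false
		}
		v = arr[i]
	}
	return v, true
}

func main() {
	// Made-up line with the same layout as a real wrb.fr line.
	line := []byte(`[["wrb.fr","oCPfdb","[[[\"id-1\",[\"Jane\"],5]]]",null,null,null,"generic"]]`)
	rpcid, payload, err := decodeWrb(line)
	if err != nil {
		fmt.Println(err)
		return
	}
	if name, ok := getPath(payload, 0, 0, 1, 0); ok {
		fmt.Println(rpcid, name) // prints: oCPfdb Jane
	}
}
```

Checking the boolean from getPath plays the same role as the `== ""` checks in the original code, which rely on jsoniter.Get returning an empty value for a missing path.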

As with the app data, the response still has to be parsed. The parsing code is as follows:

Go

// parsing product

func parseProductInfo(wrbs [][]byte) (pctInfo ProductInfo, media Media, atApp AboutThisApp,

badges []Badges, cgs []Categories, updateOn string, dataSafety []DataSafety, what WhatIsNew,

ratings []Ratings, reviews []Review, contact DeveloperContact, apps []SimilarResults) {

if len(wrbs) > 0 {

var ratingSym = 0

for _, wrb := range wrbs {

rpcid := jsoniter.Get(wrb, 0, 1).ToString()

switch rpcid {

case "Ws7gDc":

data := jsoniter.Get(wrb, 0, 2).ToString()

dataBytes := []byte(data)

pctInfo = getProductIndo(dataBytes)

media = getMedia(dataBytes)

atApp = getAboutThisApp(dataBytes)

badges = getBadges(dataBytes)

cgs = getCategories(dataBytes)

updateOn = jsoniter.Get(dataBytes, 1, 2, 145, 0, 0).ToString()

dataSafety = getDataSafety(dataBytes)

what = getWhatIsNew(dataBytes)

if ratingSym < 1 {

ratings = getRatings(dataBytes)

ratingSym = ratingSym + 1

}

contact = DeveloperContact{

SupportEmail: jsoniter.Get(dataBytes, 1, 2, 69, 1, 0).ToString(),

}

case "ag2B9c":

data := jsoniter.Get(wrb, 0, 2).ToString()

dataBytes := []byte(data)

apps = getApps(dataBytes)

case "oCPfdb":

data := jsoniter.Get(wrb, 0, 2).ToString()

dataBytes := []byte(data)

reviews = getReviews(dataBytes)

default:

continue

}

}

}

return pctInfo, media, atApp, badges, cgs, updateOn, dataSafety, what, ratings, reviews, contact, apps

}

func getReviews(dataBytes []byte) []Review {

var reviews []Review

reviewStr := jsoniter.Get(dataBytes, 0).ToString()

var reviewMarsh []interface{}

err := json.Unmarshal([]byte(reviewStr), &reviewMarsh)

if err != nil {

return reviews

}

for _, it := range reviewMarsh {

marshal, err := json.Marshal(it)

if err != nil {

fmt.Println(err)

continue

}

timestamp := jsoniter.Get(marshal, 5, 0).ToInt64()

t := time.Unix(timestamp, 0)

formattedDate := t.Format("January 2, 2006")

formattedUTC := t.UTC().Format(time.RFC3339)

reviews = append(reviews, Review{

ID: jsoniter.Get(marshal, 0).ToString(),

Title: jsoniter.Get(marshal, 1, 0).ToString(),

Avatar: jsoniter.Get(marshal, 1, 1, 3, 2).ToString(),

Rating: jsoniter.Get(marshal, 2).ToFloat32(),

Snippet: jsoniter.Get(marshal, 4).ToString(),

Likes: jsoniter.Get(marshal, 6).ToInt(),

Date: formattedDate,

ISODate: formattedUTC,

})

}

return reviews

}

func getApps(dataBytes []byte) (similarResults []SimilarResults) {

moreAppStr := jsoniter.Get(dataBytes, 1, 1, 1, 21).ToString()

SimilarAppStr := jsoniter.Get(dataBytes, 1, 1, 0, 21).ToString()

link := jsoniter.Get([]byte(moreAppStr), 1, 2, 4, 2).ToString()

moreAppItems := getProductItems(moreAppStr)

SimilarAppItems := getProductItems(SimilarAppStr)

moreApp := SimilarResults{

Title: jsoniter.Get([]byte(moreAppStr), 1, 0).ToString(),

SeeMoreLink: GooglePlayUrl + link,

SeeMoreToken: jsoniter.Get([]byte(moreAppStr), 1, 3, 1).ToString(),

Items: moreAppItems,

}

similarApp := SimilarResults{

Title: jsoniter.Get([]byte(SimilarAppStr), 1, 0).ToString(),

SeeMoreLink: GooglePlayUrl + jsoniter.Get([]byte(SimilarAppStr), 1, 2, 4, 2).ToString(),

SeeMoreToken: jsoniter.Get([]byte(SimilarAppStr), 1, 3, 1).ToString(),

SerpapiLink: "",

Items: SimilarAppItems,

}

similarResults = append(similarResults, moreApp, similarApp)

return similarResults

}

func getProductItems(similarStr string) (similarItems []Item) {

similarArrStr := jsoniter.Get([]byte(similarStr), 0).ToString()

var similarArr []interface{}

err := json.Unmarshal([]byte(similarArrStr), &similarArr)

if err != nil {

return similarItems

}

for _, it := range similarArr {

marshal, err := json.Marshal(it)

if err != nil {

fmt.Println(err)

continue

}

similarItems = append(similarItems, Item{

Title: jsoniter.Get(marshal, 3).ToString(),

Link: GooglePlayUrl + jsoniter.Get(marshal, 10, 4, 2).ToString(),

ProductID: jsoniter.Get(marshal, 0, 0).ToString(),

SerpapiLink: "",

Rating: jsoniter.Get(marshal, 4, 0).ToFloat64(),

Thumbnail: jsoniter.Get(marshal, 1, 3, 2).ToString(),

Extension: []string{jsoniter.Get(marshal, 14).ToString()},

})

}

return similarItems

}

func getRatings(dataBytes []byte) []Ratings {

var ratings []Ratings

rateStr := jsoniter.Get(dataBytes, 1, 2, 51, 1).ToString()

var rateStrMarsh []interface{}

err := json.Unmarshal([]byte(rateStr), &rateStrMarsh)

if err != nil {

fmt.Println(err)

return ratings

}

for i, marsh := range rateStrMarsh {

if marsh == nil {

continue

}

marshal, err := json.Marshal(marsh)

if err != nil {

fmt.Println(err)

continue

}

count := jsoniter.Get(marshal, 1).ToInt64()

ratings = append(ratings, Ratings{

Stars: i,

Count: count,

})

}

return ratings

}

func getBadges(dataBytes []byte) []Badges {

var badges []Badges

badge2 := jsoniter.Get(dataBytes, 1, 2, 58, 0).ToString()

badge1 := jsoniter.Get(dataBytes, 1, 2, 58, 2).ToString()

return append(badges, Badges{Name: badge1 + " " + badge2})

}

func getCategories(dataBytes []byte) (categories []Categories) {

cgsStr := jsoniter.Get(dataBytes, 1, 2, 79, 0).ToString()

var cgsStrMarsh []interface{}

err := json.Unmarshal([]byte(cgsStr), &cgsStrMarsh)

if err != nil {

return categories

}

for _, item := range cgsStrMarsh {

marshal, err := json.Marshal(item)

if err != nil {

fmt.Println(err)

continue

}

categories = append(categories, Categories{

Name: jsoniter.Get(marshal, 0).ToString(),

Link: GooglePlayUrl + jsoniter.Get(marshal, 1, 4, 2).ToString(),

CategoryID: jsoniter.Get(marshal, 2).ToString(),

SerpapiLink: "",

})

}

return categories

}

func getDataSafety(dataBytes []byte) (safeties []DataSafety) {

dataStr := jsoniter.Get(dataBytes, 1, 2, 136, 1).ToString()

var dataStrMarsh []interface{}

err := json.Unmarshal([]byte(dataStr), &dataStrMarsh)

if err != nil {

return safeties

}

for _, item := range dataStrMarsh {

marshal, err := json.Marshal(item)

if err != nil {

fmt.Println(err)

continue

}

subtext := jsoniter.Get(marshal, 2, 1).ToString()

unescapeString := html.UnescapeString(subtext)

var link = ""

if strings.Contains(unescapeString, "href") {

hrefPattern := `href=["']([^"']+)["']`

pattern := `<a[^>]*>(.*?)<\/a>\s*(.*)`

hrefRegex := regexp.MustCompile(hrefPattern)

aRegex := regexp.MustCompile(pattern)

hrefMatch := hrefRegex.FindStringSubmatch(unescapeString)

match := aRegex.FindStringSubmatch(unescapeString)

if len(hrefMatch) < 2 || len(match) < 2 {

fmt.Println("data safety: could not extract link from subtext")

continue

}

if len(match) > 2 {

subtext = match[1] + match[2]

} else {

subtext = match[1]

}

link = hrefMatch[1]

}

safeties = append(safeties, DataSafety{

Text: jsoniter.Get(marshal, 1).ToString(),

Subtext: subtext,

Link: link,

})

}

return safeties

}

func getWhatIsNew(dataBytes []byte) WhatIsNew {

return WhatIsNew{

Snippet: jsoniter.Get(dataBytes, 1, 2, 144, 1, 1).ToString(),

}

}

func getAboutThisApp(dataBytes []byte) (about AboutThisApp) {

var permissions []Permission

detailsStr := jsoniter.Get(dataBytes, 1, 2, 74, 2, 0).ToString()

var detailStrMarsh []interface{}

err := json.Unmarshal([]byte(detailsStr), &detailStrMarsh)

if err != nil {

return about

}

for _, item := range detailStrMarsh {

marshal, err := json.Marshal(item)

if err != nil {

fmt.Println(err)

continue

}

detStr := jsoniter.Get(marshal, 2).ToString()

var detStrArr []interface{}

err = json.Unmarshal([]byte(detStr), &detStrArr)

if err != nil {

fmt.Println(err)

continue

}

var details []string

for _, item := range detStrArr {

it, err := json.Marshal(item)

if err != nil {

fmt.Println(err)

continue

}

det := jsoniter.Get(it, 1).ToString()

details = append(details, det)

}

var permission = Permission{

Type: jsoniter.Get(marshal, 0).ToString(),

Details: details,

}

permissions = append(permissions, permission)

}

return AboutThisApp{

Snippet: jsoniter.Get(dataBytes, 1, 2, 72, 0, 1).ToString(),

InAppPurchases: jsoniter.Get(dataBytes, 1, 2, 19, 0).ToString(),

ReleasedOn: jsoniter.Get(dataBytes, 1, 2, 10, 0).ToString(),

UpdatedOn: jsoniter.Get(dataBytes, 1, 2, 145, 0, 0).ToString(),

Downloads: jsoniter.Get(dataBytes, 1, 2, 13, 0).ToString(),

ContentRating: jsoniter.Get(dataBytes, 1, 2, 9, 0).ToString(),

InteractiveElements: jsoniter.Get(dataBytes, 1, 2, 9, 3, 1).ToString(),

OfferedBy: jsoniter.Get(dataBytes, 1, 2, 37, 0).ToString(),

Permissions: permissions,

}

}

func getMedia(dataBytes []byte) (media Media) {

var video = Video{

Thumbnail: jsoniter.Get(dataBytes, 1, 2, 100, 0, 1, 3, 2).ToString(),

Link: jsoniter.Get(dataBytes, 1, 2, 100, 0, 0, 3, 2).ToString(),

}

var images []string

imgStr := jsoniter.Get(dataBytes, 1, 2, 78, 0).ToString()

var imgStrMarsh []interface{}

err := json.Unmarshal([]byte(imgStr), &imgStrMarsh)

if err != nil {

return media

}

for _, marsh := range imgStrMarsh {

marshal, err := json.Marshal(marsh)

if err != nil {

fmt.Println(err)

continue

}

imgUrl := jsoniter.Get(marshal, 3, 2).ToString()

images = append(images, imgUrl)

}

media = Media{

Video: video,

Images: images,

}

return media

}

func getProductIndo(dataByte []byte) (pctInfo ProductInfo) {

rating := jsoniter.Get(dataByte, 1, 2, 51, 0, 0).ToFloat32()

title := jsoniter.Get(dataByte, 1, 2, 0, 0).ToString()

author := Author{

Name: jsoniter.Get(dataByte, 1, 2, 68, 0).ToString(),

Link: GooglePlayUrl + jsoniter.Get(dataByte, 1, 2, 68, 1, 4, 2).ToString(),

}

extension := jsoniter.Get(dataByte, 1, 2, 48, 0).ToString()

reviews := jsoniter.Get(dataByte, 1, 2, 51, 21).ToInt64()

contentRating := ContentRating{

Text: jsoniter.Get(dataByte, 1, 2, 9, 0).ToString(),

Thumbnail: jsoniter.Get(dataByte, 1, 2, 9, 1, 3, 2).ToString(),

}

downloads := jsoniter.Get(dataByte, 1, 2, 13, 2).ToString()

thumbnail := jsoniter.Get(dataByte, 1, 2, 95, 0, 3, 2).ToString()

offersStr := jsoniter.Get(dataByte, 1, 2, 57, 0).ToString()

var offersUnMarsh []interface{}

err := json.Unmarshal([]byte(offersStr), &offersUnMarsh)

if err != nil {

return pctInfo

}

var offers []Offer

for _, marsh := range offersUnMarsh {

marshal, err := json.Marshal(marsh)

if err != nil {

fmt.Println("marshal offer: " + err.Error())

continue

}

text := jsoniter.Get(marshal, 1, 0).ToString()

link := jsoniter.Get(marshal, 0, 0, 6, 5, 2).ToString()

offers = append(offers, Offer{text, link})

}

pctInfo = ProductInfo{

Title: title,

Authors: []Author{author},

Extensions: []string{extension},

Rating: rating,

Reviews: reviews,

ContentRating: contentRating,

Downloads: downloads,

Thumbnail: thumbnail,

Offers: offers,

}

return pctInfo

}

Step 3. Data merging

Modify the main function so that it calls parseProductInfo. The code is as follows:

Go

import (

"bytes"

"encoding/json"

"fmt"

jsoniter "github.com/json-iterator/go"

"html"

"io"

"log"

"net/http"

"net/url"

"os"

"regexp"

"strings"

"time"

)

var GooglePlayUrl = "https://play.google.com"

func main() {

// Build Query String Parameters

baseURL := buildUrl()

// Build Form Data

data := buildFormData()

client := &http.Client{}

req, err := http.NewRequest("POST", baseURL.String(), data)

if err != nil {

log.Fatal(err)

}

// Set Headers

setHeaders(req)

resp, err := client.Do(req)

if err != nil {

log.Fatal(err)

}

defer resp.Body.Close()

bodyText, err := io.ReadAll(resp.Body)

if err != nil {

log.Fatal(err)

}

// Save the raw response to a local file for debugging

if err := os.WriteFile("response.txt", bodyText, 0o644); err != nil {

log.Fatal(err)

}

// parse the product details, reviews, and similar apps

info, media, app, badges, cgs, on, safety, what, ratings, reviews, contact, apps := parseProductInfo(GetWrbs(bodyText))

var response = Response{

ProductInfo: &info,

Media: &media,

AboutThisApp: &app,

Badges: badges,

Categories: cgs,

UpdatedOn: on,

DataSafety: safety,

WhatIsNew: &what,

Ratings: ratings,

Reviews: reviews,

DeveloperContact: &contact,

SimilarResults: apps,

}

marshal, err := json.Marshal(response)

returnFile, err := os.Create("return.json")

_, err = returnFile.WriteString(string(marshal))

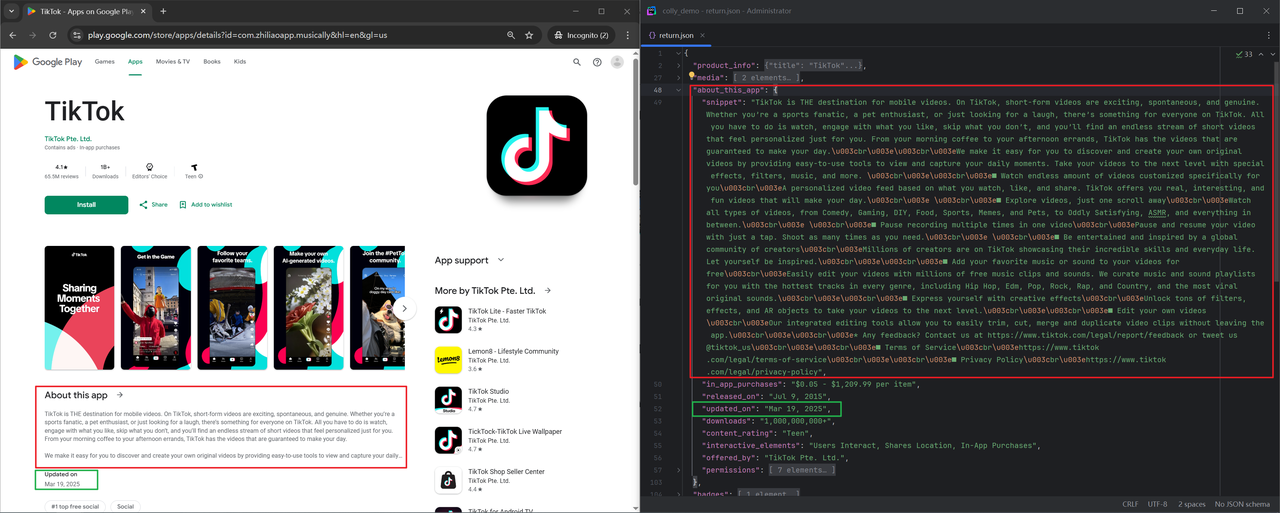

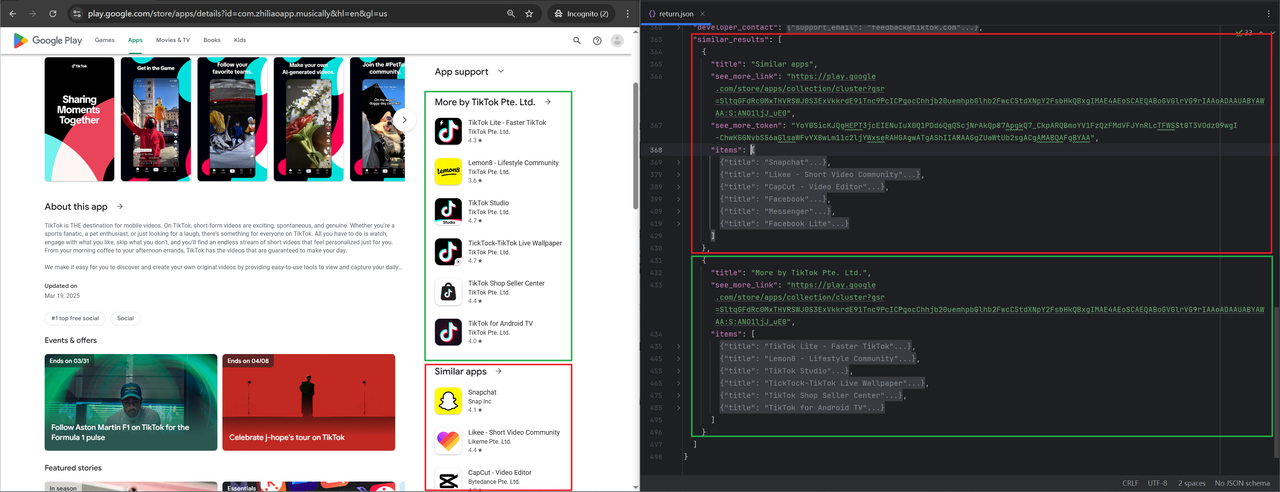

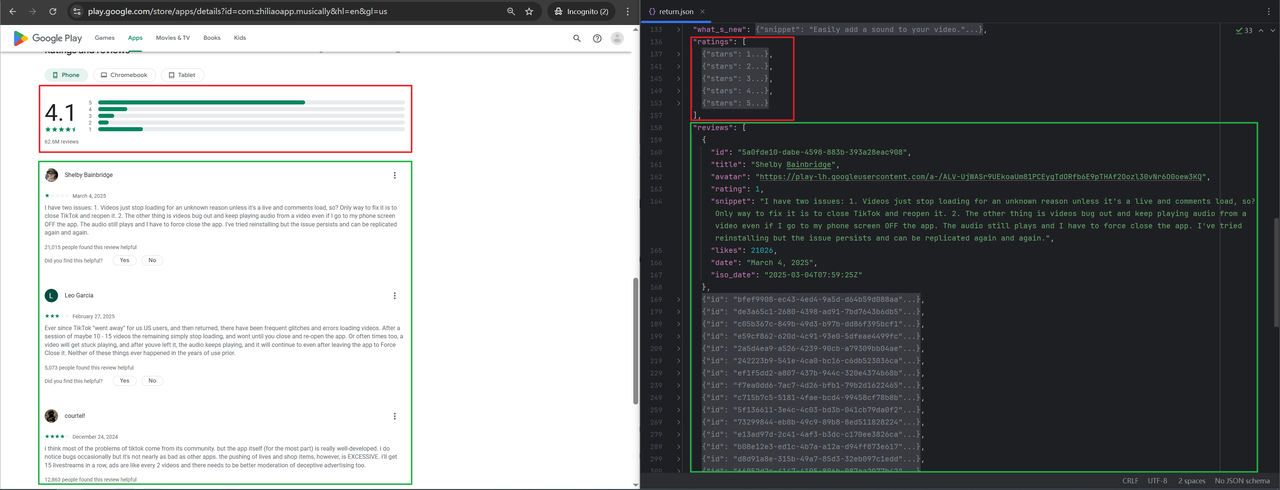

}When you start the method, you will get two files, one response.txt (raw data) and one return.json (parsed data). The following is an example of the comparison between return.json and web page data:

Let's expand the data for comparison. The comparison chart is as follows:

Scrapeless Scraping API - More Effective Method

Why use Scraping API?

There are several reasons why you might want to use an API:

- No need to create a parser from scratch and maintain it.

- Bypass Google's blocking: CAPTCHAs and IP blocks are handled for you.

- No need to pay for proxies and CAPTCHA solvers.

- No need to use browser automation.

The Scrapeless API handles everything on the backend. Responses arrive in under about 3.3 seconds per request, and because no browser automation is involved, it is much faster than a browser-based scraper.

Is this Google Play Store API free?

Yes. Scrapeless gives you $2 of free credit, which you can claim as soon as you sign up. With Google Play Scraper, you can scrape thousands of results for free; the exact number depends on the complexity of your input: URL, review, or keyword.

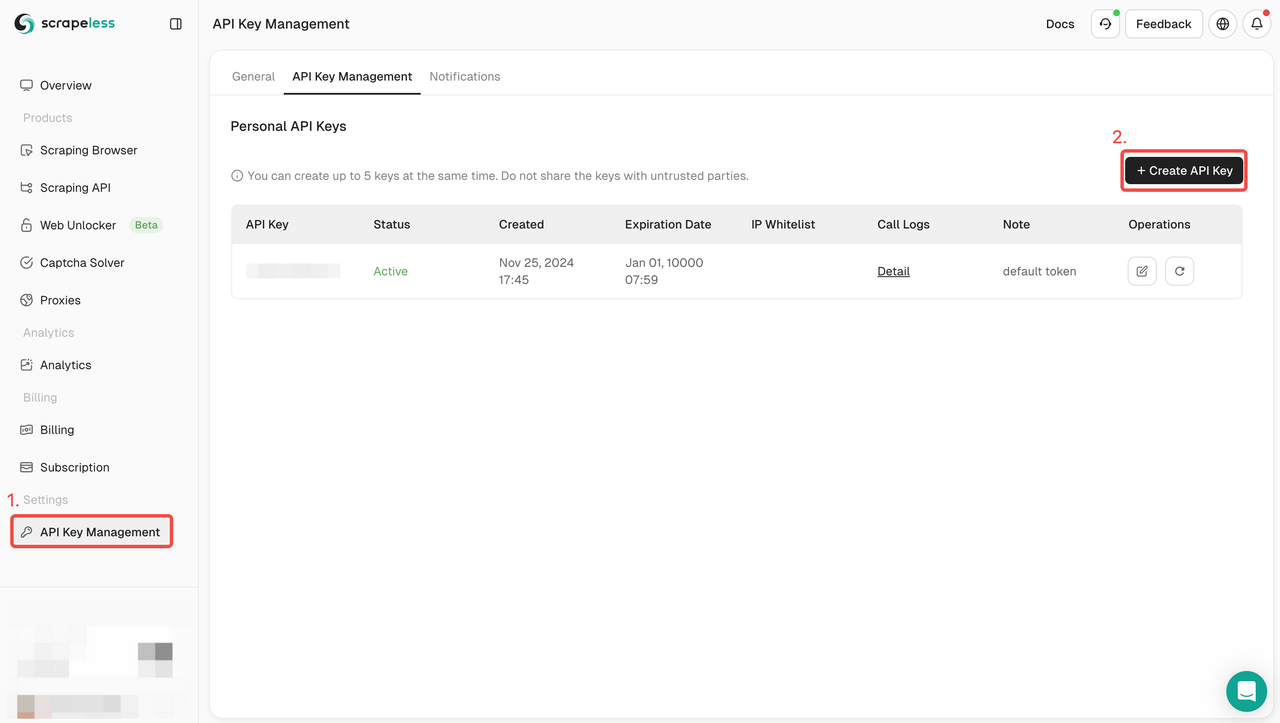

Step 1. Create your API Token

To get started, you’ll need to obtain your API Key from the Scrapeless Dashboard:

- Log in to the Scrapeless Dashboard.

- Navigate to API Key Management.

- Click Create to generate your unique API Key.

- Once created, simply click on the API Key to copy it.

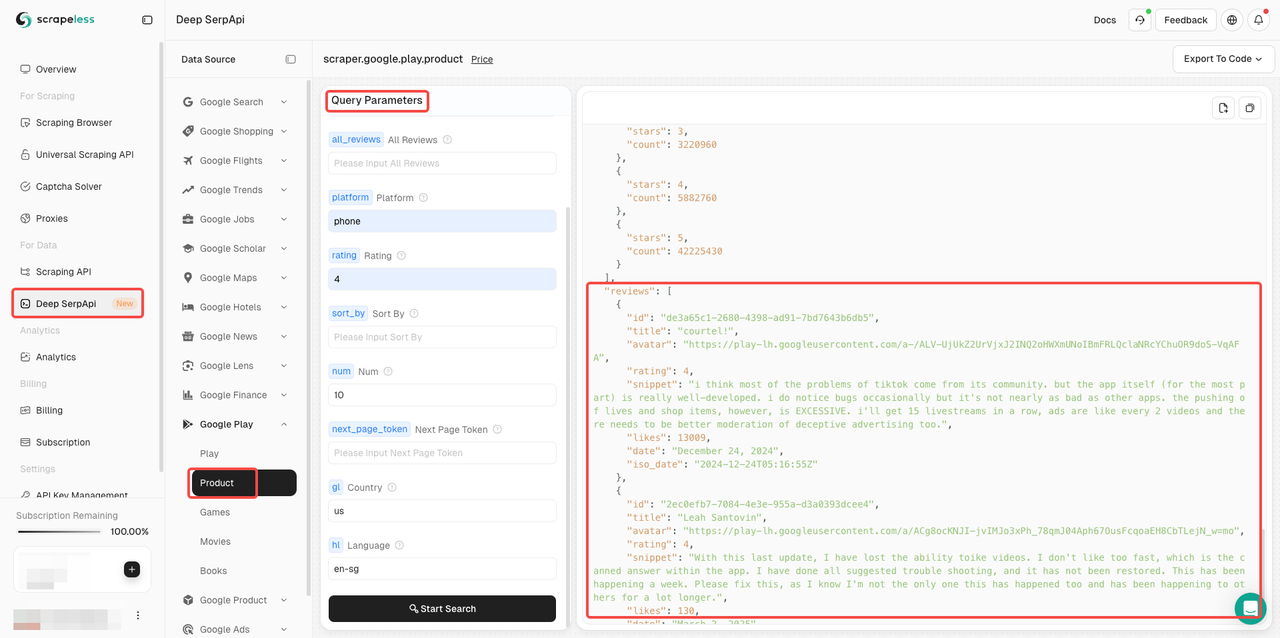

Step 2. Go to Deep SerpApi

After creating the API token, click Deep SerpApi.

- Find the Google Play actor and select "Product". You can also scrape Play, Games, Movies, and Books, depending on your needs.

- Add the query parameters you need to complete the request configuration.

- Click Start Search and wait for the results to load.

For specific parameter filling requirements, please refer to our API documentation.

The Ending Thoughts

This article showed how to scrape the Google Play Store using Golang. By following this tutorial, you can scrape both app data and review data. Scrapeless keeps adding newer, more reliable, and more feature-rich scrapers, so feel free to explore our other Google scrapers.

FAQs

Does Google Play Store have API?

Unfortunately, Google Play does not offer an official API for retrieving games, reviews, and developer data in general. The Google Play Developer API exists, but it only covers apps published under your own developer account, so it cannot be used to collect data about other apps. The most practical alternative is a third-party API that is affordable and responds quickly.

You can also use webhooks to trigger actions when events occur; for example, you can be notified every time the Play Store Scraper successfully completes a run.

Is it legal to scrape data from Google Play?

Please note that personal data such as names are protected by the EU GDPR and other regulations around the world. You should not scrape personal data unless you have a legitimate reason. However, our API only scrapes public data on the web, so you don't have to worry about legal sanctions for using Scrapeless.

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.