How to Scrape Google News with Python

Advanced Data Extraction Specialist

Google News is a goldmine of real-time information, but scraping data from it is not always straightforward. The platform employs various anti-scraping mechanisms, dynamic content loading, and legal restrictions that can make scraping challenging. In this article, we will explore three different methods for scraping Google News with Python, and ultimately, we’ll recommend using Scrapeless to scrape Google News more efficiently.

Challenges in Scraping Google News with Python

Before diving into methods, it's important to understand the challenges involved in scraping Google News:

- Anti-Scraping Mechanisms: Google uses CAPTCHA systems, rate-limiting, and IP blocking to prevent automated scraping.

- Dynamic Content: Some of the content on Google News is loaded dynamically through JavaScript, which requires specialized tools to handle.

- Legal Considerations: Always ensure you comply with Google’s Terms of Service, which restrict automated scraping. Using official tools such as APIs can help you stay compliant.
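The Terms of Service are the binding document, but one quick automated check you can run before any fetch is the site's robots.txt. The sketch below uses Python's standard `urllib.robotparser`; the helper name `is_allowed` and the user-agent string `my-news-bot` are illustrative assumptions, and a robots.txt "allow" is not legal clearance.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_lines, user_agent, url):
    """Return True if the given robots.txt lines permit user_agent to fetch url.

    is_allowed is a hypothetical helper name used only for this example.
    """
    parser = RobotFileParser()
    parser.parse(robots_lines)  # evaluate robots.txt content you already have
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Live check (needs network): download and evaluate Google News's robots.txt
    live = RobotFileParser("https://news.google.com/robots.txt")
    live.read()
    print(live.can_fetch("my-news-bot", "https://news.google.com/search?q=technology"))
```

Parsing already-downloaded lines keeps the check testable offline; `read()` is only needed when you want the live file.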

With these challenges in mind, let's look at how we can overcome them.

Method 1: Scraping Google News with Requests & BeautifulSoup

One of the simplest ways to scrape Google News is by using the Requests library to fetch the HTML page and BeautifulSoup to parse the content. Here's an example:

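```python
import requests
from bs4 import BeautifulSoup

# Send a request to the Google News search URL.
# A browser-like User-Agent and a timeout make the request less likely
# to be rejected immediately or to hang indefinitely.
url = "https://news.google.com/search?q=technology"
headers = {"User-Agent": "Mozilla/5.0"}
response = requests.get(url, headers=headers, timeout=10)
response.raise_for_status()  # stop early on 4xx/5xx (e.g. 429 when rate-limited)

# Parse the HTML content with BeautifulSoup
soup = BeautifulSoup(response.text, "html.parser")

# Find all the headlines (Google changes its markup often; adjust the tag if this finds nothing)
headlines = soup.find_all("h3")

for headline in headlines:
    print(headline.get_text(strip=True))
```

Limitations: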

- No JavaScript Rendering: If the page loads content dynamically through JavaScript, Requests & BeautifulSoup won't be able to capture it.

- Anti-Scraping Risk: Google can easily detect and block scraping attempts if the traffic volume is too high.

Best Use Case:

This method is suitable for small-scale scraping, where data is static and does not require dynamic content loading.
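Even at small scale, spacing out requests and backing off when the server signals rate limiting lowers the chance of being blocked (it does not eliminate it). The sketch below is one way to do that with the standard library; the names `backoff_delays`, `polite_get`, and `urllib_fetch`, the choice to retry on HTTP 429 and 503, the delay values, and the User-Agent string are all assumptions for illustration.

```python
import time
import urllib.error
import urllib.request

# Assumption: treat "too many requests" and "service unavailable" as retryable
RETRYABLE_STATUSES = {429, 503}


def backoff_delays(base=2.0, factor=2.0, retries=4):
    """Return the wait (seconds) before each retry: base, base*factor, ..."""
    return [base * factor ** i for i in range(retries)]


def polite_get(url, fetch, sleep=time.sleep, retries=4):
    """Call fetch(url) -> (status, body), backing off on rate-limit responses."""
    for delay in backoff_delays(retries=retries):
        status, body = fetch(url)
        if status not in RETRYABLE_STATUSES:
            return status, body
        sleep(delay)  # wait longer after every rate-limited attempt
    return fetch(url)  # final attempt after the last wait


def urllib_fetch(url):
    """Hypothetical default fetcher built on urllib; returns (status, body)."""
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        return err.code, ""


if __name__ == "__main__":
    status, html = polite_get("https://news.google.com/search?q=technology", urllib_fetch)
    print(status, len(html))
```

Passing `fetch` and `sleep` in as parameters keeps the retry logic independent of the HTTP client, so you could plug in a `requests`-based fetcher instead.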

Method 2: Scraping Google News with Selenium

For websites like Google News, which heavily rely on JavaScript to render dynamic content, Selenium is a better option. Selenium automates browser actions and can scrape dynamically loaded content.

Here’s how you can use Selenium to scrape Google News:

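```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

# Start Chrome. Selenium 4.6+ locates a matching driver automatically
# (Selenium Manager), so executable_path is no longer needed.
driver = webdriver.Chrome()

try:
    # Open the Google News page
    driver.get("https://news.google.com/search?q=technology")

    # Wait up to 10 seconds for the JavaScript-rendered headlines to appear
    headlines = WebDriverWait(driver, 10).until(
        EC.presence_of_all_elements_located((By.XPATH, "//h3"))
    )

    for headline in headlines:
        print(headline.text)
finally:
    # Close the browser even if something above fails
    driver.quit()
```

Pros: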

- Handles Dynamic Content: Selenium can interact with pages that use JavaScript to load content.

- Browser Automation: Mimics real user behavior, reducing the chances of getting blocked.

Cons:

- Slower: Running an actual browser is resource-intensive and can be slow compared to simpler solutions.

- Detection Risk: While Selenium can avoid some basic anti-scraping measures, it is still susceptible to detection by more advanced systems.

Best Use Case:

Ideal for small-scale scraping or projects where JavaScript rendering is required, but not recommended for large-scale data collection due to its slower speed.
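Once Selenium has rendered the page, `driver.page_source` holds the resulting HTML, so the extraction step can be a separate, testable function. The sketch below uses the standard library's `html.parser` rather than BeautifulSoup; the class `HeadlineParser` and function `extract_headlines` are hypothetical names, and the default `h3` tag simply follows the examples in this article and may not match Google's current markup.

```python
from html.parser import HTMLParser


class HeadlineParser(HTMLParser):
    """Collect the whitespace-normalized text of every element with the given tag."""

    def __init__(self, tag="h3"):
        super().__init__()
        self.tag = tag
        self.inside = False   # True while reading inside a matching tag
        self.current = []     # text fragments of the headline being read
        self.headlines = []

    def handle_starttag(self, tag, attrs):
        if tag == self.tag:
            self.inside = True
            self.current = []

    def handle_endtag(self, tag):
        if tag == self.tag and self.inside:
            self.inside = False
            text = " ".join("".join(self.current).split())
            if text:
                self.headlines.append(text)

    def handle_data(self, data):
        if self.inside:
            self.current.append(data)


def extract_headlines(html, tag="h3"):
    parser = HeadlineParser(tag)
    parser.feed(html)
    parser.close()
    return parser.headlines
```

With Selenium you would call `extract_headlines(driver.page_source)` after the wait; with Requests, `extract_headlines(response.text)`.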

Method 3: Scraping Google News with Scrapeless API (Recommended Solution)

While the above methods work, they come with limitations in speed, ease of use, and maintenance. Scrapeless offers a faster, lower-maintenance way to scrape Google News at scale: you request Google News data through an API, and the service deals with anti-scraping measures and dynamic content instead of your code.

Why Scrapeless is the Best Choice:

- No Maintenance: With Scrapeless, you don’t need to worry about handling proxies, CAPTCHA, or rate-limiting.

- Faster and More Efficient: The API is optimized for speed, and you don’t need to rely on browser automation or complicated setup.

- Affordable: Scrapeless offers a pricing plan that is cost-effective for both small businesses and enterprise-level operations ($0.1 per 1K API calls).

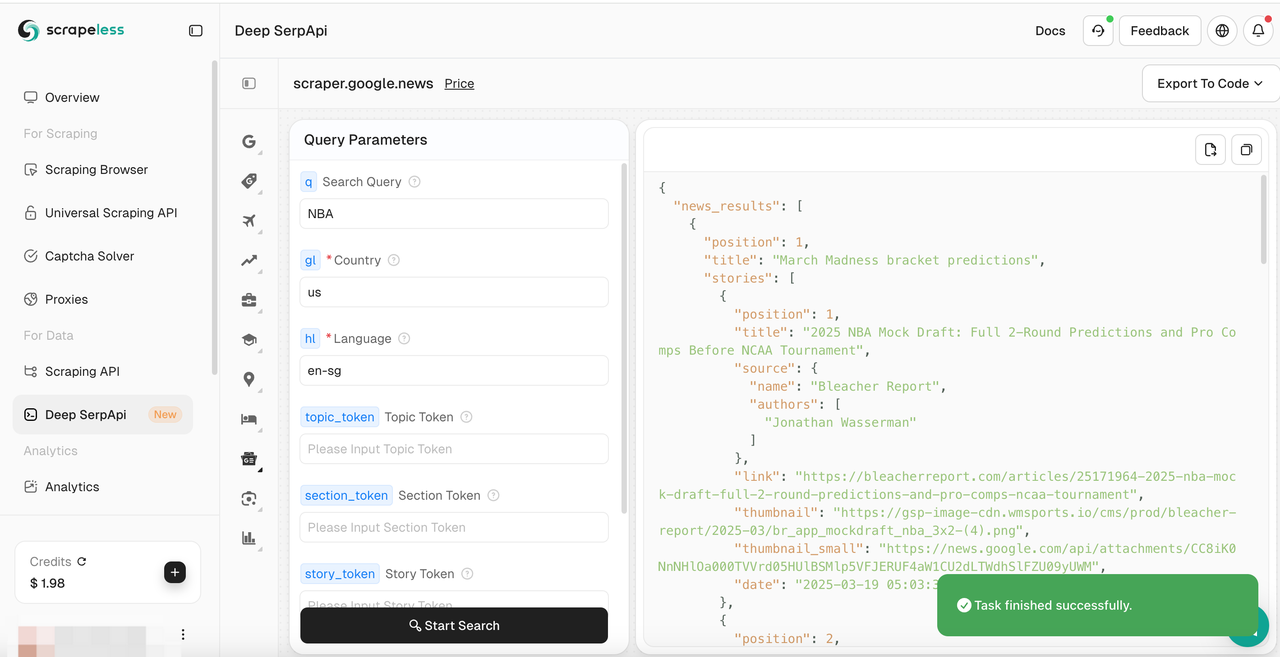

- Reliable Data: Scrapeless provides high-quality, structured data in JSON format, making it easier to integrate with your applications.
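Because the results arrive as JSON, turning them into rows for your application is a few lines of code. This article does not document the response schema, so the sketch below assumes, purely for illustration, a top-level `news_results` list whose items carry `title` and `link` keys; inspect a real response and adjust these names before relying on them. `summarize_results` is a hypothetical helper.

```python
import json


def summarize_results(body, list_key="news_results"):
    """Turn a JSON response body into (title, link) pairs.

    ASSUMPTION: the field names news_results, title and link are hypothetical;
    check them against an actual response.
    """
    data = json.loads(body)
    rows = []
    for item in data.get(list_key, []):
        title = item.get("title")
        link = item.get("link")
        if title and link:  # skip items missing either field
            rows.append((title, link))
    return rows
```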

How to Scrape Google News with Scrapeless

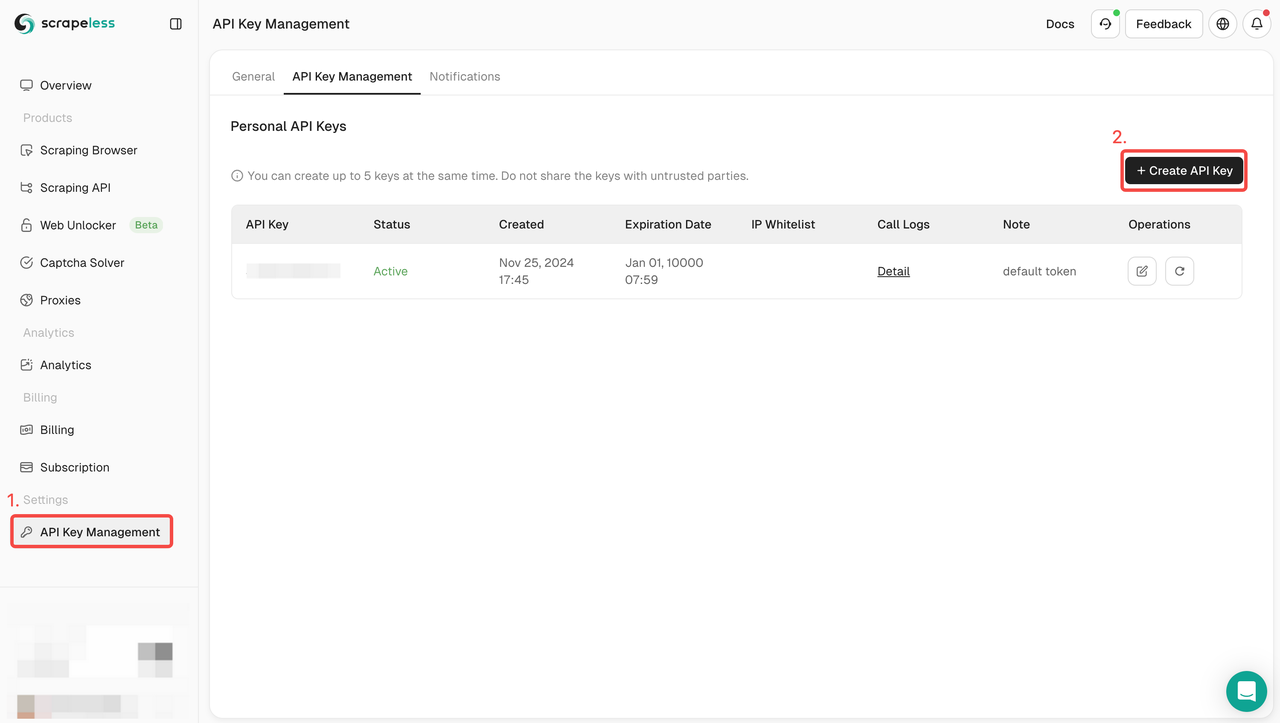

Step 1: Create your Scrapeless API Token

To get started, you’ll need to obtain your API Key from the Scrapeless Dashboard:

- Log in to the Scrapeless Dashboard.

- Navigate to API Key Management.

- Click Create to generate your unique API Key.

- Once created, simply click on the API Key to copy it.
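Rather than pasting the key into source code, you can keep it in an environment variable. A minimal sketch follows; the variable name `SCRAPELESS_API_TOKEN` and the helpers `load_api_token` and `auth_headers` are hypothetical conventions, not something Scrapeless requires. The `x-api-token` header name comes from the integration example later in this article.

```python
import os


def load_api_token(var_name="SCRAPELESS_API_TOKEN"):
    """Read the API key from an environment variable (hypothetical name)."""
    token = os.environ.get(var_name, "").strip()
    if not token:
        raise RuntimeError(f"Set the {var_name} environment variable to your Scrapeless API key.")
    return token


def auth_headers(token):
    # Header name taken from this article's integration example
    return {"x-api-token": token}
```

In a shell you would run something like `export SCRAPELESS_API_TOKEN=...` once, and the script picks it up without the key ever being committed.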

Join Scrapeless now to get a free trial; you can also join the Scrapeless Discord to participate in more free activities.

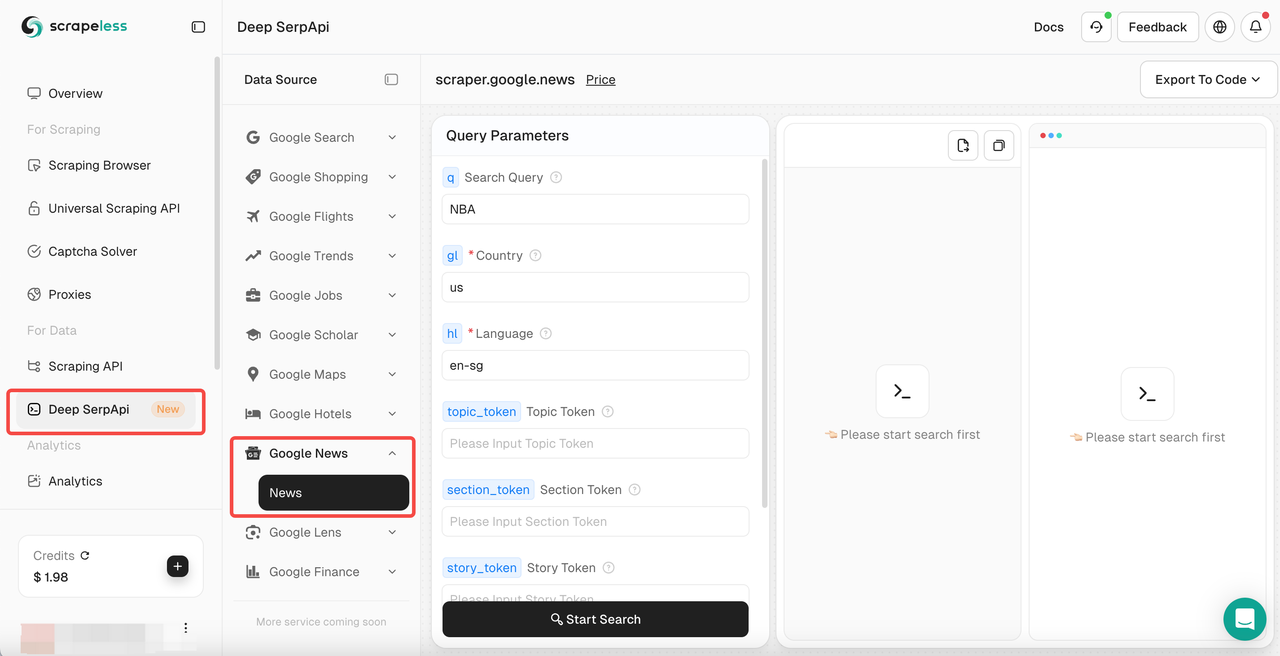

Step 2: Select Deep SerpApi, then select Google News

Step 3: Set the corresponding scraping parameters and click "Start Scraping"
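The parameters you set in Step 3 correspond to a small request body. The sketch below builds it with a hypothetical helper, `build_google_news_payload`; the `actor`, `engine`, `q`, `gl`, and `hl` fields mirror this article's integration example, while reading `gl` as a country code and `hl` as a language code is our interpretation rather than official documentation.

```python
import json


def build_google_news_payload(query, gl="us", hl="en"):
    """Build the request body used in this article's integration example.

    gl: country code, hl: interface language (our reading of the parameters).
    """
    if not query.strip():
        raise ValueError("query must not be empty")
    return {
        "actor": "scraper.google.news",
        "input": {"engine": "google_news", "q": query, "gl": gl, "hl": hl},
    }


if __name__ == "__main__":
    print(json.dumps(build_google_news_payload("pizza"), indent=2))
```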

You can also integrate Scrapeless into your project:

```python
import json

import requests


class Payload:
    """Request body: the scraper "actor" name plus its input parameters."""

    def __init__(self, actor, input_data):
        self.actor = actor
        self.input = input_data


def send_request():
    host = "api.scrapeless.com"
    url = f"https://{host}/api/v1/scraper/request"
    token = "your_token"  # replace with the API key from Step 1

    headers = {
        "x-api-token": token
    }

    # Google News query parameters: search term, country (gl) and language (hl)
    input_data = {
        "engine": "google_news",
        "q": "pizza",
        "gl": "us",
        "hl": "en",
    }

    payload = Payload("scraper.google.news", input_data)
    json_payload = json.dumps(payload.__dict__)  # {"actor": ..., "input": {...}}

    response = requests.post(url, headers=headers, data=json_payload)
    if response.status_code != 200:
        print("Error:", response.status_code, response.text)
        return

    print("body", response.text)


if __name__ == "__main__":
    send_request()
```

Tired of dealing with IP blocks, CAPTCHA, and constantly changing HTML structures?

With Scrapeless Google News API, you can bypass restrictions, extract real-time news data, and save hours of development time—all with a simple API call!

Benefits:

- Bypasses Anti-Scraping Mechanisms: No need to worry about IP blocking or CAPTCHAs.

- Handles Dynamic Content: The API provides real-time, dynamic data without the need for complex browser automation.

- Scalable and Cost-Effective: Whether you’re scraping a few articles or thousands, Scrapeless scales with your needs.
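Scaling from one query to many mostly means issuing several requests in parallel. The sketch below uses the standard library's `ThreadPoolExecutor`; `fetch_many` is a hypothetical helper, and `send` stands in for a function that posts one query (for example, a variant of `send_request()` that takes the query and returns the response body). The `max_workers` default is arbitrary; choose a value your plan's rate limits allow.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_many(queries, send, max_workers=4):
    """Run send(query) for each distinct query in parallel; return {query: result}."""
    unique = list(dict.fromkeys(queries))  # drop duplicate queries, keep order
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = pool.map(send, unique)  # results come back in input order
        return dict(zip(unique, results))
```

Injecting `send` keeps the concurrency logic separate from the HTTP details, which also makes it easy to test without network access.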

Best Use Case:

Perfect for large-scale, enterprise-level data scraping needs where speed, accuracy, and legal compliance are crucial.

FAQs About Scraping Google News

Q1: Can I use Scrapy or Selenium for large-scale Google News scraping?

Scrapy and Selenium can work for scraping Google News, but they are not ideal for large-scale operations. They are prone to being blocked and are resource-intensive. Scrapeless provides a better solution for high-volume scraping.

Q2: How do I handle Google’s anti-scraping mechanisms?

Google employs CAPTCHA, rate-limiting, and IP blocking to prevent scraping. With Scrapeless, the API deals with these measures on its side, so your code only sends standard API requests and receives the data.

Q3: What’s the best tool for scraping Google News?

For most users, Scrapeless is the best tool for scraping Google News. It’s faster, more reliable, and doesn’t require any maintenance. It’s the most efficient option, especially for businesses.

Conclusion

In this article, we’ve explored three methods to scrape Google News: using Requests & BeautifulSoup, Selenium, and Scrapeless. While Requests & BeautifulSoup and Selenium are viable options for smaller-scale scraping, Scrapeless offers the most efficient and scalable solution, particularly for enterprise-level needs. It’s faster, more reliable, and cost-effective, making it the best choice for businesses and developers.

Try Scrapeless today with a free trial and experience hassle-free scraping for Google News and other platforms.


At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.