TLS Fingerprinting: What Is It?

Expert Network Defense Engineer

In this article, you'll learn what TLS fingerprinting is and how Scrapeless, a company offering web unlocker, CAPTCHA solver, and proxy services, uses it to mask proxies and enhance web scraping.

Understanding TLS Fingerprinting

TLS (Transport Layer Security) is a widely used encryption protocol that protects communications between web clients and servers. Every connection to a secure website begins with a TLS handshake:

Before the handshake can begin, the server must accept the TCP connection request from your web browser or client. The client then sends a ClientHello message to the server to start the TLS handshake. This message describes the client's preferences and capabilities, including supported TLS versions, extensions, and cipher suites. After receiving it, the server compares the cipher suites listed in the ClientHello with the ones it supports and selects one that both sides share. The server then replies with a ServerHello message containing the selected cipher suite and TLS protocol version, followed by its security certificate, which contains the public key.

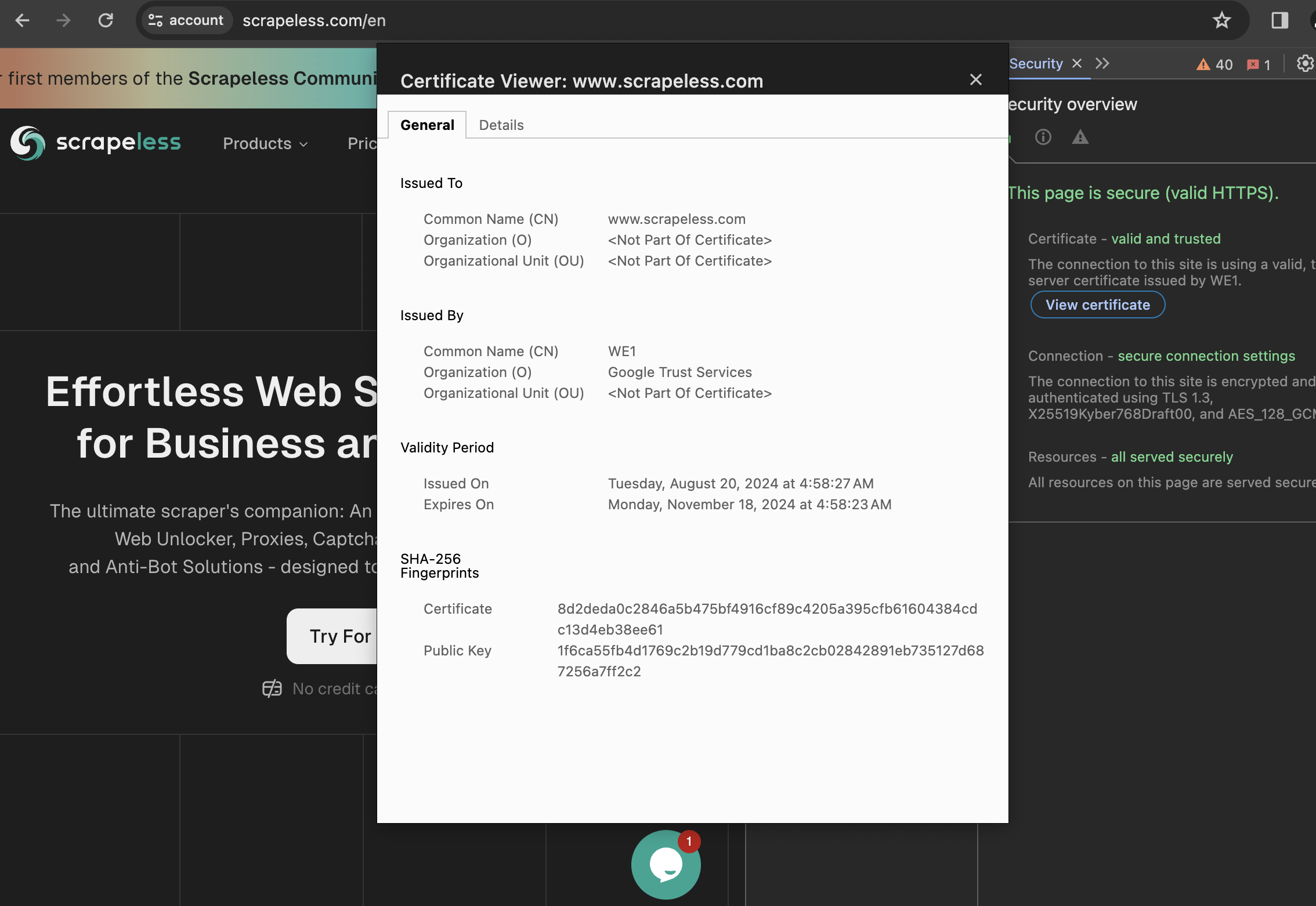
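To make the contents of a ClientHello concrete, the following is a minimal sketch that captures the ClientHello Python's own `ssl` module would send (using in-memory buffers, so no network access is needed) and reads a few fields from it. The function names `capture_client_hello` and `parse_client_hello` and the hostname `example.com` are illustrative assumptions, and the parser assumes the whole message fits in a single, unfragmented TLS record.

```python
import ssl
import struct


def capture_client_hello(hostname: str = "example.com") -> bytes:
    """Return the raw bytes of the first TLS record Python would send."""
    ctx = ssl.create_default_context()
    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    tls = ctx.wrap_bio(incoming, outgoing, server_hostname=hostname)
    try:
        tls.do_handshake()
    except ssl.SSLWantReadError:
        pass  # expected: there is no server reply to read
    return outgoing.read()


def parse_client_hello(record: bytes) -> dict:
    """Extract version, cipher suites and extension types from a ClientHello."""
    if record[0] != 0x16:
        raise ValueError("not a TLS handshake record")
    pos = 5  # record header: type (1) + version (2) + length (2)
    if record[pos] != 0x01:
        raise ValueError("not a ClientHello")
    pos += 4  # handshake header: type (1) + length (3)
    (legacy_version,) = struct.unpack_from("!H", record, pos)
    pos += 2 + 32  # version field + 32 random bytes
    pos += 1 + record[pos]  # session ID length byte + session ID
    (suites_len,) = struct.unpack_from("!H", record, pos)
    pos += 2
    suites = list(struct.unpack_from(f"!{suites_len // 2}H", record, pos))
    pos += suites_len
    pos += 1 + record[pos]  # compression methods length byte + methods
    (ext_total,) = struct.unpack_from("!H", record, pos)
    pos += 2
    end = pos + ext_total
    extensions = []
    while pos < end:
        ext_type, ext_len = struct.unpack_from("!HH", record, pos)
        extensions.append(ext_type)
        pos += 4 + ext_len  # extension header + extension body
    return {
        "legacy_version": legacy_version,
        "cipher_suites": suites,
        "extensions": extensions,
    }


hello = parse_client_hello(capture_client_hello())
print(hex(hello["legacy_version"]))
print(len(hello["cipher_suites"]), "cipher suites offered")
print("extension types:", hello["extensions"])
```

Everything printed here is read from bytes the client sends in the clear, which is why a server can inspect these fields before any encryption is in place.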

After validating the server's certificate against a trusted certificate authority, the client replies with a premaster secret encrypted with the server's public key. The server decrypts the premaster secret, and both sides derive the same session key from it, which establishes the secure connection. (This is the classic RSA key exchange; TLS 1.3 replaces it with an ephemeral Diffie-Hellman exchange, but the ClientHello still comes first, and it is the message that fingerprinting relies on.) For instance, when you visit scrapeless.com, you can view the TLS certificate it presents in your browser's connection details.

Each web browser or client is built on a particular TLS library, and each library supports a different set of cipher suites and extensions. For example, Firefox depends on the Network Security Services (NSS) library; Chrome uses Google's open-source BoringSSL library; Python uses OpenSSL; Safari relies on Apple's own TLS implementation, Secure Transport; and the legacy (pre-Chromium) version of Microsoft Edge used Schannel, whereas the current Chromium-based Edge shares Chrome's network stack.
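As a rough illustration of how the library and its configuration shape what a client offers, this sketch uses Python's standard `ssl` module to print the TLS library it is linked against and the cipher suites it is prepared to offer. The cipher string `"ECDHE+AESGCM"` is an arbitrary example chosen for the comparison, not a recommendation.

```python
import ssl

# The TLS library this Python build is linked against (typically OpenSSL).
print(ssl.OPENSSL_VERSION)

# Cipher suites enabled in Python's default client configuration.
default_ctx = ssl.create_default_context()
default_names = [c["name"] for c in default_ctx.get_ciphers()]

# The same client with a narrower cipher configuration (TLS 1.2 and below;
# TLS 1.3 suites are configured separately by the library).
restricted_ctx = ssl.create_default_context()
restricted_ctx.set_ciphers("ECDHE+AESGCM")
restricted_names = [c["name"] for c in restricted_ctx.get_ciphers()]

print(len(default_names), "suites by default")
print(len(restricted_names), "suites after restricting")
```

Because the cipher list is part of the ClientHello, a change like this alters what the server sees, and with it the fingerprint.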

Using the data in a client's ClientHello message, a TLS fingerprint can be computed and compared with the TLS configuration expected for each web browser.
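The article does not name a specific fingerprinting scheme. As one example, the sketch below follows the publicly documented JA3 format, which joins five ClientHello fields into a string and hashes it with MD5. The function names and the sample values are hypothetical and do not come from a real browser capture.

```python
import hashlib

# GREASE values (0x0A0A, 0x1A1A, ..., 0xFAFA) are random placeholders that
# some clients insert; JA3 ignores them so the fingerprint stays stable.
GREASE = {0x0A0A + 0x1010 * i for i in range(16)}


def ja3_string(version, ciphers, extensions, curves, point_formats) -> str:
    """Build the JA3 string: five comma-separated, dash-joined fields."""
    def join(values):
        return "-".join(str(v) for v in values if v not in GREASE)

    return ",".join([
        str(version),
        join(ciphers),
        join(extensions),
        join(curves),
        join(point_formats),
    ])


def ja3_hash(version, ciphers, extensions, curves, point_formats) -> str:
    """MD5 hex digest of the JA3 string (used as an identifier, not for security)."""
    text = ja3_string(version, ciphers, extensions, curves, point_formats)
    return hashlib.md5(text.encode("ascii"), usedforsecurity=False).hexdigest()


# Hypothetical ClientHello values, for illustration only.
sample = dict(
    version=771,                    # 0x0303
    ciphers=[0x0A0A, 4865, 4866],   # the GREASE value is dropped
    extensions=[0, 10, 11],
    curves=[29, 23],
    point_formats=[0],
)
print(ja3_string(**sample))
print(ja3_hash(**sample))
```

Two clients that offer the same values in the same order produce the same hash, so the order of cipher suites and extensions matters as much as their presence.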

A fingerprint can help identify a client's operating system and web browser. A server can also use it to watch for unusual requests, such as those whose HTTP headers do not match their TLS fingerprint.

TLS Fingerprinting and Anonymous Proxies

TLS fingerprinting is another technique that online companies and organizations use to manage and safeguard their web traffic. It lets them deny access to particular web clients, bots, or even whole regions. Masking your IP address, rotating proxies, and stripping or altering User-Agent headers are no longer sufficient: even when the User-Agent is disguised, TLS fingerprinting can still determine the underlying client's characteristics from the handshake parameters. Every connection attempt can be compared against a set of known TLS fingerprints and flagged as unusual traffic if it does not fit.

TLS fingerprinting is a workable security precaution for your website traffic, but it is not infallible. As more companies develop and employ TLS fingerprinting technologies for anti-bot defenses, new ways to get around TLS fingerprinting are developed.

To evade detection or blocking, proxy services often try to blend user traffic in with genuine traffic. To account for TLS fingerprinting, certain proxy services (such as Scrapeless) offer proxies that imitate the TLS fingerprints of popular clients or apps. This enhances anonymity by making the proxy traffic look like real connections.

Web Scraping and TLS Fingerprinting

Beyond its dual role of regulating and safeguarding traffic for web businesses and improving anonymity for proxy users, TLS fingerprinting gives businesses a new lens through which to examine their web traffic.

By revealing patterns in web traffic, TLS fingerprinting makes it possible to distinguish real traffic from automated traffic. Web scrapers and bots that make repeated requests can be recognized by their TLS fingerprint and blocked from the website. Bot traffic can also be flagged quickly as suspicious when its TLS fingerprint is inconsistent with the device class it claims (OS, browser name, or browser version). A web scraper, for example, may send the browser headers of a Firefox client while its requests lack the TLS fingerprint that Firefox browsers usually have.
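The consistency check described above can be sketched roughly as follows. The fingerprint strings in `KNOWN_FINGERPRINTS` are made-up placeholders, and the User-Agent parsing is deliberately simplistic; a real system would rely on a maintained fingerprint database and a proper User-Agent parser.

```python
from typing import Optional

# Hypothetical mapping from TLS fingerprint to the client family that
# normally produces it. The keys are placeholders, not real fingerprints.
KNOWN_FINGERPRINTS = {
    "fp-firefox-example": "firefox",
    "fp-chrome-example": "chrome",
    "fp-python-example": "python",
}


def family_from_user_agent(user_agent: str) -> Optional[str]:
    """Very rough guess at the browser family claimed by a User-Agent header."""
    ua = user_agent.lower()
    if "firefox/" in ua:
        return "firefox"
    if "chrome/" in ua:
        return "chrome"
    if "python" in ua:
        return "python"
    return None


def check_consistency(user_agent: str, fingerprint: str) -> str:
    """Return 'match', 'mismatch', or 'unknown' for a header/fingerprint pair."""
    claimed = family_from_user_agent(user_agent)
    observed = KNOWN_FINGERPRINTS.get(fingerprint)
    if claimed is None or observed is None:
        return "unknown"
    return "match" if claimed == observed else "mismatch"


firefox_ua = "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0"
print(check_consistency(firefox_ua, "fp-firefox-example"))
print(check_consistency(firefox_ua, "fp-python-example"))
```

The second call models the scraper from the example in the text: Firefox headers arriving over a handshake that looks like a Python client.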

To strengthen this check, anti-scraping services gather large collections of TLS fingerprints. They use these collections to identify the signatures of common browsers and to add the fingerprints of common web-scraping tools to a blacklist. Because anti-scraping systems rely on these fingerprints, data collection platforms such as Scrapeless maintain their own library of TLS fingerprints. By using the fingerprints of actual online users, they can simulate genuine web traffic more accurately.
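How such collections are actually built is not described here. As one plausible sketch, the snippet below derives an allow list and a block list from labeled observations; the labels, the `min_count` threshold, and the fingerprint strings are all assumptions made for illustration.

```python
from collections import Counter


def build_lists(observations, min_count=2):
    """Derive (allow, block) fingerprint sets from labeled observations.

    `observations` is an iterable of (fingerprint, label) pairs, where the
    label is either "browser" or "scraper". A fingerprint must be seen at
    least `min_count` times under a label to be listed.
    """
    counts = {"browser": Counter(), "scraper": Counter()}
    for fingerprint, label in observations:
        counts[label][fingerprint] += 1

    allow = {fp for fp, n in counts["browser"].items() if n >= min_count}
    # A fingerprint shared with real browsers is not blocked outright.
    block = {fp for fp, n in counts["scraper"].items() if n >= min_count} - allow
    return allow, block


# Hypothetical observations, for illustration only.
observed = [
    ("fp-a", "browser"), ("fp-a", "browser"), ("fp-a", "scraper"),
    ("fp-b", "scraper"), ("fp-b", "scraper"),
    ("fp-c", "scraper"),
]
allow, block = build_lists(observed)
print("allow:", sorted(allow))
print("block:", sorted(block))
```

Subtracting the allow list from the block list reflects the trade-off in the text: a scraper that reproduces a real browser's fingerprint cannot be blocked on that fingerprint alone without also blocking genuine users.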

TLS Fingerprinting and Data Transfer

Finally, TLS fingerprinting is a simple and efficient way to identify user clients. Unlike checks such as CAPTCHAs, login/authentication forms, and deep packet inspection (DPI), it is non-invasive and does not obstruct communication. Because the ClientHello is sent before encryption begins, a server can compute the fingerprint without decrypting any traffic, and data transmission proceeds as usual.

Many websites use non-intrusive methods, such as IP address checks, user activity analysis, and TLS fingerprinting, to verify users before applying more stringent security measures. Presenting a legitimate TLS fingerprint therefore helps a client avoid intrusive checks and data transfer limitations.

Scrapeless supports smooth data transfer by creating customized TLS handshakes at the network level and dynamically generating User-Agent headers and other traffic characteristics so that requests resemble those of actual browsers. By handling fingerprinting, headers, and browser emulation together, the Scrapeless Web Unlocker improves website access and data transfer while keeping data collection efficient and inconspicuous.

Conclusion

TLS fingerprinting is flexible enough to benefit both web scraping and anti-scraping teams. It helps businesses identify potentially harmful activity and improve their analysis of web traffic trends. Companies that focus on gathering data, in turn, can use TLS fingerprints to blend in with a target website's traffic, which improves web scraping and proxy anonymity.

At Scrapeless, we only access publicly available data while strictly complying with applicable laws, regulations, and website privacy policies. The content in this blog is for demonstration purposes only and does not involve any illegal or infringing activities. We make no guarantees and disclaim all liability for the use of information from this blog or third-party links. Before engaging in any scraping activities, consult your legal advisor and review the target website's terms of service or obtain the necessary permissions.